David Sassoon is the founder and publisher of InsideClimate News, the nonpartisan and nonprofit news organization that won the Pulitzer Prize for National Reporting in 2013. He has worked as a writer, editor and publisher for 25 years on public interest issues, including human rights, cultural preservation, healthcare, education and the environment. In 2003, he began researching the business case for climate action for the Rockefeller Brothers Fund. As an outgrowth of that research, Sassoon founded a blog in 2007 that has since grown into InsideClimate News. He earned his undergraduate degree from Harvard University and a master’s degree from Columbia University’s Graduate School of Journalism. He is the author of “Tiny Specks in a Hurry: The Story of a Journey to Mustang.”

David Sassoon

Publisher

InsideClimate News

Explore more from The Transmitter

This paper changed my life: Ishmail Abdus-Saboor on balancing the study of pain and pleasure

A 2013 Nature paper from David Anderson’s lab revealed a group of sensory neurons involved in pleasurable touch and led Abdus-Saboor down a new research path.

Sex bias in autism drops as age at diagnosis rises

The disparity begins to level out after age 10, raising questions about why so many autistic girls go undiagnosed earlier in childhood.

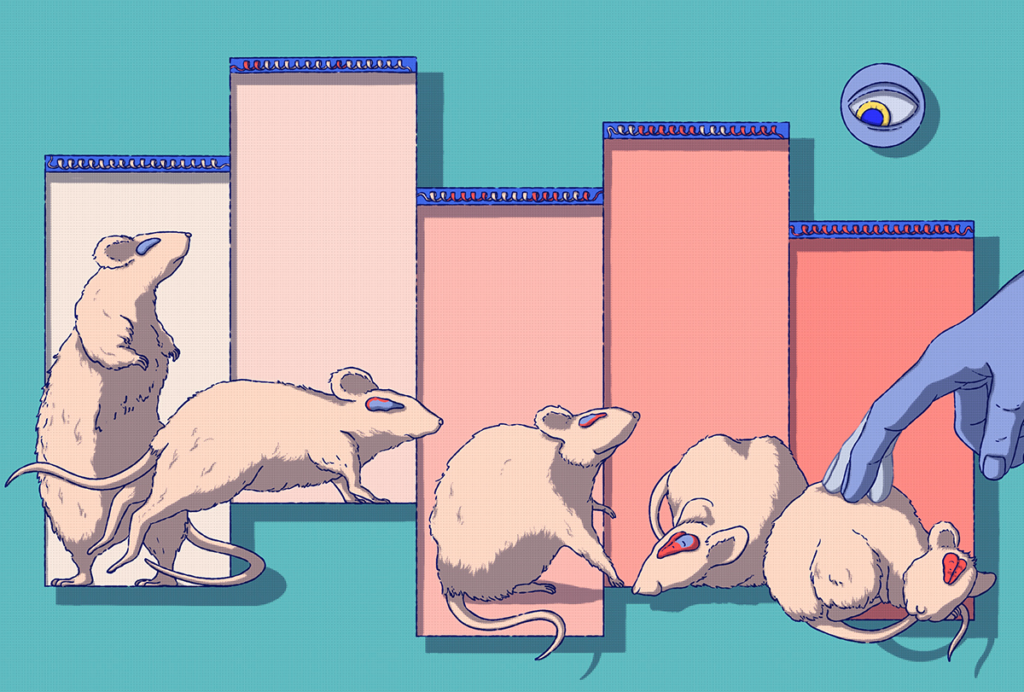

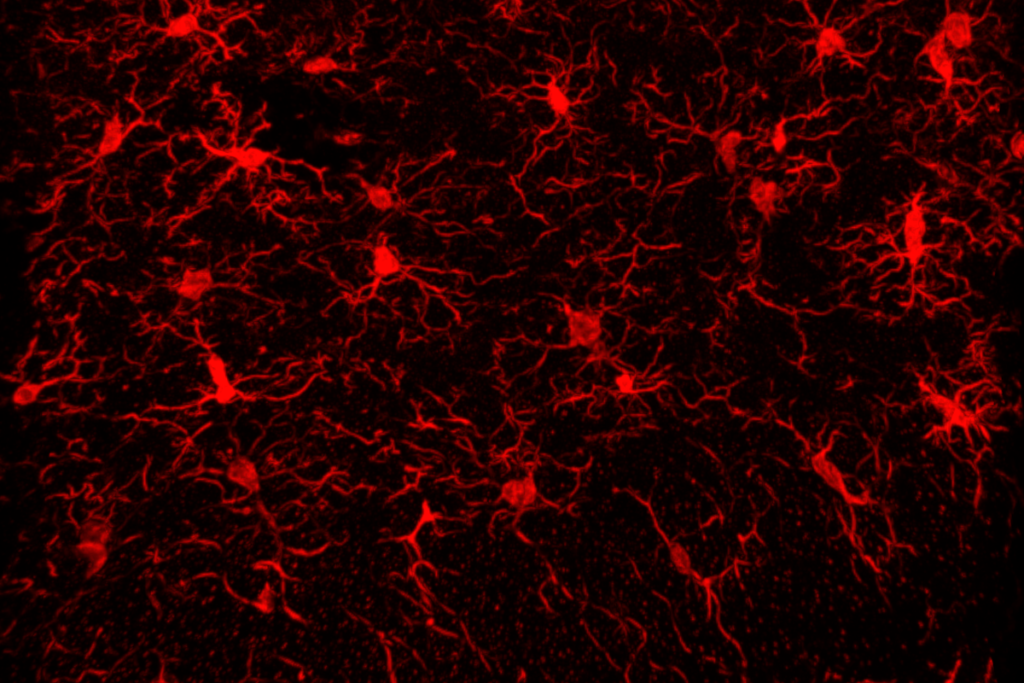

Microglia implicated in infantile amnesia

The glial cells could explain the link between maternal immune activation and autism-like behaviors in mice.