Morton Ann Gernsbacher is Vilas Research Professor and Sir Frederic Bartlett Professor of Psychology at the University of Wisconsin–Madison. She is a specialist in autism and psycholinguistics and has written and edited professional and lay books and over 100 peer-reviewed articles and book chapters on these subjects. She currently serves as co-editor of the journal Psychological Science in the Public Interest and associate editor for Cognitive Psychology, and she has previously held editorial positions for Memory & Cognition and Language and Cognitive Processes. She was also president of the Association for Psychological Science in 2007.

Morton Ann Gernsbacher

Professor

University of Wisconsin–Madison

From this contributor

Book review: “Neurotribes” recovers lost history of autism

Steve Silberman’s new book, “Neurotribes,” recounts his 15-year quest to understand “the legacy of autism.”

Explore more from The Transmitter

Marcelle Lapicque: A forgotten pioneer in neuroscience

Lapicque was the first Black woman neuroscientist in Europe, new research suggests.

In-vivo base editing in a mouse model of autism, and more

Here is a roundup of autism-related news and research spotted around the web for the week of 23 February.

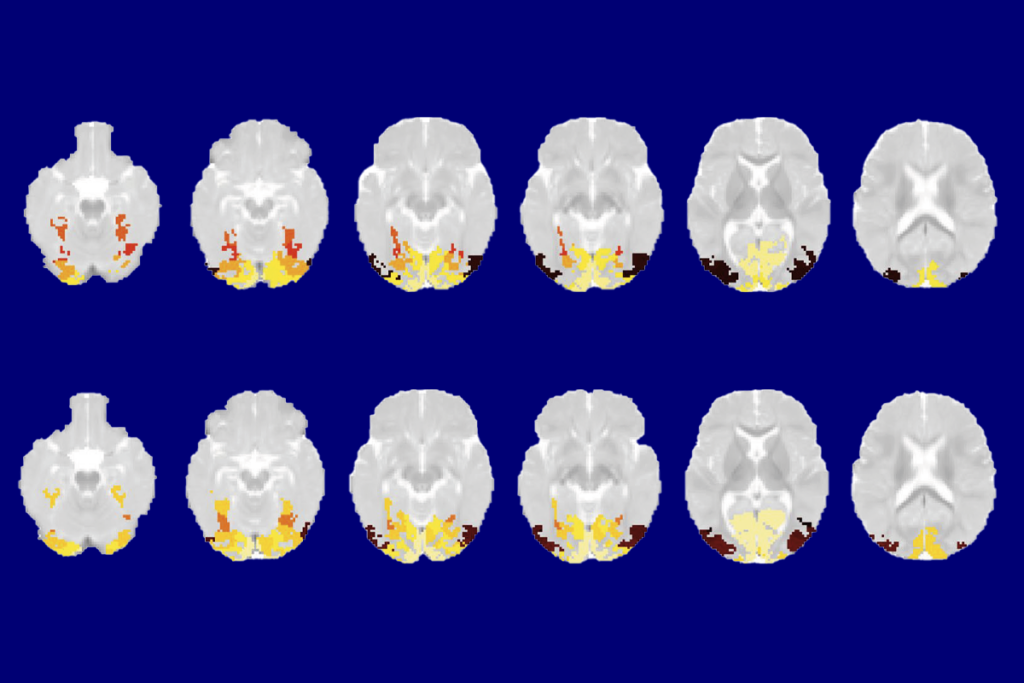

Infant visual system categorizes common objects by 2 months of age

Brain activity patterns in the ventral visual cortex appear to distinguish images across 12 categories, including birds and trees, longitudinal functional MRI scans suggest.
