How scuba diving helped me embrace open science

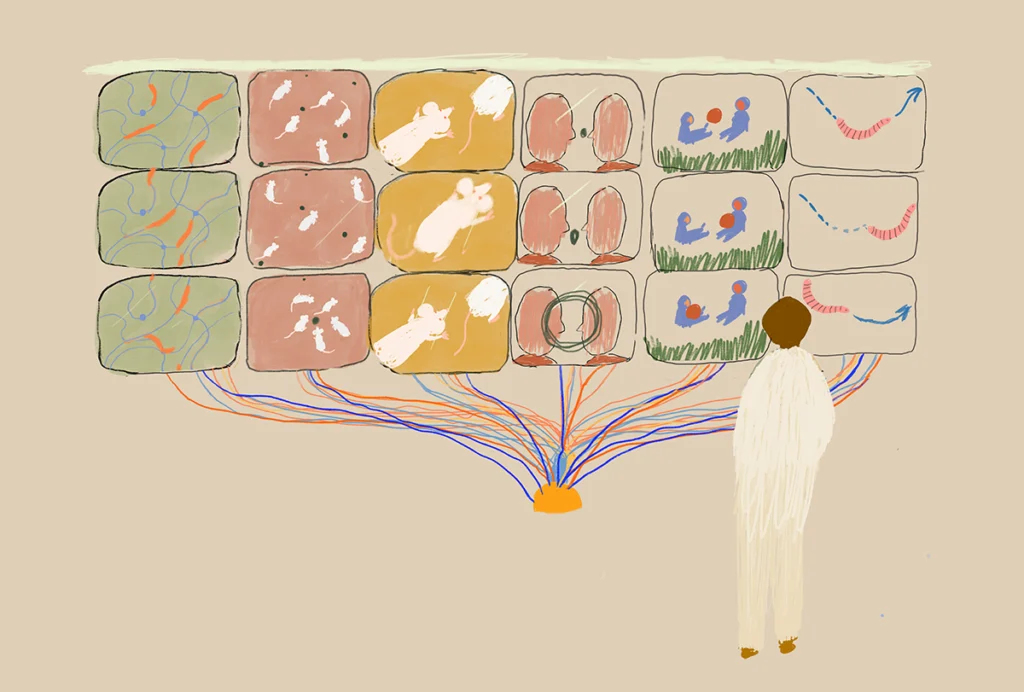

Our lab adopted practices to make data- and code-sharing feel safer, including having the coding equivalent of a dive buddy. Trainees call the buddy system a welcome safety net.

It is difficult to find someone in computational research who will publicly say they don’t support open-science practices. By increasing transparency, open science accelerates progress and enhances equity. Sharing data and code makes the most efficient use of data collected from volunteer research participants, maximizes the return on public investments and helps investigators at under-resourced institutions.

Open science also breeds careful science: Researchers who know that their work will be open for external review may be less likely to cut corners. At the same time, though — despite support for open science and all its concomitant benefits — fear of this scrutiny can deter even well-intentioned investigators from fully participating.

To overcome this hurdle, my lab has adopted practices to make data- and code-sharing feel safer. Notably, we created a reproducibility buddy system — inspired by the buddy system in scuba diving, in which divers pair up to monitor and help each other in case of trouble. The practice is time-consuming, but it has helped us catch mistakes early and has made lab members feel more comfortable sharing their work.

The impetus to develop this system grew from my own experience as a new faculty member. My biggest hang-up in embracing open science was simple: fear. Computational neuroscience can be complicated — lots of data and lots of code, all with many potential failure points. Code written by professional software developers includes approximately 20 to 70 bugs per 1,000 lines of code; code written by a brilliant but inexperienced graduate student likely includes far more. In a computational project with a complex code base, it is reasonable to assume there are many undiscovered bugs or errors.

The consequences of finding an error after results are published can be serious. Shortly after I started my lab, I found an error in a paper after it was published in a high-profile journal. Even though we caught the error ourselves, I was mortified. And the process of finding the error, understanding what results it affected, getting all stakeholders on board and working with the editor to correct the record was astonishingly time-intensive and personally draining. I imagine the process would have been even more stressful had an outside party relying on open code reported the error. Along the way, I also received an implicit message from colleagues: One erratum, you can still probably get tenure. Two? I wouldn’t bet on it. This is not a culture that drives one to embrace open science.

Over time, we have changed the way we work so that open science feels less scary. Perhaps the single most useful change is our “reproducibility buddy system.” This framework, which I am sure is not novel, occurred to me after scuba diving. Scuba diving is generally quite safe but requires significant equipment because of the inescapable reality that we cannot breathe under water. To reduce potentially dangerous errors, one always dives with a dive buddy, who is responsible for helping check equipment and assisting during a dive if problems arise. Compared with a basic scuba outing, most academic projects are far more complex. If we are required to have a buddy to dive, why don’t we have one for computational science?

The reproducibility buddy, which my lab members lovingly abbreviate to “reproducibilibuddy,” serves a roughly analogous role. At the start of a project, we identify a team member to reproduce the project. To align incentives, this person is almost always the second author of the published paper. The reproducibility buddy replicates the work at several key checkpoints, going over every line of code and making sure they can run the code and get the same results.
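To make "run the code and get the same results" concrete, here is a minimal Python sketch of how a buddy might compare their rerun against the numbers the first author reported. It is an illustration only, not our lab's actual tooling: the function name `compare_results`, the result names and the tolerance values are all hypothetical.

```python
import math


def compare_results(reported, rerun, rel_tol=1e-9, abs_tol=0.0):
    """Return a list of human-readable mismatches between two result dicts.

    Both arguments map a result name (str) to a number. The names and the
    default tolerances are illustrative assumptions.
    """
    mismatches = []
    for name in sorted(set(reported) | set(rerun)):
        if name not in rerun:
            mismatches.append(f"{name}: missing from rerun")
        elif name not in reported:
            mismatches.append(f"{name}: not in reported results")
        elif not math.isclose(reported[name], rerun[name],
                              rel_tol=rel_tol, abs_tol=abs_tol):
            mismatches.append(
                f"{name}: reported {reported[name]!r}, rerun {rerun[name]!r}"
            )
    return mismatches


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    reported = {"mean_accuracy": 0.8125, "n_participants": 120}
    rerun = {"mean_accuracy": 0.8125, "n_participants": 118}
    for line in compare_results(reported, rerun):
        print(line)
```

An empty list means every reported number was reproduced within tolerance. Comparing with a tolerance rather than exact equality is a design choice: floating-point results can differ slightly across machines and library versions even when the analysis is correct.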

Importantly, this process begins early in a project; nothing is worse than finding an error after a set of results has been polished to a high gloss ahead of submission to a journal. For example, one of the first major checkpoints occurs before we generate any results — we first reproduce all the nitty-gritty steps required to aggregate the data. Later, we ask the buddy to try to reproduce the first main result that becomes the anchor for any subsequent manuscript. Ahead of submission, the first author comprehensively cleans and comments on all code and creates a wiki that provides an overview of how to use it; the buddy’s task is to reproduce the primary results using only this documentation. Finally, this process is updated as the work evolves in the revision process.
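For the first checkpoint, reproducing the data-aggregation steps, one possible check is to compare checksums of the files each person produces. The Python sketch below assumes that aggregation is deterministic and writes its outputs to a directory. The function names and directory layout are hypothetical, and this is one way such a check could be done rather than a description of our pipeline.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(directory):
    """Map each file's path (relative to directory) to its SHA-256 digest."""
    root = Path(directory)
    return {
        path.relative_to(root).as_posix(): sha256_of_file(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def differing_files(author_manifest, buddy_manifest):
    """Return sorted names of files that are missing or differ between runs."""
    names = set(author_manifest) | set(buddy_manifest)
    return sorted(
        name for name in names
        if author_manifest.get(name) != buddy_manifest.get(name)
    )
```

The first author and the buddy would each call `build_manifest` on their own output directory and pass the two manifests to `differing_files`. An empty list means the aggregated files are byte-identical. Outputs that embed timestamps or depend on unseeded randomness will differ even when the logic is correct, so a check like this only suits deterministic steps.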

Sadly, this all takes time. Writing clean, well-commented code that can be easily replicated takes longer than hacked-together one-offs. Even under ideal circumstances, performing the replication itself is labor intensive. As in so many other domains, there is a real speed-versus-accuracy trade-off. Furthermore, the system is far from foolproof; results can be “reproducible but wrong” — code that runs and returns the reported result but is based on flawed scientific logic or a misunderstanding of the output.

When we first began piloting the buddy system five years ago, I anticipated pushback from the team. To my surprise, the opposite has occurred. Trainees report that the buddy system feels like a welcome safety net. Although replicating someone else’s work is time-intensive, it is also a great exercise in code review that helps both parties learn from each other. Perhaps the single most common reaction is relief: The buddy system allows everyone to sleep better at night, knowing that their results have been vetted. With time, I have repeatedly learned that when we fail to use the checkpoints built into this buddy system, we do so at our own peril: Errors that should have been caught early on are found later and are far more costly to correct.

Implementing specific practices for reproducibility has helped our lab members be less afraid of, and even love, open computational science. However, it is not a replacement for systematic change. As detailed elsewhere, there are multiple opportunities to encourage open science at every stage of the scientific life cycle. Granting agencies can prioritize data-sharing and re-use, open code and replication of findings. Top journals could expand options for published data descriptors to ensure credit for sharing data. Editors could enforce standards for data- and code-sharing, allow for registered reports and encourage updated results. Academic appointment and promotion committees could de-emphasize numerical measures of productivity and instead reward open practices and results that replicate. Perhaps most ambitiously, academic journals could compensate peer reviewers, making it financially feasible for results to be independently replicated as part of the review process. Although there have been encouraging developments on many fronts in open science, changing the existing consensus is inevitably slow. For now, we find that, as in diving, doing open science with a buddy is both safer and more fun.

University of Pennsylvania

Recommended reading

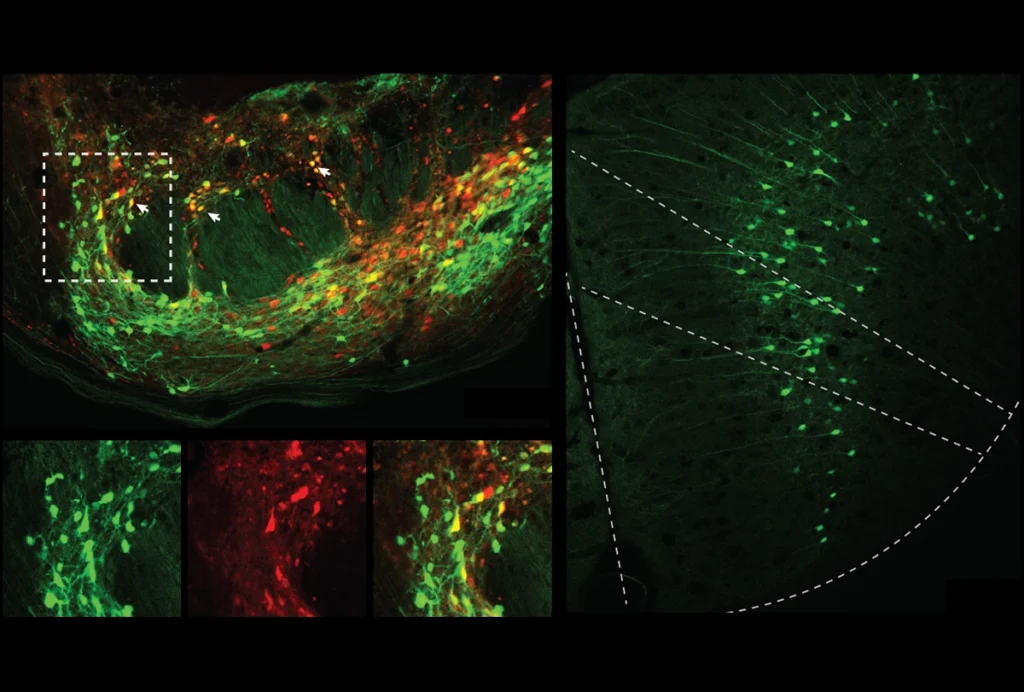

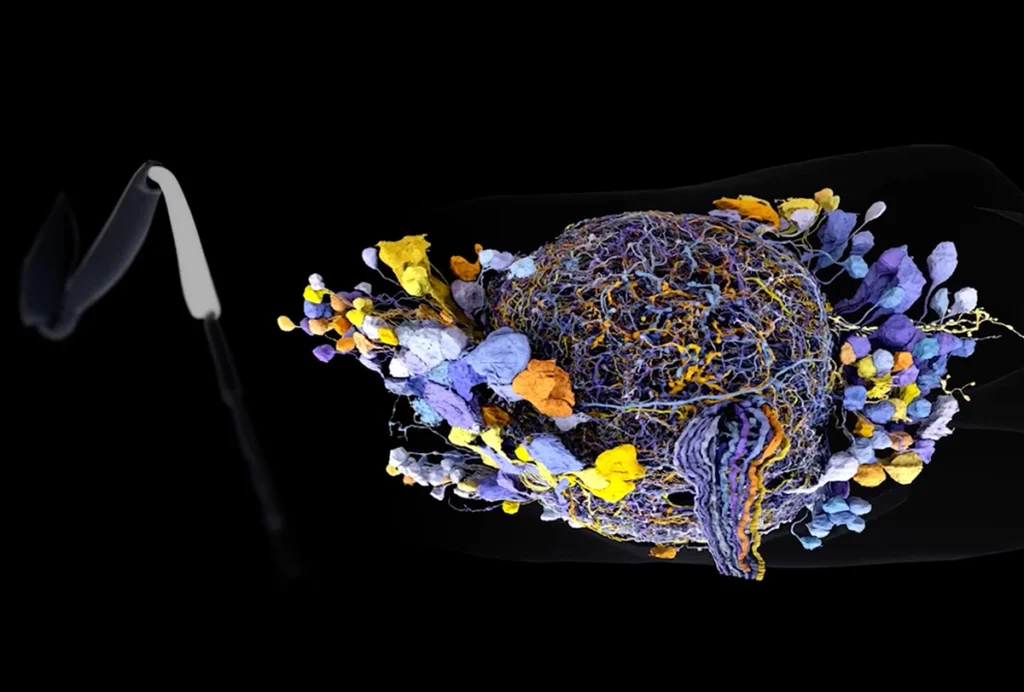

Designing an open-source microscope

Neuroscience needs a research-video archive

Explore more from The Transmitter

Building an autism research registry: Q&A with Tony Charman