Earl K. Miller is Picower Professor of Neuroscience at the Massachusetts Institute of Technology, with faculty roles in the Picower Institute for Learning and Memory and the Department of Brain and Cognitive Sciences. His lab focuses on neural mechanisms of cognition, especially working memory, attention and executive control, using both experimental and computational methods. He holds a B.A. from Kent State University and an M.A. and Ph.D. from Princeton University. In 2020, he received an honorary Doctor of Science degree from Kent State University.

Earl K. Miller

Professor of neuroscience

Massachusetts Institute of Technology

Selected articles

- “An integrative theory of prefrontal cortex function” | Annual Review of Neuroscience

- “Top-down versus bottom-up control of attention in the prefrontal and posterior parietal cortices” | Science

- “The importance of mixed selectivity in complex cognitive tasks” | Nature

- “Gamma and beta bursts during working memory readout suggest roles in its volitional control” | Nature Communications

Explore more from The Transmitter

Frameshift: Raphe Bernier followed his heart out of academia, then made his way back again

After a clinical research career, an interlude at Apple and four months in early retirement, Raphe Bernier found joy in teaching.

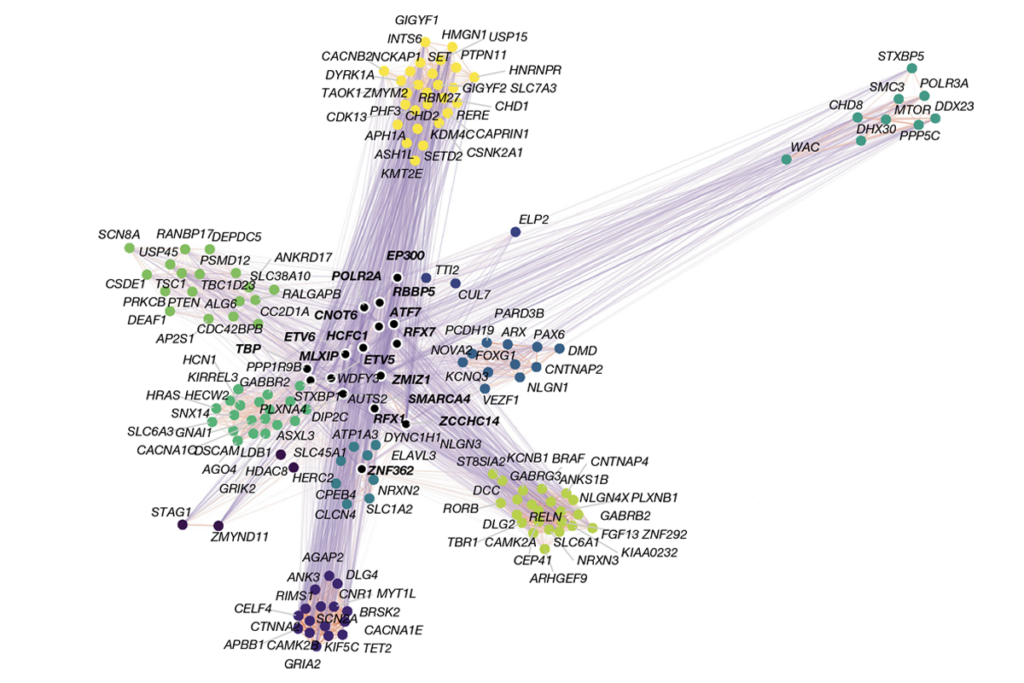

Organoid study reveals shared brain pathways across autism-linked variants

The genetic variants initially affect brain development in unique ways, but over time they converge on common molecular pathways.

Single gene sways caregiving circuits, behavior in male mice

Brain levels of the agouti gene determine whether African striped mice are doting fathers—or infanticidal ones.
