Elizabeth Preston is a science writer and editor in the Boston area. She has written for The Atlantic, Wired, Jezebel and the Boston Globe, among other publications. Her blog, Inkfish, is published by Discover.

Elizabeth Preston

From this contributor

Test paints quick picture of intelligence in autism

A picture-based test is a fast and flexible way to assess intelligence in large studies of people with autism.

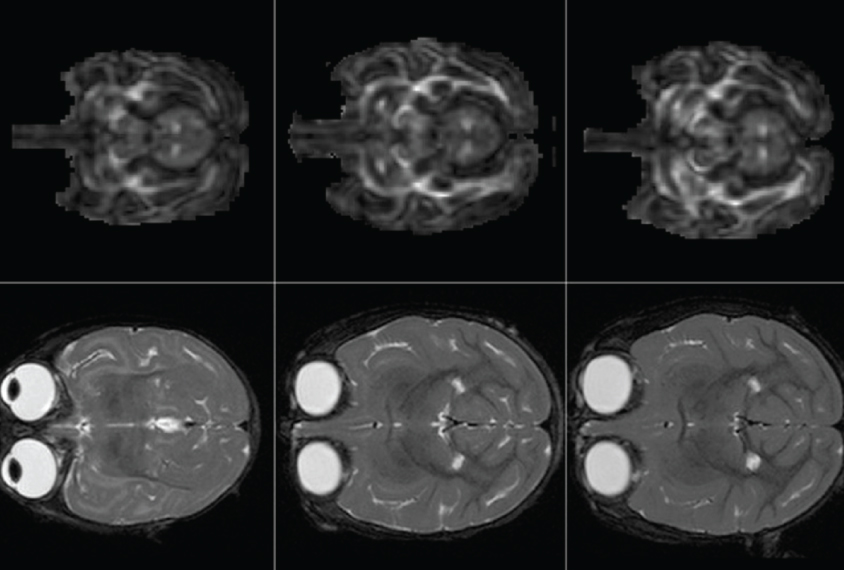

New atlases chart early brain growth in monkeys

A collection of brain scans from monkeys aged 2 weeks to 12 months reveals how their brain structures and nerve tracts develop over time.

Work in progress: An inside look at autism’s job boom

Splashy corporate initiatives aim to hire people with autism, but finding and keeping work is still a struggle for those on the spectrum. Can virtual avatars and for-profit startups help?

Explore more from The Transmitter

Neuroscience needs single-synapse studies

Studying individual synapses has the potential to help neuroscientists develop new theories, better understand brain disorders and reevaluate 70 years of work on synaptic transmission plasticity.

New insights on sex bias in autism, and more

Here is a roundup of autism-related news and research spotted around the web for the week of 16 February.

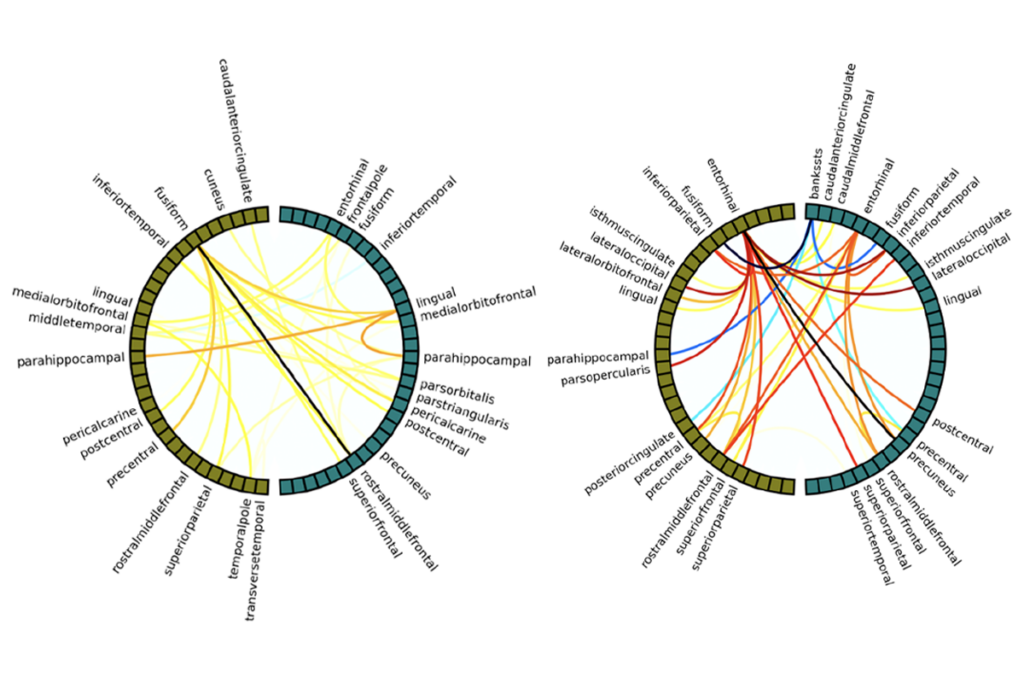

Neuroscience has a species problem

If our field is serious about building general principles of brain function, cross-species dialogue must become a core organizing principle rather than an afterthought.
