Rahul Rao is a freelance science writer, a graduate of New York University’s Science, Health and Environmental Reporting Program, and a “Doctor Who” fan.

Rahul Rao

From this contributor

Web app tracks pupil size in people, mice

The app relies on artificial intelligence and could help researchers standardize studies of pupil differences in autistic people and in mouse models of autism.

New library catalogs the human gut microbiome

Researchers put hundreds of gut bacteria strains through their paces to chart the compounds each creates — and to help others explore the flora's potential contribution to autism.

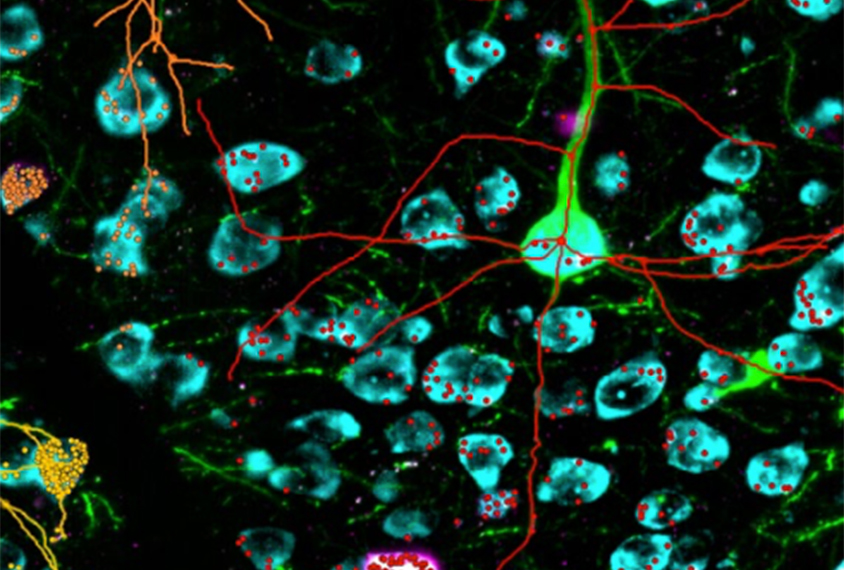

New unified toolbox traces, analyzes neurons

‘SNT’ helps researchers sift through microscope images to reconstruct and analyze neurons and their connections.

Explore more from The Transmitter

Let’s teach neuroscientists how to be thoughtful and fair reviewers

Blanco-Suárez revamped the traditional journal club by developing a course in which students peer review preprints alongside the published papers that evolved from them.

New autism committee positions itself as science-backed alternative to government group

The Independent Autism Coordinating Committee plans to meet at the same time as the U.S. federal Interagency Autism Coordinating Committee later this month—and offer its own research agenda.

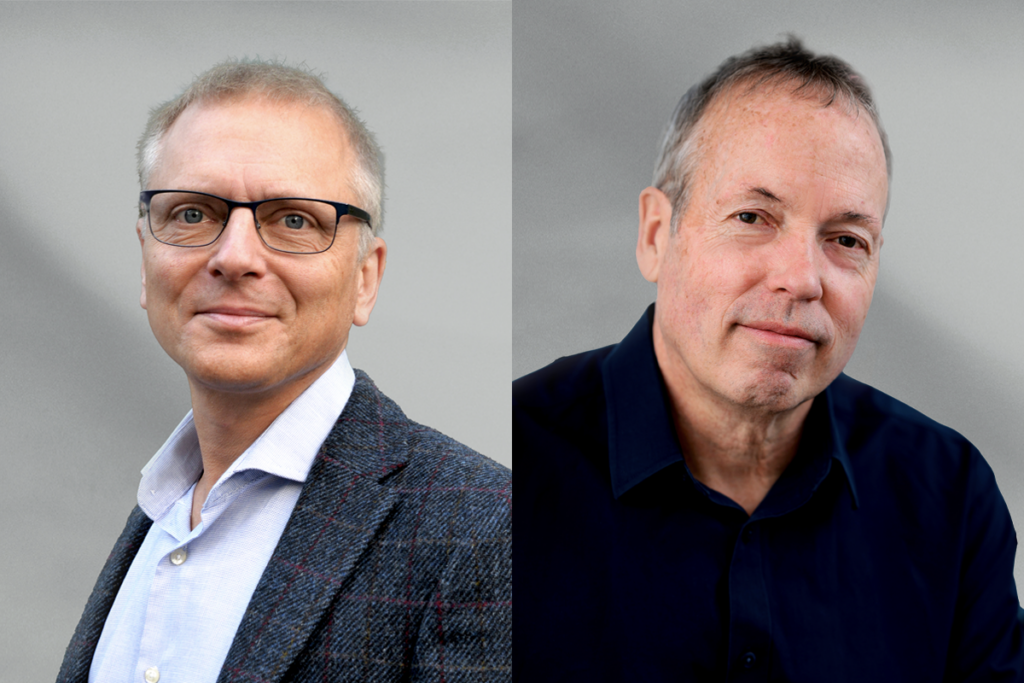

Two neurobiologists win 2026 Brain Prize for discovering mechanics of touch

Research by Patrik Ernfors and David Ginty has delineated the diverse cell types of the somatosensory system and revealed how they detect and discriminate among different types of tactile information.