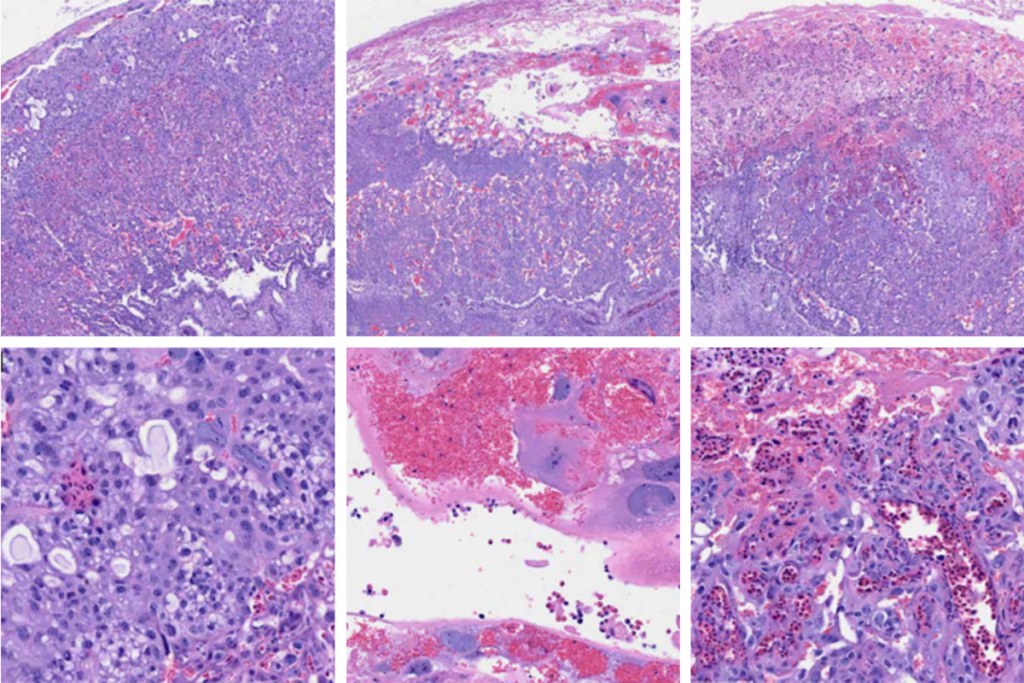

Steve Ramirez is an assistant professor of psychological and brain sciences at Boston University and a former junior fellow at Harvard University. He received his B.A. in neuroscience from Boston University and began researching learning and memory in Howard Eichenbaum’s lab. He went on to receive his Ph.D. in neuroscience in Susumu Tonegawa’s lab at the Massachusetts Institute of Technology, where his work focused on artificially modulating memories in the rodent brain. Ramirez’s current work focuses on imaging and manipulating memories to restore health in the brain.

Both in and out of the lab, Ramirez is an outspoken advocate for making neuroscience accessible to all. He is passionate about diversifying and magnifying the voices in our field through intentional mentorship, an approach for which he recently received a Chan-Zuckerberg Science Diversity Leadership Award. He has also received an NIH Director’s Transformative Research Award, the Smithsonian’s American Ingenuity Award and the National Geographic Society’s Emerging Explorer Award. He has been recognized on Forbes’ 30 Under 30 list and MIT Technology Review’s Top 35 Innovators Under 35 list, and he has given two TED Talks.