Individual neurons in the cerebral cortex are finely tuned to the sounds of human speech—beyond just picking out consonants and vowels, two new independent studies show. The cells encode small sounds called phonemes that are said in a similar way; the order in which syllables are spoken; the beginning of sentences; vocal pitch; and word stress, among other features of speech.

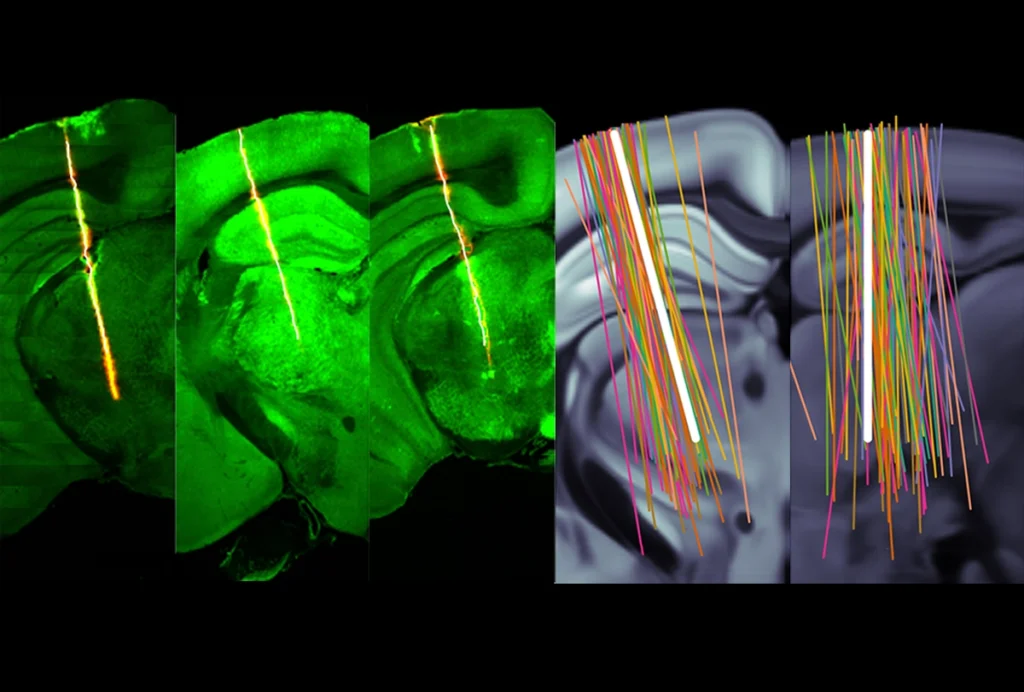

The studies were able to reveal this new level of detail by using Neuropixels probes, which capture the activity of hundreds of individual neurons across all layers of the cortex. Past recordings made from the surface of the brain have found groups of neurons that tune to sequences of sound or certain articulation movements, such as the height of the tongue when people say vowels or the nasal sounds in the consonants “m” and “n.”

Now the Neuropixels data extend that 2D view into 3D and offer a “more fine-grain view of what is happening across different cortical layers,” says Kara Federmeier, professor of psychology and neuroscience at the University of Illinois, who was not involved in either study but studies speech by using electroencephalogram recordings from outside the skull. “It’s difficult to get that kind of information at any scale in humans.”

I

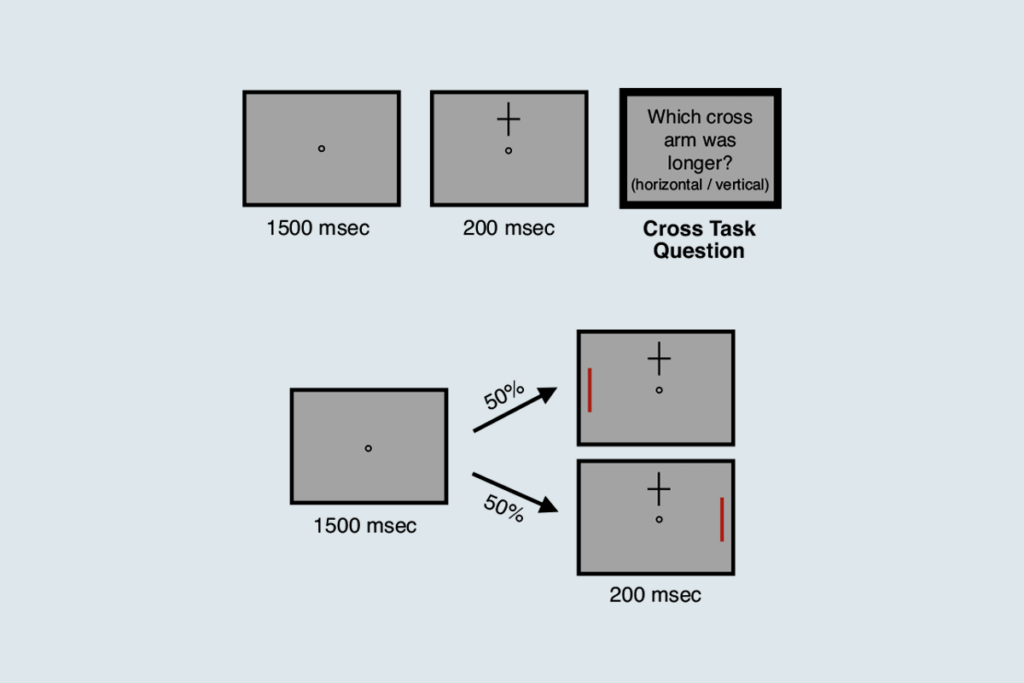

n one of the new studies, the Neuropixels probes recorded the posterior middle frontal gyrus of the prefrontal cortex in five people undergoing surgery for deep brain stimulation. Participants each spoke hundreds of words during various trials, describing such scenes as a boy chasing a dog.Roughly half of the 272 recorded neurons changed their activity in preparation for words about to be spoken, the researchers found, and about 20 percent fired before specific groups of phonemes were said aloud.

The team trained decoding software on the recorded neural activity from 75 percent of the total words spoken across participants and tested it on the remaining 25 percent of words. Because some sounds occur more frequently than others, the team normalized the decoder's performance based on how likely the sounds are to occur in words. “It keeps you honest,” says lead investigator Ziv Williams, associate professor of neurosurgery at Massachusetts General Hospital. The software was able to correctly choose between words with and without a given speech sound about 75 percent of the time.

Different neurons fired when the participants listened to recordings of their own speech, but the software could use the activity from 37 listening neurons to correctly classify words with or without certain phoneme groups 70 percent of the time, according to the study, which was published in Nature in January.

Many neurons, the recordings show, encode a mixture of sound features, such as the position of phonemes and syllables in upcoming words and morphemes, which are units of language that add meaning to a word, such as the suffix “-ed.” Decoding performance peaked for morphemes roughly 400 milliseconds before their utterance, followed by phonemes and then syllables.

Neural activity can predict the sequence of word construction, Williams says. “When you think about it, that is a very exciting thing to be able to do, for me to be able to predict what I’m going to say before I actually vocalize that.”

Kirill Nourski, associate professor of neurosurgery at the University of Iowa, who was not involved in either study, says he would have expected neurons to first process the most elemental unit of speech, the phoneme, before morphemes, which add a different meaning to a word.

A spatial distribution of the neurons characterized by the top decoding performance for the phonemes, syllables and morphemes would help clear this up, he says. The most posterior electrode location among the five participants nears the border between the prefrontal cortex and the premotor cortex, an area associated with higher-order planning. “It sort of reflects the complexity, but in the other direction—you need more time to think it through,” Nourski says.

I

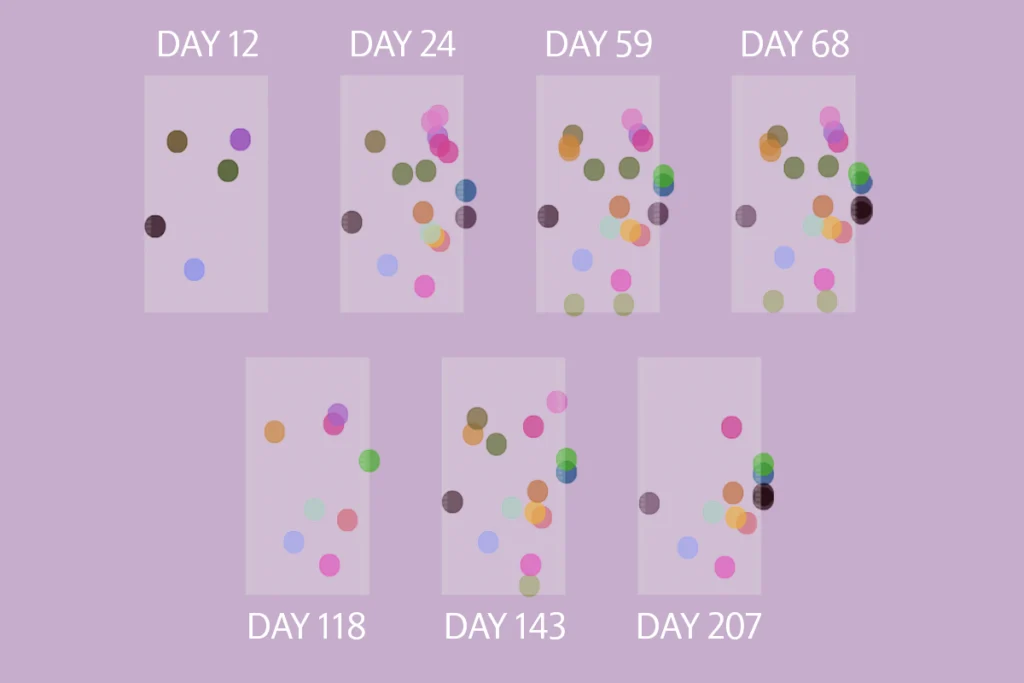

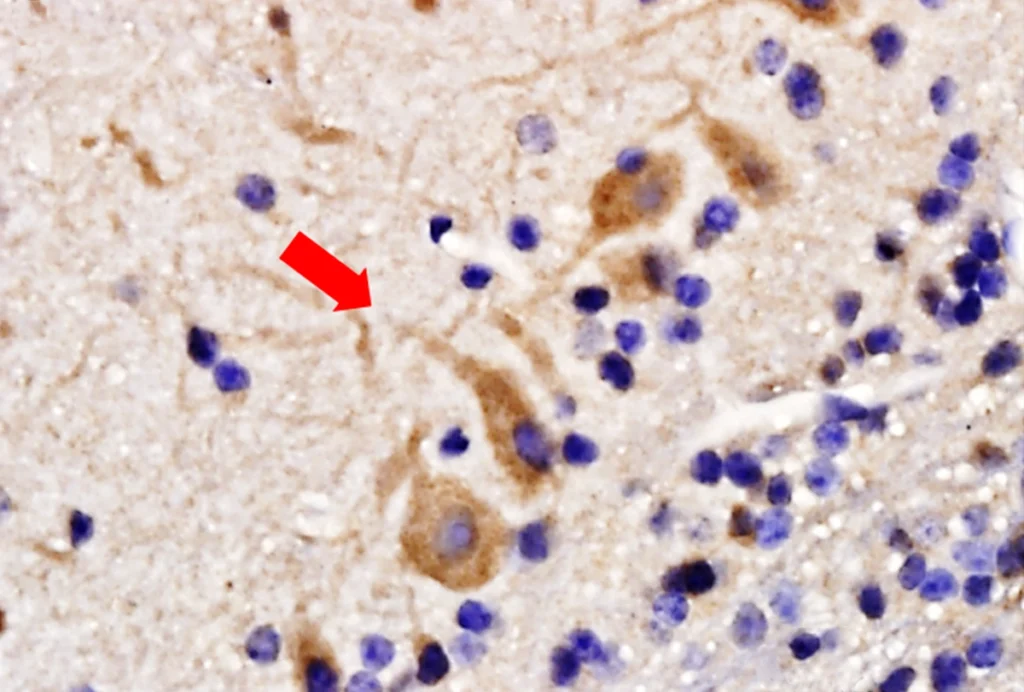

n the other new study, eight participants already undergoing neurosurgery listened to recordings of 110 unique sentences. Neuropixels probes recorded activity from 685 single neurons across the superior temporal gyrus.More than 60 percent of the neurons responded to features of speech. Some responded to the beginning of a sentence; some neurons tuned to fricative consonants such as “s,” others to plosive consonants such as “b.” Neurons at similar depths tended to prefer similar phoneme groups or speech cues, but these preferences changed across the depths.

Though most neurons at each Neuropixels location tuned to a dominant speech feature such as pitch or speech onset, roughly 25 to 50 percent of cells in each location encoded other speech features. “That is very different from a lot of the sort of canonical views of cortical columns in primary sensory areas,” says lead investigator Matthew Leonard, associate professor of neurological surgery at the University of California, San Francisco. It points to “a really strong role for local computation,” he adds. The study was published in Nature in December.

The 451st neuron to emerge from the data when the team sorted them according to spike patterns (a cell subsequently dubbed “Ray” after Ray Bradbury, the author of “Fahrenheit 451”) typified the heterogeneous response, Leonard says. Ray responded to many speech features but had a penchant for certain vowel sounds.

Activity from Ray and neighboring neurons located 4 to 6 millimeters below the brain’s surface correlated well with high-frequency electrocorticography (ECoG) recordings collected from electrodes placed directly on the brain’s surface just prior to inserting the Neuropixels probes, the results show.

Neurons in the upper layers of the cortex might be expected to contribute most to the ECoG recordings, says Nourski, who uses ECoG recordings to study audition. Yet, like a house with quiet neighbors upstairs and raucous partiers on the ground floor, “even if you’re standing on the roof, you’ll hear more from the party animals,” Nourski says. “[It’s] something that makes you think, well, what kind of noisy neighbors are those?”

Although single-neuron-level human brain studies have “an inherent cool factor,” the San Francisco team did well to verify the match between ECoG and Neuropixels data, Federmeier says. Research based on Neuropixels is limited by the rare availability of willing and qualifying neurosurgery participants.

“I cannot run out and do Neuropixels on my undergraduates,” she adds.

The Neuropixels experiments were costly and among the most technically challenging his lab has ever conducted, Leonard says, so he understands why some of his peers question whether human speech studies using Neuropixels are worth it. “For us, I will say it’s definitely worth it compared to trying to do this on a different scale.”

The extent to which principles illuminated at the single-neuron level explain language rules such as grammar and semantic processes remains an interesting and open question, says Elliot Murphy, a postdoctoral neurosurgery researcher at the University of Texas Health Science Center, who wasn’t involved in either study. “It would be really cool to try and adjudicate between different high-order theories based on information provided at the single-unit level,” he says.

Ultimately, both groups say the goal is to understand language at the level of individual neurons. Leonard says he plans to study the transformation from sounds to “our personal perception of it” within the cortical columns of the superior temporal gyrus. Williams says his future studies will explore comprehension of “complex strings of words that come together to build phrases and sentences.”