Two studies fail to replicate ‘holy grail’ DIANA fMRI method for detecting neural activity

The signal it flags is more likely the result of cherry-picking data, according to the researchers who conducted one of the new studies, but the lead investigator on the original work disputes that conclusion.

Two independent teams of researchers have failed to reproduce the findings from a 2022 study that claimed to directly measure neural activity using functional MRI (fMRI). The two replication studies were published today in Science Advances. The lead investigator of the original team disputes their conclusions.

If the technique—called “direct imaging of neuronal activity,” or DIANA—had worked, it “would have been a holy grail,” says Xin Yu, associate professor of radiology at Massachusetts General Hospital and Harvard Medical School, who was involved in one of the replications but not the 2022 study.

But the new studies may have ended that quest: “DIANA is, unfortunately, a bust,” says Martijn Cloos, associate professor at the University of Queensland, who wasn’t involved in either of the replications or the 2022 study.

Traditional fMRI detects changes in blood flow and oxygenation as a proxy for neural activity because it is too slow to capture the quick pace of action potentials. “We’re measuring vasculature, not neurons. And we would really love to measure neurons,” Cloos says.

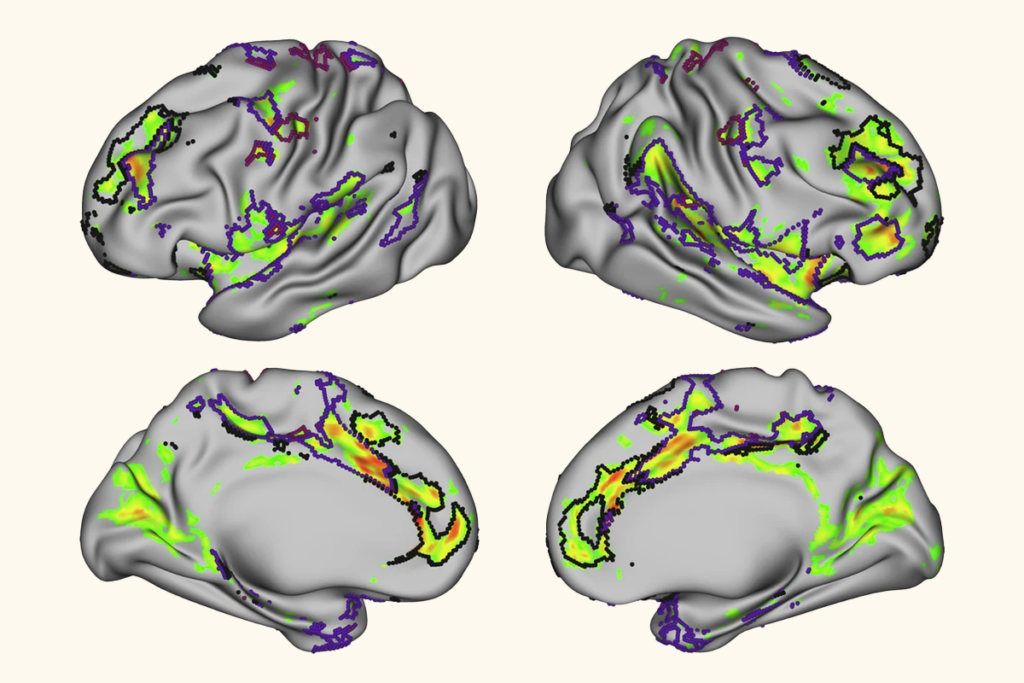

In the 2022 study, which was published in Science, researchers led by Jang-Yeon Park at Sungkyunkwan University collected fMRI data in a way that revealed signal changes on the scale of tens of milliseconds. They imaged the somatosensory cortex of anesthetized mice while stimulating their whisker pads with an electric shock.

Through this approach, Park’s team appeared to spot signs of neural activity—namely, a signal peak that emerged 25 milliseconds after each shock.

Scientists around the world rushed to test the technique using their own MRI machines, including Alan Jasanoff, professor of biological engineering at the Massachusetts Institute of Technology and the McGovern Institute for Brain Research. Jasanoff found an 18-millisecond signal, but through a series of additional control experiments concluded that it was an artifact of data-collection timing.

“The peak is real; there actually is a peak, but it doesn’t have anything to do with neural activity,” Jasanoff says. “So there’s no hope for DIANA as a method for measuring neural activity.”

The second study, led by another scientist at Sungkyunkwan University—Seong-Gi Kim, professor of biomedical engineering—could only find a DIANA signal when the researchers limited their analysis to data that resembled the signal they were looking for. Kim, Yu and their colleagues argue that the DIANA signal reported in 2022 is the result of cherry-picking trials from noisy data.

Park is in the process of preparing new papers and conference abstracts that respond to the criticisms, he says, including a new data analysis method that does not involve selecting which trials to average.

“I firmly believe, despite the controversy, the DIANA signal exists,” he says. “Further studies from other groups—including me and my collaborators—will clearly reveal the truth. I think it’s just a matter of time.” He understands that sometimes replications fail, he says, “but to me, it looks like they reached a hasty conclusion.”

Most fMRI studies gather data from an entire coronal section of the brain one voxel, or 3D unit of brain space, at a time, Kim says. The DIANA method is faster because it collects data voxel by voxel for just one part of the slice at a time. For example, in the 2022 study, the researchers stimulated the whiskers and collected data for one row of a brain slice, then stimulated the whiskers again and collected data for the next row, and so on. By contrast, the traditional approach would be to stimulate the whiskers once and collect data for the entire brain slice, Kim says.
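The row-by-row scheme Kim describes can be sketched in a few lines of Python. This is a minimal illustration, not the scanner sequence used in any of the studies: the four-row slice is hypothetical, the 40 time points assume a 200-millisecond window split into 5-millisecond chunks (the figures reported for the experiment), and the function names are invented for the example.

```python
N_ROWS = 4         # hypothetical tiny slice; real images have many more rows
N_TIMEPOINTS = 40  # assumes a 200 ms window read out in 5 ms chunks


def line_by_line_schedule(n_rows, n_timepoints):
    """List (stimulus_number, row, timepoint) in acquisition order.

    Each stimulus is followed by repeated readouts of a single row,
    so stimulus k only ever contributes data for row k.
    """
    return [(row, row, t) for row in range(n_rows) for t in range(n_timepoints)]


def assemble_frames(schedule, n_rows, n_timepoints):
    """Regroup the row-wise time series into one full-slice frame per time point.

    Each frame entry records which stimulus repetition supplied that row.
    """
    frames = [[None] * n_rows for _ in range(n_timepoints)]
    for stimulus, row, t in schedule:
        frames[t][row] = stimulus
    return frames


if __name__ == "__main__":
    schedule = line_by_line_schedule(N_ROWS, N_TIMEPOINTS)
    frames = assemble_frames(schedule, N_ROWS, N_TIMEPOINTS)
    print("stimuli needed for one movie of the slice:", N_ROWS)
    print("source stimulus for each row of frame 0:", frames[0])
```

In this sketch every reconstructed frame is stitched together from a different stimulus repetition per row, which is one way to see why the timing between stimulus and scanner matters so much for this kind of acquisition.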

The novelty of the DIANA method was not the image-collecting protocol or a new technology, but that the authors “decided to go really fast and look at the really short signals,” says Peter Bandettini, chief of the Section on Functional Imaging Methods at the National Institute of Mental Health, who collaborated on Kim’s replication study.

Kim followed the 2022 study’s protocol as closely as possible, he says, but used his university’s 15.2 tesla scanner instead of the 9.4 tesla scanner Park used. The additional resolution should have made the DIANA signal easier to detect, Kim says. He also swapped the anesthesia-as-needed protocol for a constant infusion method to minimize variations in anesthesia depth throughout the experiment, he says.

For the data analysis, he recruited the help of Bandettini and Ravi Menon, professor of medical biophysics, medical imaging, neuroscience and psychiatry at the University of Western Ontario. They could not find a DIANA signal, even when they averaged 1,050 trials together.

“We had a number of calls to discuss this, and I’m very convinced,” Menon says. “I mean, between the three of us, we have almost a century of fMRI experience.”

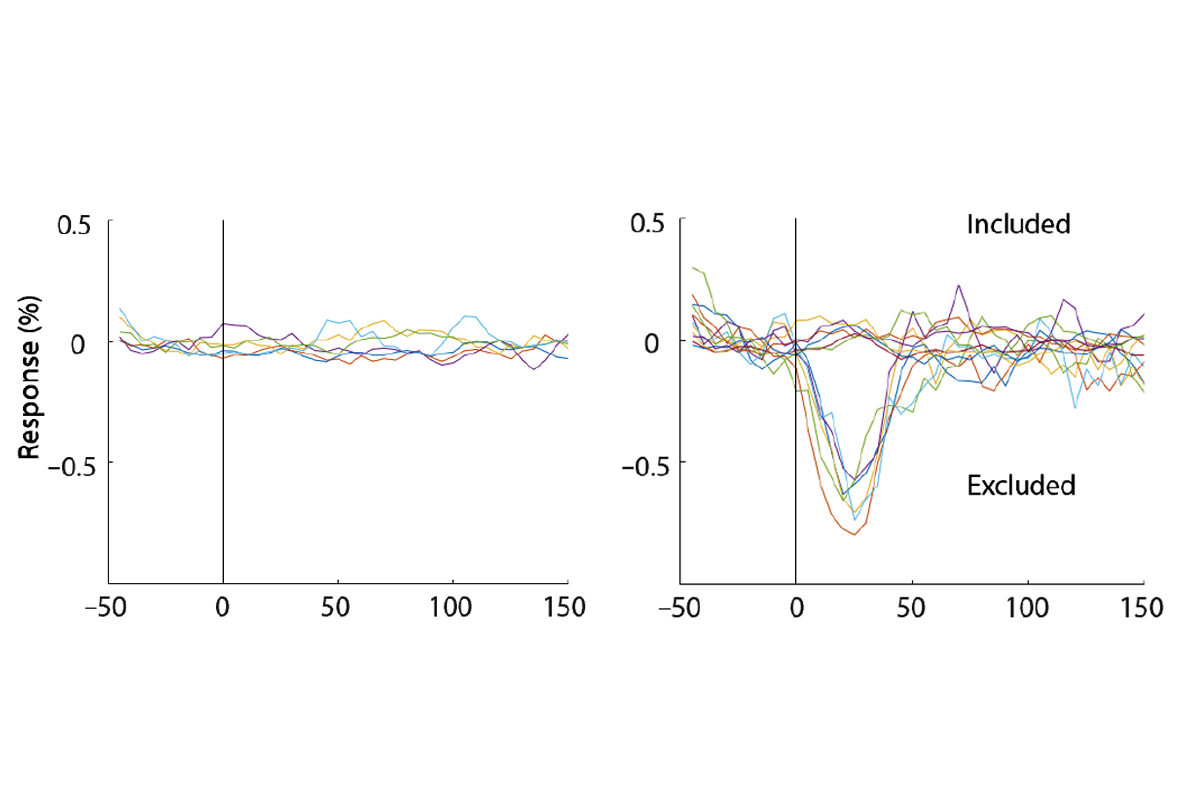

They did find a signal, however, when they excluded what they call in their study “presumably bad trials.” They sorted each trial based on how closely it showed peak activity 25 milliseconds after the electrical stimulation—the DIANA signal they were looking for—and a control signal of peak activity 35 milliseconds after stimulation. They could detect a significant signal for both conditions after removing 10 percent of trials.

“You can get any response that you want, just by averaging noise together,” Bandettini says.
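A small simulation shows how this kind of selection can manufacture a peak. The sketch below is an illustration under stated assumptions, not the analysis from either study: the trials are pure Gaussian noise, the scoring rule (`peak_score`) is a hypothetical stand-in for "how closely a trial resembles the sought signal," and bins 5 and 7 are assumed to correspond to 25 and 35 milliseconds when a 200-millisecond window is split into 5-millisecond chunks.

```python
import random
import statistics

N_TRIALS = 1050       # trial count reported for the replication's full average
N_BINS = 40           # assumes 200 ms of data in 5 ms chunks
DROP_FRACTION = 0.10  # share of trials removed, as described in the article


def make_noise_trials(n_trials, n_bins, seed):
    """Return trials of pure Gaussian noise: no stimulus response exists."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n_bins)] for _ in range(n_trials)]


def peak_score(trial, target_bin):
    """Hypothetical selection score: target bin minus the mean of all other bins."""
    others = [v for i, v in enumerate(trial) if i != target_bin]
    return trial[target_bin] - statistics.fmean(others)


def drop_lowest_scoring(trials, target_bin, fraction):
    """Discard the given fraction of trials that look least like the sought peak."""
    ranked = sorted(trials, key=lambda t: peak_score(t, target_bin), reverse=True)
    n_keep = len(ranked) - int(len(ranked) * fraction)
    return ranked[:n_keep]


def peak_contrast(trials, target_bin):
    """Peak height of the trial average: raw, and in units of baseline scatter."""
    average = [statistics.fmean(column) for column in zip(*trials)]
    baseline = [v for i, v in enumerate(average) if i != target_bin]
    raw = average[target_bin] - statistics.fmean(baseline)
    return raw, raw / statistics.stdev(baseline)


if __name__ == "__main__":
    trials = make_noise_trials(N_TRIALS, N_BINS, seed=0)
    for label, target_bin in (("25 ms", 5), ("35 ms", 7)):
        _, z_all = peak_contrast(trials, target_bin)
        kept = drop_lowest_scoring(trials, target_bin, DROP_FRACTION)
        _, z_kept = peak_contrast(kept, target_bin)
        print(f"{label}: all trials z={z_all:.2f}, after selection z={z_kept:.2f}")
```

Because the score used to discard trials is the same quantity later measured in the average, removing the lowest-scoring trials always raises the apparent peak, and it does so at whichever latency the selection targets.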

This is not a typical data-analysis approach, says Noam Shemesh, principal investigator at the Champalimaud Centre for the Unknown, who was not involved in the replications or the original study. “We don’t exclude data, almost ever. Apart from data that is obviously wrong,” he says, and the excluded trials must meet a set of objective criteria that are separate from the signal you are studying. “If the person sneezed and they moved, and the brain now looks like a garbage bag—that is a fair exclusion.” Another valid reason to exclude a trial is if electrophysiological data recorded at the same time indicate no neural activity occurred, Kim says, which the 2022 study did not do.

Park denies cherry-picking data but has adopted a new data analysis method to make that clearer, he says. In his first study, he collected data from 48 to 98 trials for each mouse and selected 40 to average. Now he acquires only 40 trials from each mouse and averages them all, he says.

“I have clear criteria now. We clearly see what people really want. So we tried our best to satisfy the audience,” he says. He is wrapping up two additional DIANA studies, one using an 11.7 tesla scanner in mice and another using a 3 tesla scanner in people, he says, and plans to submit the papers and post them on bioRxiv in the next month.

In the other replication study, Jasanoff and Valerie Doan Phi Van, a postdoctoral fellow in his lab, stimulated the hind limb of anesthetized rats and observed a signal peak in the somatosensory cortex 18 milliseconds later. But they observed the same peak when they carried out the experiment with the electrical stimulus cable turned off, which hinted that the peak was an artifact caused by the program that controls the scanner, Jasanoff says.

During each round of the experiment, the MRI scanner collects 200 milliseconds’ worth of data divided into 5-millisecond chunks. “In order to get any sort of coherent signal out of that, it’s critical that stimulation of the animal be precisely synchronized with the scanner,” Jasanoff says. Park’s team used an electrical pulse in the scanner to accomplish that, which inadvertently shifted data acquisition by 10 microseconds each round.

“Ten microseconds is a ridiculously small amount. Nevertheless, because of the physics of MRI, it kicks the whole system out of kilter a little bit and ends up leading to these peaks that look like they’re synchronized with the stimulus,” Jasanoff says, but they are actually synchronized with the electrical pulse. A synchronization protocol that accounted for this quirk did not produce a discernable DIANA signal, the study found.

Park says his team initially saw this artifact, too. “We didn’t figure out clearly where it came from, but we could suppress it,” he says. “I appreciate that he figured out where this artifact signal comes from.”

Together, the two new studies suggest “a combination of both ‘cherry picking’ and an unintended biased acquisition may have led to the wrong conclusions,” Shemesh says.

Cloos also failed to replicate the DIANA method in a 2023 study in humans, and he says that in a forthcoming study, he has not been able to find the signal in rats. He does not want to speculate on why Park’s group found a signal, he says, because the only way to know would be to reanalyze their original data.

Park and his team have not shared their unprocessed data, both Kim and Bandettini say. “They’ve refused to do that,” Bandettini adds.

Park denies that he has not shared his data and says that he has sent his data and MRI sequence to labs at 11 institutions that contacted him directly. He also says he plans to upload the raw data to his lab website in the next couple of months.

Cloos posted his human replication study as a preprint on arXiv in March 2023; Kim posted his team’s replication attempt and statistical argument as a preprint on bioRxiv in May; and Jasanoff submitted his study to Science in June, he says. In August, Science added an editorial expression of concern to the original DIANA paper. “The editors have been made aware that the methods described in the paper are inadequate to allow reproduction of the results and that the results may have been biased by subjective data selection,” the expression says. “We are alerting readers to these concerns while the authors provide additional methods and data to address the issues.”

The two replication studies published today are “not an official part of the editorial process involved in assessing and acting on any irregularities present in the original study,” a spokesperson from Science told The Transmitter in an email, but the ongoing investigation could take the replication results into consideration.

“The editors are still awaiting an update from the authors, regarding their work to provide additional methods and data. Once those steps are complete and the editors have more information, they will decide on next steps,” the spokesperson wrote.

Park says he hopes other labs keep trying to reproduce his study, and that he wants to see his results get replicated. He does not know why replications have not been successful yet, he says, but he recommends that other researchers try his forthcoming data analysis method and take short breaks during data collection so the DIANA signal does not get weaker over time.

“I’m still learning my data, without any reference. So I really want people to be a little bit more patient,” Park says. “I really want to work with people. I really want their help, their insight. They’ve been working on fMRI for a long time.”

Kim says the replication study is the end of his involvement in DIANA, and Menon and Bandettini are exploring other ways to use magnetic resonance to measure neural activity. “We would love DIANA to work. We weren’t trying to squash this because we were rivals,” Bandettini says. “This came naturally through trying to reproduce things.”

Shemesh and Yu say they think it’s still possible that DIANA could pan out with different technology or analysis methods. The failed replications are not a dead end for DIANA, Yu says, because it’s a natural part of the scientific process to take steps forward and then backward.

“This is healthy,” he says. “We need this thinking, rethinking. And eventually—that part I’m optimistic—we’ll find the paths. We’ll really be able to move the field forward.”
