Scientists understandably question the impact of their work at times. Even when we feel we are making the right choices day by day, we sometimes wonder, “Will anyone else care?” Our desire to do science that has a lasting influence is a natural one. Many of us were influenced by key papers in our field from decades ago — papers that we go back to time and again — and we would be happy if our work could fill that place for someone else. But given that even well-received studies can fall out of favor over time, how can we aim for our work to have an enduring impact?

When we wrestle with this question, it often feels like the grass is greener for scientists doing different kinds of work from our own. “If I were doing experimental work,” I tell myself as a computational neuroscientist, “then maybe I’d be more certain that I was contributing something of long-term value.” When I build a computational model, it is only as good as its underlying assumptions. All the work I do to understand and explore the model’s behavior turns to dust if the model is later judged a poor match to reality. Experiments, on the other hand, study reality directly. No matter what happens next, a properly performed experiment still adds a brick to the wall of facts. Surely then, experimental work should have, on average, a longer shelf life and more impact.

Yet experimentalists don’t seem more confident in the long-term durability of their work. As the replication crisis in psychology has shown, entire swaths of empirical research can be wiped out when faulty methods are uncovered and corrected. Even if certain methods aren’t wrong per se, they can often be replaced with newer, better ones. Not many scientists look to PET imaging papers now that functional MRI data exists, for example. Similarly, many studies done with coarser pharmacological methods for controlling neural activity were re-done with more precision after the introduction of optogenetics. With methods constantly evolving, maybe the impact of any average experimental finding doesn’t have such a long tail after all. Furthermore, the types of facts we’re most interested in change as theories do. Sound methodology doesn’t guarantee impact if it’s used to answer an outdated question.

What gives a piece of work broad and lasting significance? As a case study, I looked into some of the most cited papers published in the journal Nature Neuroscience in 1999. Nearly 25 years on, how do these papers hold up, and what made them so influential? I was able to find two experimental papers and two computational papers with similarly high citation counts to focus on. (Citation counts below come from Google Scholar.) Though this is by no means a rigorous meta-science exploration, it offers a nice jumping-off point to reflect on what gives a paper staying power.

Two of the papers, one could argue, represent truly novel results or ideas. The experimental paper “Running increases cell proliferation and neurogenesis in the adult mouse dentate gyrus” (4,908 citations) finds for the first time that running causes neurogenesis. The computational paper “Predictive coding in the visual cortex: A functional interpretation of some extra-classical receptive-field effects” (5,281 citations) introduces an entirely new model for interpreting well-established experimental results.

The other two papers could be viewed more as the culmination of previous work, exploring or extending an accepted approach or question. The experimental paper on this side, “Brain development during childhood and adolescence: A longitudinal MRI study” (6,889 citations), provides a complete view of how white and gray matter change during development. The computational paper “Hierarchical models of object recognition in cortex” (4,251 citations) puts forth an extension of existing visual models that can capture the full ventral stream.

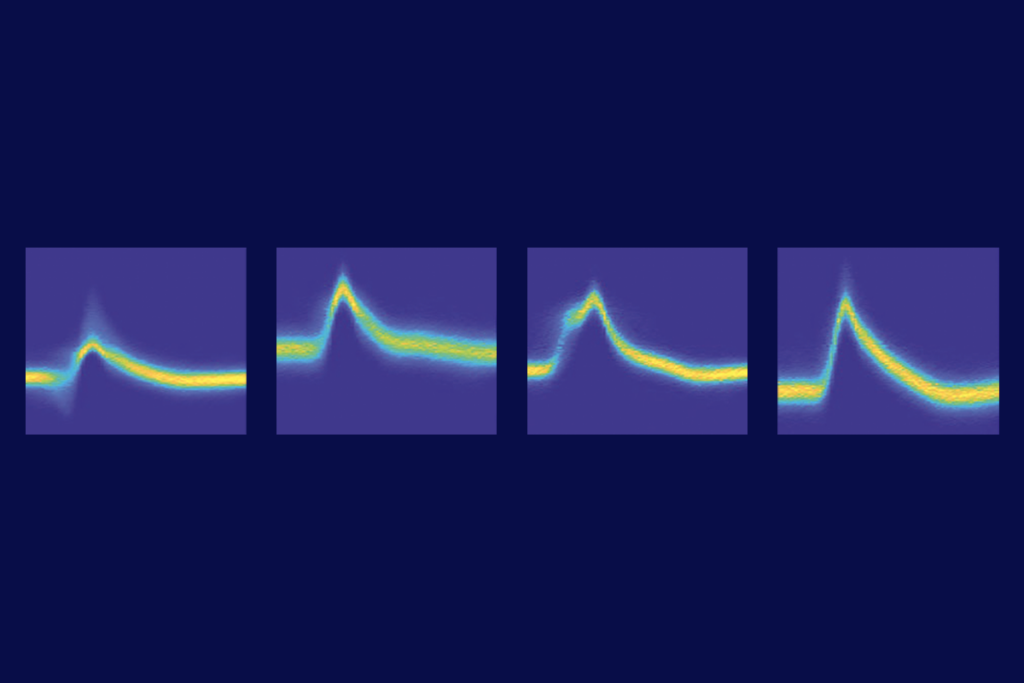

I first examined whether their impact was sustained over time. Some papers cause a stir when first released but fail to show enduring influence. Interestingly, three of the papers — both experimental papers and the object-recognition paper — showed a similar citation profile. Citations ramped up relatively quickly, peaked around 2015 and remain strong today. The predictive-coding paper was different: It started with relatively few citations per year, began to ramp up around 2014 and is only now peaking. All four papers continue to be highly cited, so their impact endures.

Looking at where the citations come from, however, reveals different patterns for the novel versus the culminatory work. For the two culminatory papers, the types of journals that cited them remained relatively constant over time. Experimental journals continually cited the MRI paper, and computational journals continually cited the object-recognition paper. The novel papers, in contrast, showed a shift. The predictive-coding paper’s citations initially appeared in computational journals, then expanded to include many experimental journals. The neurogenesis work is consistently cited in experimental journals, but the subject of these journals has shifted from high-level neuroscience to molecular and cellular neuroscience.

Based on these patterns, it seems the novel works have impact by snaking their way through multiple fields, where researchers explore their implications in different ways. The culminatory works, on the other hand, thrive in their home field, remaining a point of reference and an agreed-upon foundation for years to come.

Reflecting on the potential impact of my own work, I find that this observation offers some criteria to consider. If I am proposing something new, will it change the questions that scientists explore in the future? Will it have the potential to open new doors in many arenas? If I am doing more culminatory work, will it provide a sturdy anchor for the field, clarifying existing results and filling in gaps? Will it be robust and insightful enough to remain a building block?

Unfortunately for self-doubting scientists, the path to greatest impact is likely unpredictable. But not every paper needs to be a home run; runs batted in still up the score. Even if a particular project doesn’t become a lasting touchstone, it can still be part of a chain of research that collectively has significant influence.

And here, there may indeed be a difference in what we should expect from computational and experimental work. If the goal is to provide the input for the next step in research, experimental work should offer useful constraints and goals for model building. Models should provide pointers to what experimental data would best further our understanding.