Evan Eichler

Professor of Genome Sciences

University of Washington

From this contributor

Autism and the complete human genome: Q&A with Evan Eichler

Scientists have at last filled in the missing gaps — an advance likely to inform every aspect of autism genetics research, Eichler says.

Remembering Steve Warren (1953-2021): A giant in the field of genetics

Steve Warren co-discovered the genetic mechanism that underpins fragile X syndrome and was a generous, inspiring mentor to many.

Questions for Evan Eichler: An evolving theory of autism

A gene that raises the risk of autism in some people may also give humans an evolutionary boost.

Explore more from The Transmitter

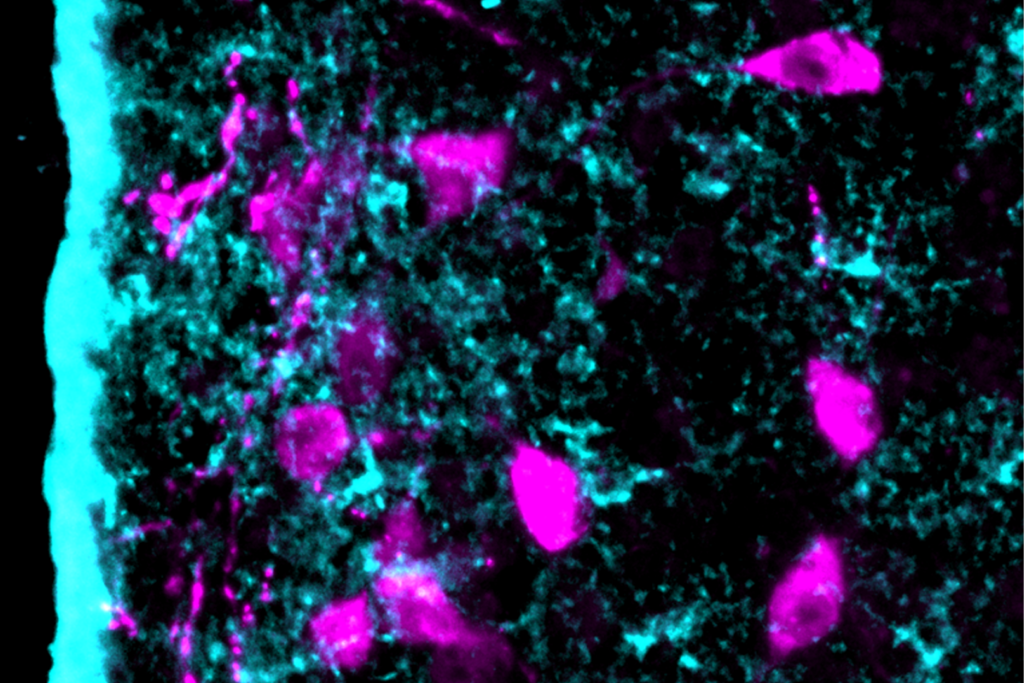

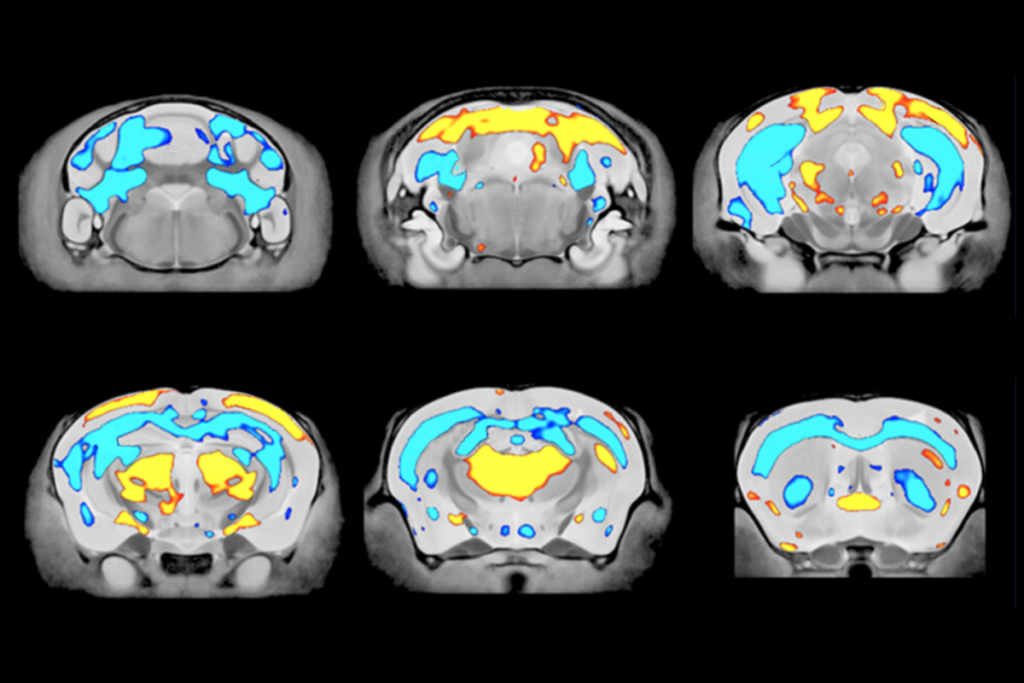

Astrocytes orchestrate oxytocin’s social effects in mice

The cells amplify oxytocin—and may be responsible for sex differences in social behavior, two preprints find.

Neuro’s ark: Spying on the secret sensory world of ticks

Carola Städele, a self-proclaimed “tick magnet,” studies the arachnids’ sensory neurobiology—in other words, how these tiny parasites zero in on their next meal.

Autism in old age, and more

Here is a roundup of autism-related news and research spotted around the web for the week of 2 March.
