Ingfei Chen is a writer and editor in Northern California who likes telling stories about medicine, science and the environment. Her articles have been published in The New York Times, Science, KQED Mindshift, Scientific American and Smithsonian, among others.

Ingfei Chen

Freelance writer

From this contributor

What baby siblings can teach us about autism

Studies of infants at risk for autism have not yielded a test to predict who will eventually be diagnosed. But they have transformed our understanding of the condition.

The gene hunters

Criss-crossing the globe on a quest for unusual DNA, researchers have discovered a rare mutation that promises insights into both epilepsy and autism — and points to a treatment.

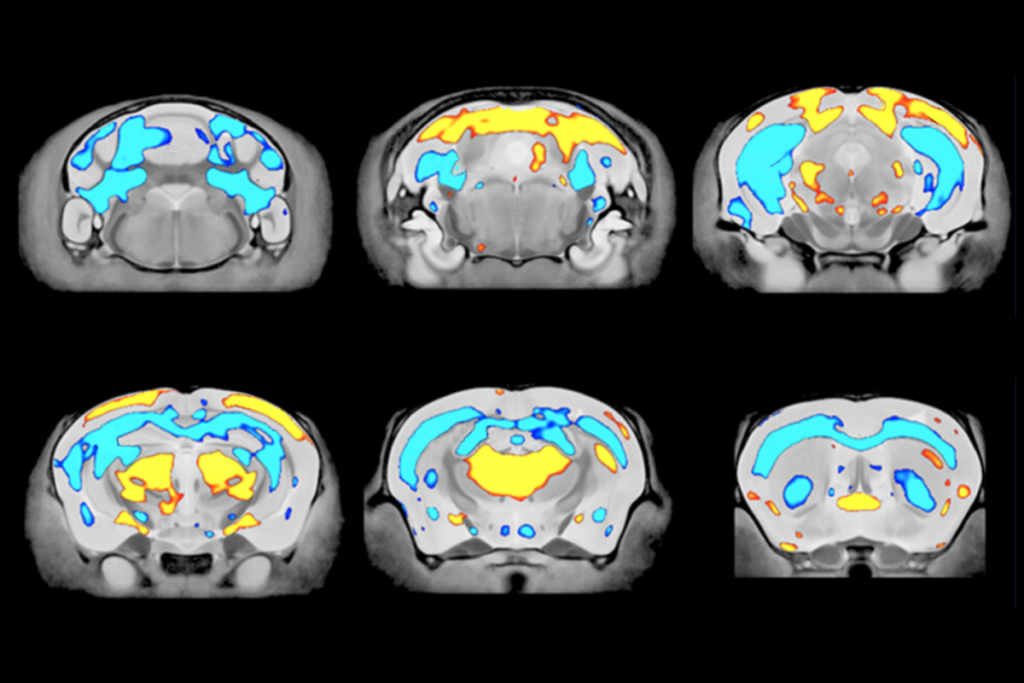

Wide awake: Why children with autism struggle with sleep

Half of children who have autism have trouble falling or staying asleep, which may make their symptoms worse. Scientists are just beginning to explore what goes wrong in the midnight hour.

Explore more from The Transmitter

Neuro’s ark: Spying on the secret sensory world of ticks

Carola Städele, a self-proclaimed “tick magnet,” studies the arachnids’ sensory neurobiology—in other words, how these tiny parasites zero in on their next meal.

Autism in old age, and more

Here is a roundup of autism-related news and research spotted around the web for the week of 2 March.

Lack of reviewers threatens robustness of neuroscience literature

Simple math suggests that small groups of scientists can significantly bias peer review.
