Jeremy Hsu is a science and technology journalist who writes for publications such as Scientific American, Discover, Wired, IEEE Spectrum and Undark. His recent focus has been on how artificial intelligence techniques such as deep learning could impact society.

Jeremy Hsu

From this contributor

How scientists secure the data driving autism research

Protecting the privacy of autistic people and their families faces new challenges in the era of big data.

Can a computer diagnose autism? (French-language edition)

Machine learning offers a way to help clinicians spot autism earlier, but technical and ethical obstacles remain.

Why are there so few autism specialists?

A lack of interest, training and pay may limit the supply of specialists best equipped to diagnose and treat children with autism.

Can a computer diagnose autism?

Machine learning holds promise for helping clinicians spot autism sooner, but technical and ethical obstacles remain.

Explore more from The Transmitter

This paper changed my life: Ishmail Abdus-Saboor on balancing the study of pain and pleasure

A 2013 Nature paper from David Anderson’s lab revealed a group of sensory neurons involved in pleasurable touch and led Abdus-Saboor down a new research path.

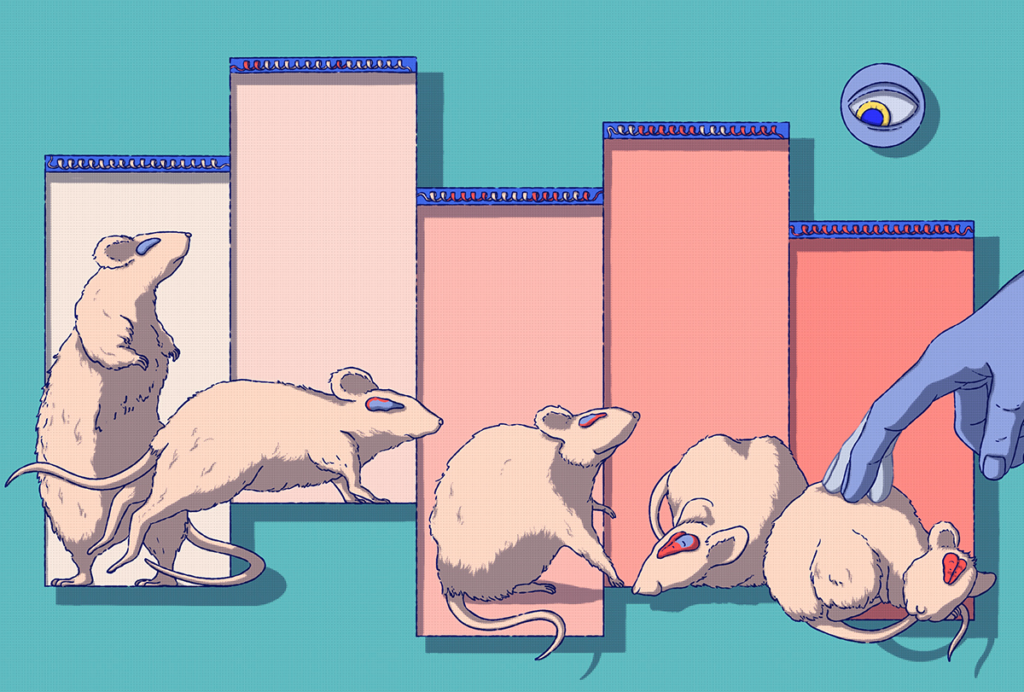

Sex bias in autism drops as age at diagnosis rises

The disparity begins to level out after age 10, raising questions about why so many autistic girls go undiagnosed earlier in childhood.

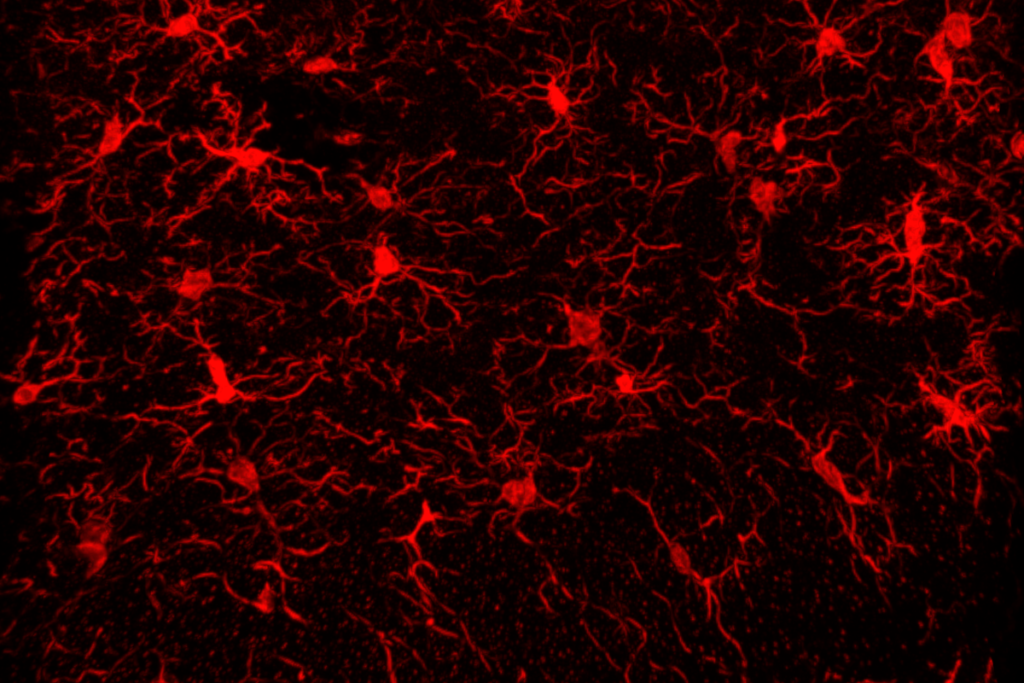

Microglia implicated in infantile amnesia

The glial cells could explain the link between maternal immune activation and autism-like behaviors in mice.