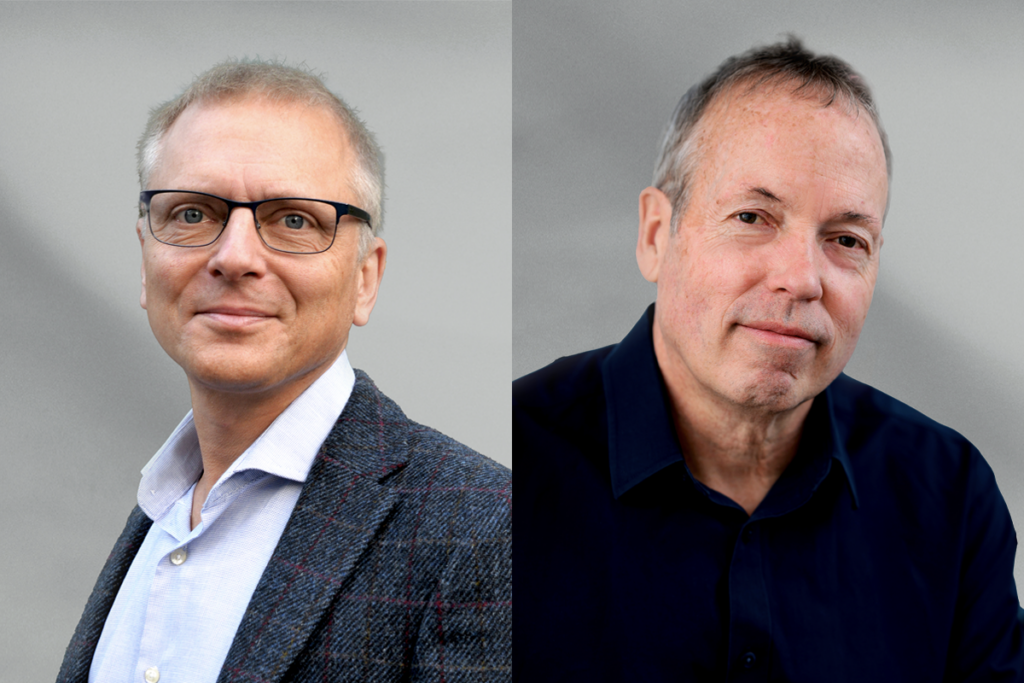

Terrence Sejnowski holds the Francis Crick Chair at the Salk Institute for Biological Studies. He is also professor of biology at the University of California, San Diego, where he co-directs the Institute for Neural Computation and the NSF Temporal Dynamics of Learning Center. He is president of the Neural Information Processing Systems Foundation, which organizes an annual conference attended by more than 1,000 researchers in machine learning and neural computation. He is also the founding editor-in-chief of Neural Computation, published by the MIT Press.

A pioneer in computational neuroscience, Sejnowski seeks to understand the principles that link brain to behavior. His laboratory uses both experimental and modeling techniques to study the biophysical properties of synapses and neurons and the population dynamics of large networks of neurons.

He received his Ph.D. in physics from Princeton University and was a postdoctoral fellow at Harvard Medical School. He served on the faculty of the Johns Hopkins University before moving to the University of California, San Diego. He has published more than 300 scientific papers and 12 books, including “The Computational Brain,” with Patricia Churchland.