Large language models

Recent articles

How artificial agents can help us understand social recognition

Neuroscience is chasing the complexity of social behavior, yet we have not answered the simplest question in the chain: How does a brain know “who is who”? Emerging multi-agent artificial intelligence may help accelerate our understanding of this fundamental computation.

The BabyLM Challenge: In search of more efficient learning algorithms, researchers look to infants

A competition that trains language models on relatively small datasets of words, closer in size to what a child hears up to age 13, seeks solutions to some of the major challenges of today’s large language models.

‘Digital humans’ in a virtual world

By combining large language models with modular cognitive control architecture, Robert Yang and his collaborators have built agents that are capable of grounded reasoning at a linguistic level. Striking collective behaviors have emerged.

Are brains and AI converging?—an excerpt from ‘ChatGPT and the Future of AI: The Deep Language Revolution’

In his new book, to be published next week, computational neuroscience pioneer Terrence Sejnowski tackles debates about AI’s capacity to mirror cognitive processes.

Explore more from The Transmitter

Frameshift: Raphe Bernier followed his heart out of academia, then made his way back again

After a clinical research career, an interlude at Apple and four months in early retirement, Raphe Bernier found joy in teaching.

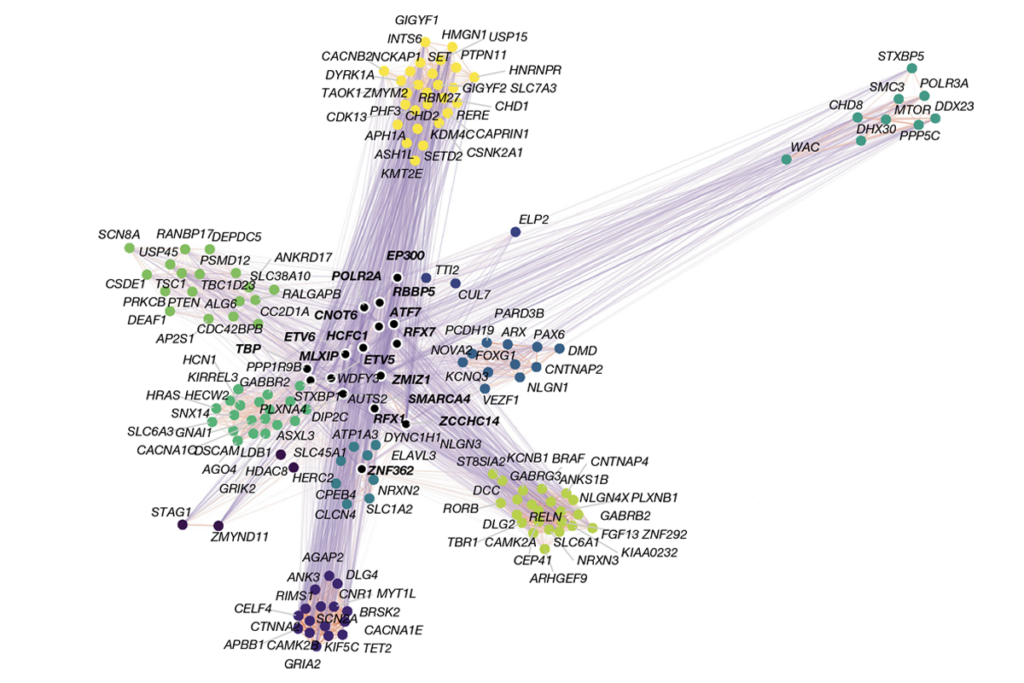

Organoid study reveals shared brain pathways across autism-linked variants

The genetic variants initially affect brain development in unique ways, but over time they converge on common molecular pathways.

Single gene sways caregiving circuits, behavior in male mice

Brain levels of the agouti gene determine whether African striped mice are doting fathers—or infanticidal ones.
