This paper changed my life: Dan Goodman on a paper that reignited the field of spiking neural networks

The 2019 paper co-authored by Friedemann Zenke, and its accompanying coding tutorial SpyTorch, made it possible to apply modern machine learning to spiking neural networks. The innovation reinvigorated the field.

Answers have been edited for length and clarity.

What paper changed your life?

Surrogate gradient learning in spiking neural networks. Neftci EO, Mostafa H, Zenke F. IEEE Signal Processing Magazine (2019) and the SpyTorch Tutorial

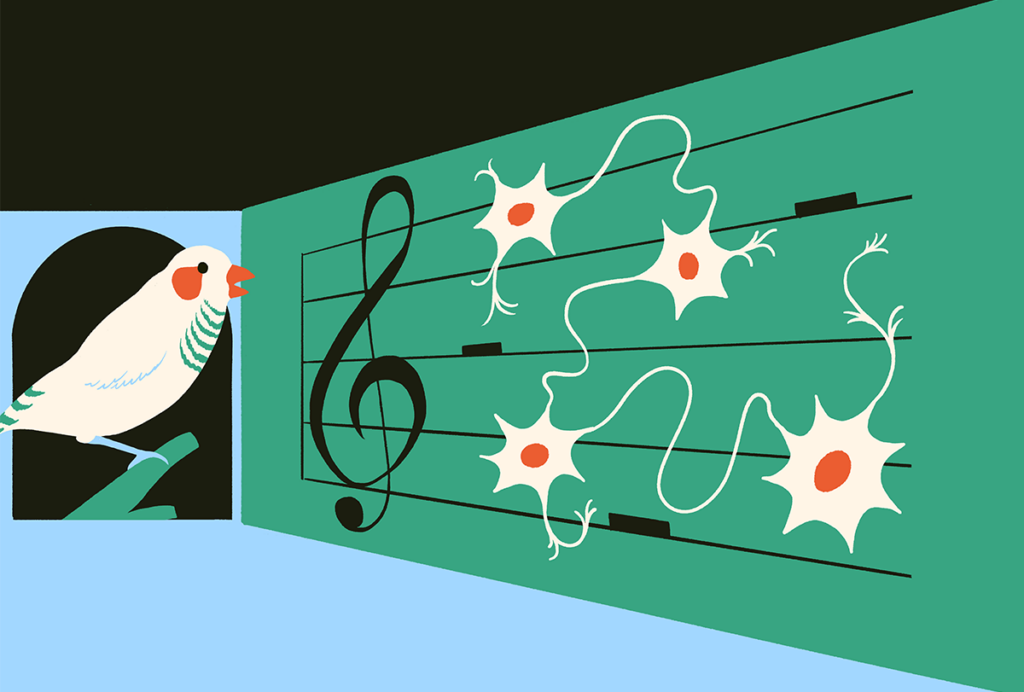

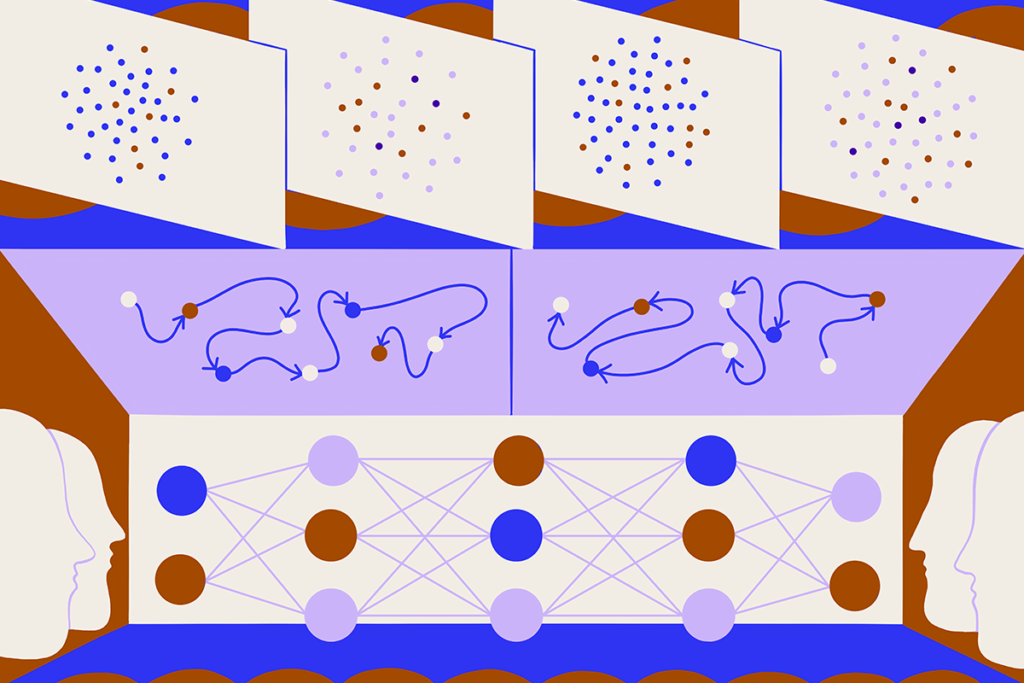

This paper shows how to use modern machine learning with spiking neural networks: networks of model neurons that, like real neurons, communicate via discrete action potentials, or spikes. When it came out, spiking neural networks were going out of fashion because they were incompatible with machine learning, unlike artificial neural networks, which are easier to work with computationally but do not spike like real neurons. The issue was that a spike is a discrete event, so the function that generates it cannot be usefully differentiated, and differentiation is an essential step in gradient descent, a key algorithm used in machine learning. This paper introduced a clever trick, which the authors called a “surrogate gradient.” This method bypasses the differentiability problem and lets us use the full power of modern machine learning on spiking neural networks. The advance over similar previous work was that this one just works out of the box. Also, the authors provided incredibly simple example code (available via the SpyTorch tutorial) that you could install in 10 minutes and adapt to explore other research questions in a couple of hours. This paper reignited the field of spiking neural networks, which is making faster progress now than it did in the decades before.
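To make the trick concrete, here is a minimal sketch in plain Python. It is not the SpyTorch code, and every name and value in it (`spike`, `surrogate_grad`, `train_single_weight`, the `scale` of 10, the learning rate) is an assumption chosen for illustration. A single model neuron spikes when its input, multiplied by one weight, exceeds a threshold. The forward pass uses the real, non-differentiable step function; the backward pass swaps in the smooth derivative of a fast sigmoid, which is one common choice of surrogate.

```python
def spike(v):
    """Forward pass: a hard threshold. Emit a spike (1.0) only if v is above 0."""
    return 1.0 if v > 0.0 else 0.0


def surrogate_grad(v, scale=10.0):
    """Backward-pass stand-in for the derivative of spike().

    The true derivative is 0 everywhere except at v == 0, where it is undefined.
    Here we use the derivative of a fast sigmoid, 1 / (scale * |v| + 1)^2,
    which is largest near the threshold. `scale` is an illustrative value.
    """
    return 1.0 / (scale * abs(v) + 1.0) ** 2


def train_single_weight(w=0.5, x=1.0, threshold=1.0, target=1.0, lr=1.0, steps=50):
    """Gradient descent on one weight so that the neuron learns to spike."""
    history = []
    for _ in range(steps):
        v = w * x - threshold          # membrane potential relative to threshold
        s = spike(v)                   # real spike in the forward pass
        history.append((w, s))
        error = s - target             # dL/ds for the loss L = 0.5 * (s - target)^2
        # Chain rule, with the surrogate replacing the true ds/dv (which is 0 here
        # and would leave the weight stuck at its starting value).
        grad_w = error * surrogate_grad(v) * x
        w -= lr * grad_w
    return w, history


if __name__ == "__main__":
    final_w, history = train_single_weight()
    first_spike_step = next(i for i, (_, s) in enumerate(history) if s == 1.0)
    print("final weight:", final_w)
    print("first spike at step:", first_spike_step)
```

With the true derivative the weight would never move, because the gradient is zero wherever the neuron is silent. With the surrogate, the weight creeps toward the threshold, the neuron starts to spike, and the error (and so the update) drops to zero. Tools like SpyTorch apply the same idea inside an automatic differentiation framework, across whole networks and over time.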

When did you first encounter this paper?

I tried to work out exactly when I read it first, but thanks to Outlook’s abysmal search function, I couldn’t nail down the exact date. I know that I met the author Friedemann Zenke and talked about it in November 2017, but SpyTorch wasn’t released until January 2019, and I think I read it for the first time between June and September of that year.

Why is this paper meaningful to you?

I started my career in neuroscience working on spiking neural networks. I wrote the “Brian” spiking neural network simulator software package and then did spiking models of sound localization. Over time, I drifted away from spikes, especially as the power of machine learning became evident. SpyTorch lets me have my cake and eat it too, combining the two major strands of my research: spiking neural networks and machine learning. It is one of the main tools I’ve used since it came out (although there are more advanced software packages available now).

How did this research change how you think about neuroscience or challenge your previous assumptions?

I had assumed that to make progress in understanding how spiking neural networks function, we would need to understand neural plasticity first. With this paper, I felt for the first time that I could approach the problem of neural computation with spikes by simply training spiking networks on cognitively interesting tasks, such as recognizing visual gestures and spoken words or making multimodal decisions in a noisy environment, and then looking at the sorts of solutions they found. Of course, we still need to do a bit of thinking, too, but it’s a lot easier than before.

How did this research influence your career path?

I had been drifting away from spiking neural networks because I didn’t have the right tools, and this brought me back to them. I think it’s fair to say that the day I went through the SpyTorch tutorial completely changed the direction of my career path, and I don’t think I’m the only one. For me, this is a model of high-impact science, and I find it a bit sad that contributions like a tutorial aren’t formally recognized by academia in things like job applications, promotions, etc. On a more personal level, now that this paper has helped to make spiking neurons cool again, and many other people are using these tools, I’m getting asked to give talks much more often. So that’s nice.

Is there an underappreciated aspect of this paper you think other neuroscientists should know about?

I think a lot of people in neuroscience haven’t realized just how easy it is now to do this sort of research, and the potential it has to answer age-old questions about relating neural mechanisms to their computational role or function. The first paper we wrote using this tool required us to change two lines of code in the SpyTorch tutorial, and it let us give an answer to the question of why neurons are so heterogeneous. OK, I’m slightly exaggerating. In the end, we—of course—wrote a lot more code than that, and it’s unlikely that what we found are the only reasons for neural heterogeneity. The point is that these tools are so good that this type of research is incredibly accessible now, and more people should be doing it.
