Anna Devor is professor of biomedical engineering at Boston University (BU), associate director of the BU Neurophotonics Center, and editor-in-chief of the journal Neurophotonics, published by the optical engineering society SPIE.

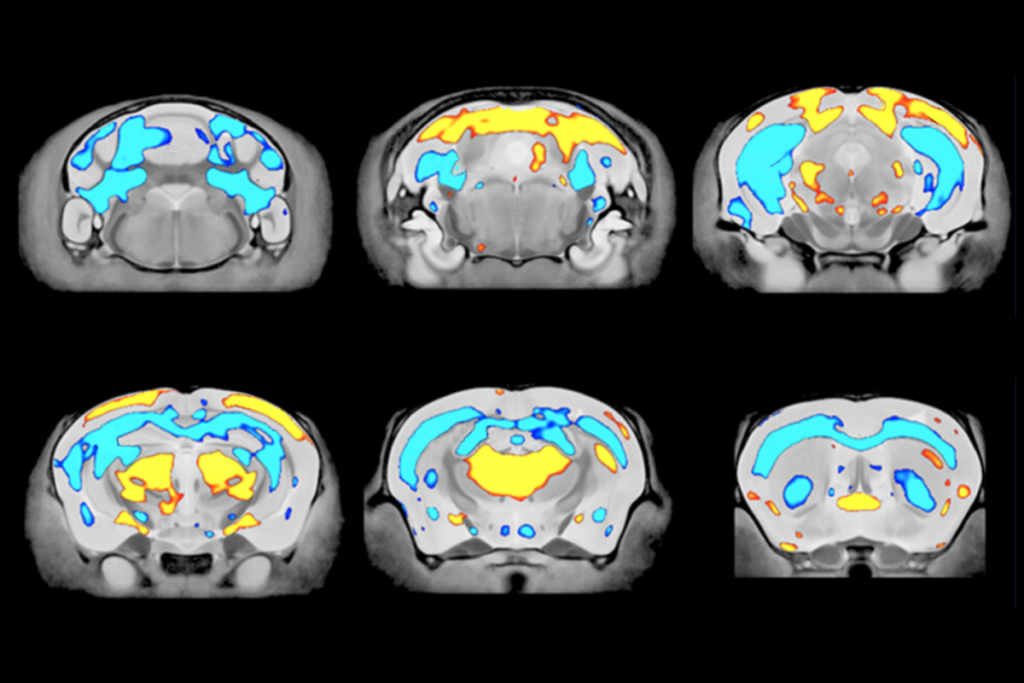

Devor’s lab, the Neurovascular Imaging Laboratory, specializes in imaging neuronal, glial, vascular and metabolic activity in the brains of living and behaving experimental animals. Her research focuses on understanding the fundamental neurovascular and neurometabolic principles of brain activity and the mechanistic underpinnings of noninvasive brain imaging signals. She also works on imaging of stem-cell-derived human neuronal networks.

Devor received her Ph.D. in neuroscience from the Hebrew University of Jerusalem. After completing her postdoctoral training in neuroimaging at the Athinoula A. Martinos Center for Biomedical Imaging, she established her own lab at the University of California, San Diego before moving it to BU in 2020. She has a wide network of collaborators across the world and is experienced in leading large, multidisciplinary teams.