Matthew Judson is a research associate in the UNC Neuroscience Center at the University of North Carolina at Chapel Hill.

Matthew Judson

Research associate

University of North Carolina at Chapel Hill

From this contributor

Angelman syndrome: Bellwether for genetic therapy in autism

It is not a matter of whether there will be clinical trials of genetic therapy for Angelman syndrome, but when.

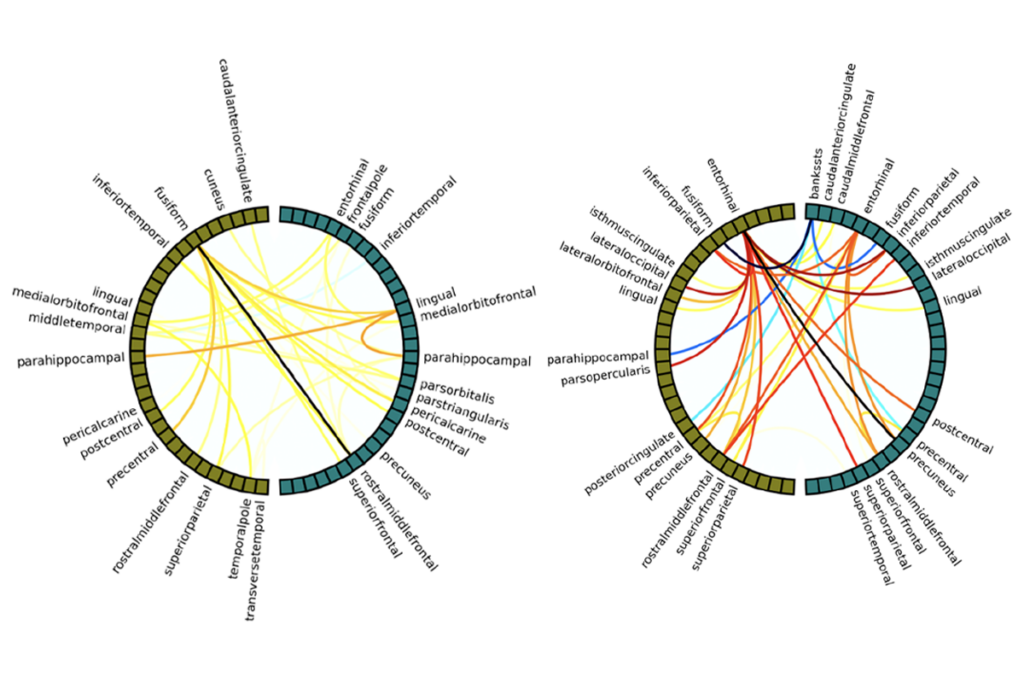

Insights for autism from Angelman syndrome

Deletions of the UBE3A gene lead to Angelman syndrome, and duplications of it lead to some cases of autism. Studying the effects of altered gene dosage in this region will provide insights into brain defects and suggest therapeutic targets for both disorders, says expert Benjamin Philpot.

Explore more from The Transmitter

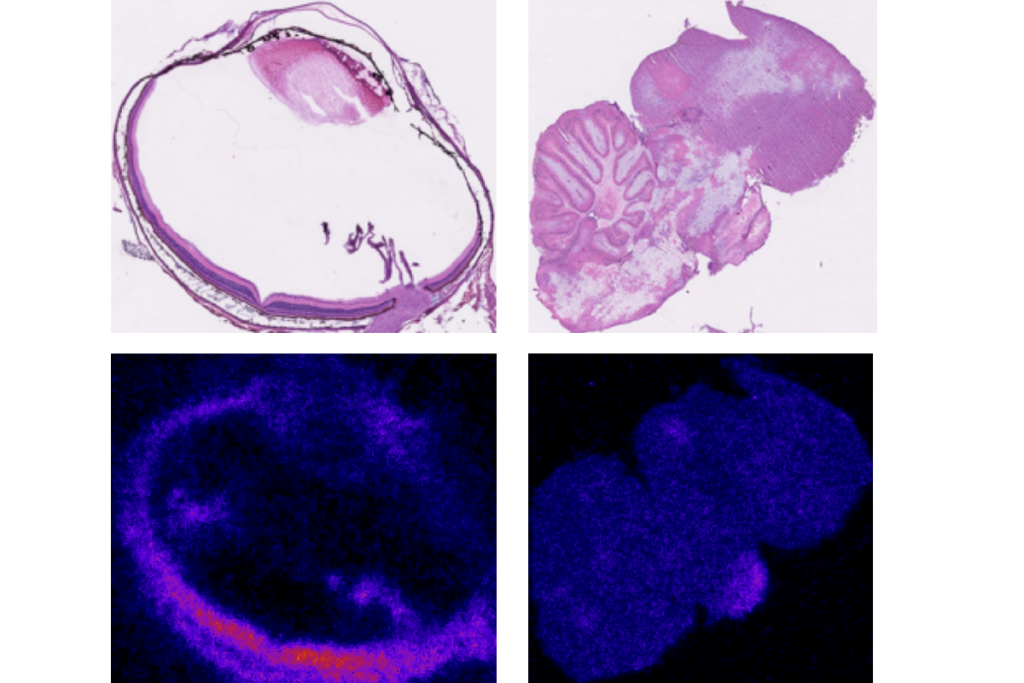

Inner retina of birds powers sight sans oxygen

The energy-intensive neural tissue relies instead on anaerobic glucose metabolism provided by the pecten oculi, a structure unique to the avian eye.

Neuroscience needs single-synapse studies

Studying individual synapses has the potential to help neuroscientists develop new theories, better understand brain disorders and reevaluate 70 years of work on synaptic transmission and plasticity.

New insights on sex bias in autism, and more

Here is a roundup of autism-related news and research spotted around the web for the week of 16 February.