Mike Hawrylycz joined the Allen Institute for Brain Science in Seattle, Washington, in 2003 as director of informatics and was one of the institute’s first staff members. His group develops algorithms and computational approaches for building multimodal brain atlases and for data analysis and annotation. Hawrylycz has worked across a variety of applied mathematics and computer science areas, addressing challenges in consumer and investment finance, electrical engineering and image processing, and computational biology and genomics. He received his Ph.D. in applied mathematics from the Massachusetts Institute of Technology and was subsequently a postdoctoral researcher at the Center for Nonlinear Studies at Los Alamos National Laboratory in New Mexico.

Michael Hawrylycz

Investigator

Allen Institute for Brain Science

From this contributor

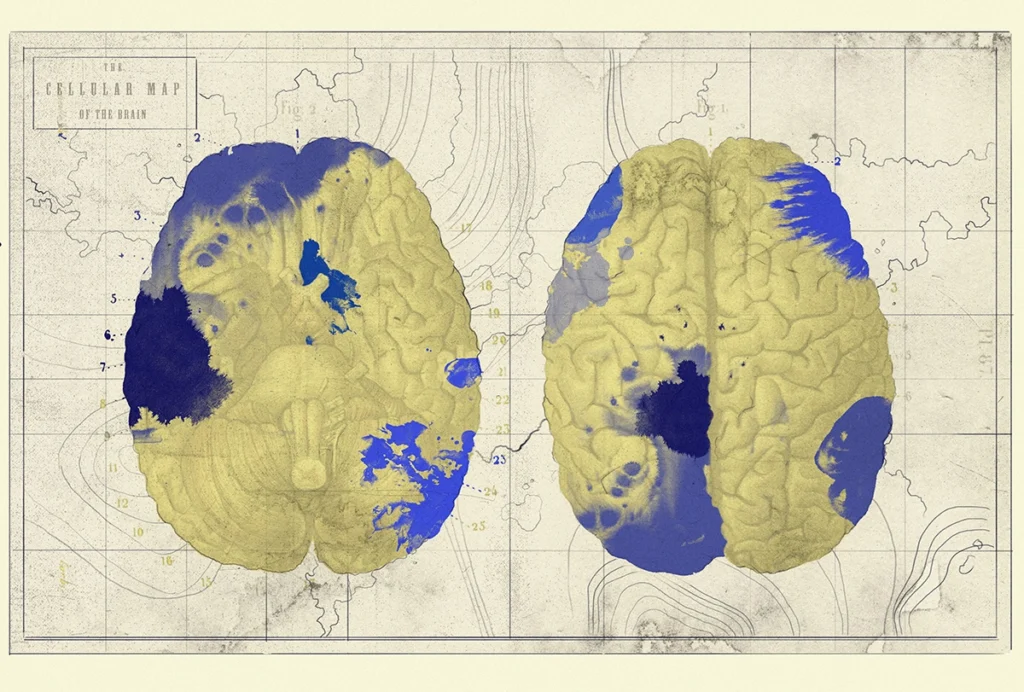

Knowledge graphs can help make sense of the flood of cell-type data

These tools, widely used in the technology industry, could provide a foundation for the study of brain circuits.

