Nico Dosenbach is associate professor of neurology at Washington University School of Medicine. His research as a systems neuroscientist is focused on pushing resting-state functional connectivity MRI (RSFC), functional MRI (fMRI) and diffusion tensor imaging (DTI) to the level of individual patients. To create and annotate the connectomes of individuals, he is working to improve the signal-to-noise ratio, spatial resolution and replicability of RSFC, DTI and fMRI data.

Nico Dosenbach

Associate professor of neurology

Washington University School of Medicine

From this contributor

Breaking down the winner’s curse: Lessons from brain-wide association studies

We found an issue with a specific type of brain imaging study and tried to share it with the field. Then the backlash began.

Explore more from The Transmitter

Let’s teach neuroscientists how to be thoughtful and fair reviewers

Blanco-Suárez revamped the traditional journal club by developing a course in which students peer review preprints alongside the published papers that evolved from them.

New autism committee positions itself as science-backed alternative to government group

The Independent Autism Coordinating Committee plans to meet at the same time as the U.S. federal Interagency Autism Coordinating Committee later this month—and offer its own research agenda.

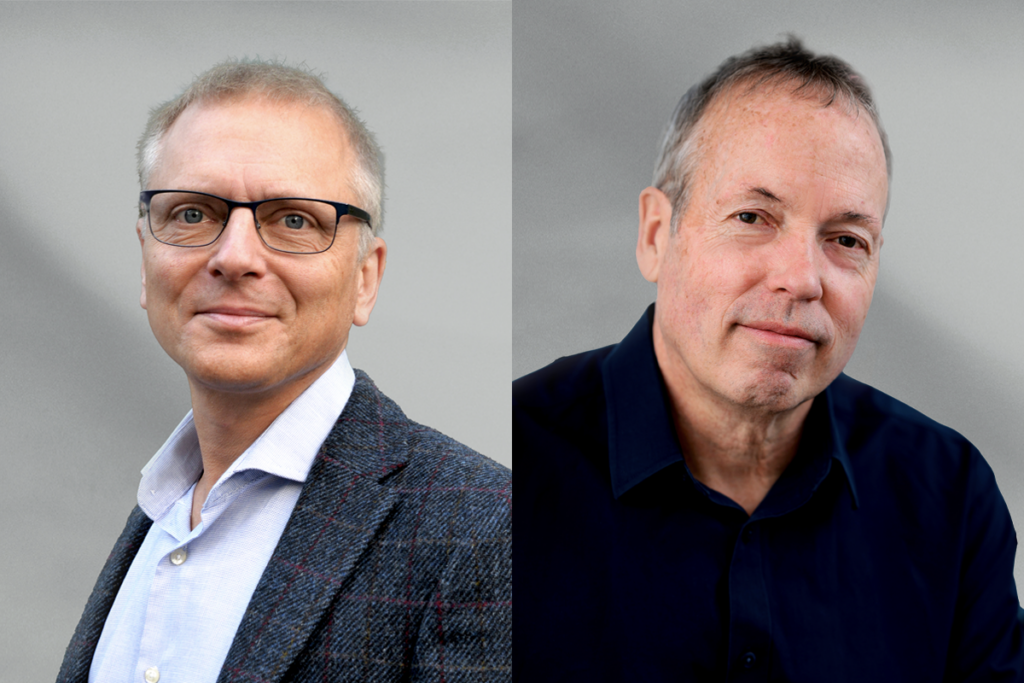

Two neurobiologists win 2026 Brain Prize for discovering mechanics of touch

Research by Patrik Ernfors and David Ginty has delineated the diverse cell types of the somatosensory system and revealed how they detect and discriminate among different types of tactile information.
