Xujun Duan is a professor of Biomedical Engineering at the University of Electronic Science and Technology of China in Chengdu, China. She received her PhD in Biomedical Engineering from the University of Electronic Science and Technology of China and completed joint PhD training at Stanford University under the supervision of Dr. Vinod Menon. Her long-term research goal is to understand how brain anatomy, function and connectivity are altered in autism spectrum disorder (ASD), and how they vary across the population, using multimodal brain imaging techniques and computational methods.

Xujun Duan

Professor of biomedical engineering

University of Electronic Science and Technology of China

From this contributor

Magnetic stimulation for autism: Q&A with Xujun Duan

A new individualized approach to transcranial magnetic stimulation may one day be an effective treatment for social and communication difficulties, if the results from Duan’s small preliminary trial pan out.

Explore more from The Transmitter

This paper changed my life: Ishmail Abdus-Saboor on balancing the study of pain and pleasure

A 2013 Nature paper from David Anderson’s lab revealed a group of sensory neurons involved in pleasurable touch and led Abdus-Saboor down a new research path.

Sex bias in autism drops as age at diagnosis rises

The disparity begins to level out after age 10, raising questions about why so many autistic girls go undiagnosed earlier in childhood.

Sex bias in autism drops as age at diagnosis rises

The disparity begins to level out after age 10, raising questions about why so many autistic girls go undiagnosed earlier in childhood.

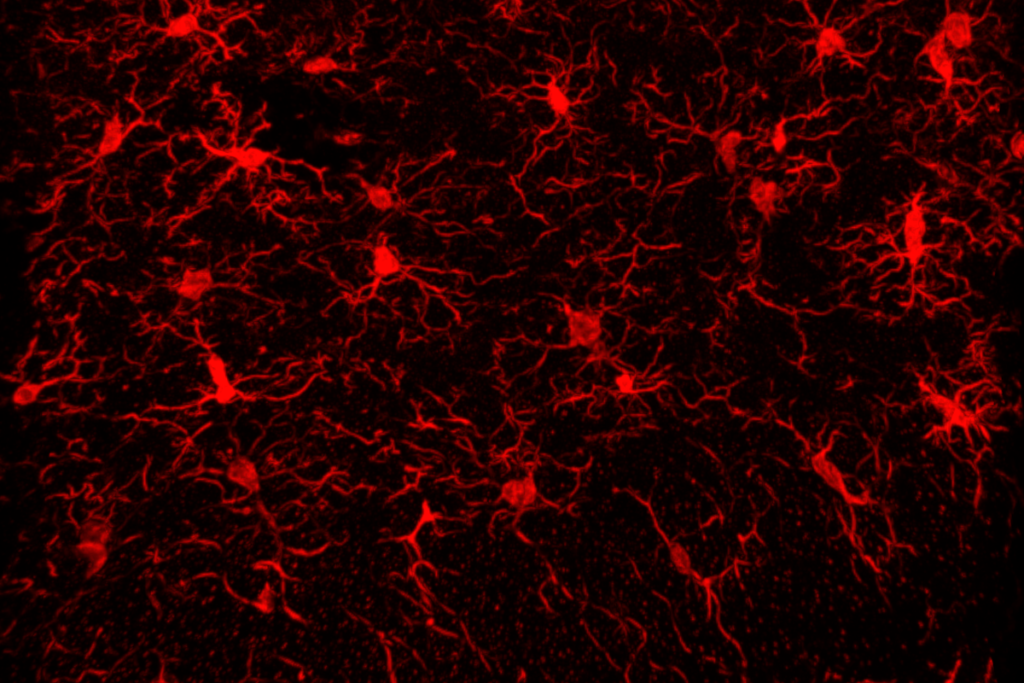

Microglia implicated in infantile amnesia

The glial cells could explain the link between maternal immune activation and autism-like behaviors in mice.