Reward prediction error

Recent articles

‘What Is Intelligence?’: An excerpt

In his new book, published today, Blaise Agüera y Arcas examines the fundamental aspects of intelligence in biological and artificial systems. This excerpt from Chapter 4 covers temporal difference learning, a reinforcement learning algorithm.

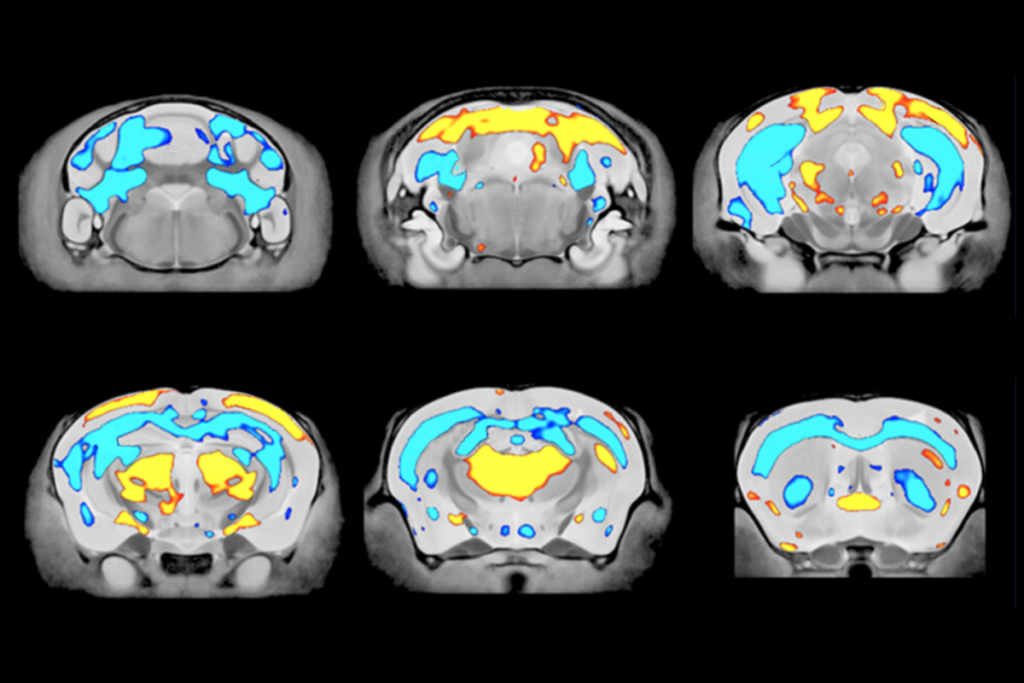

Vijay Namboodiri and Ali Mohebi on the evolving story of dopamine’s role in cognitive function

Researchers discuss the classic stories of dopamine’s role in learning, ongoing work linking it to a wide variety of cognitive functions, and recent research suggesting that dopamine may help us “look back” to discover the causes of events in the world.

Explore more from The Transmitter

Neuro’s ark: Spying on the secret sensory world of ticks

Carola Städele, a self-proclaimed “tick magnet,” studies the arachnids’ sensory neurobiology—in other words, how these tiny parasites zero in on their next meal.

Autism in old age, and more

Here is a roundup of autism-related news and research spotted around the web for the week of 2 March.

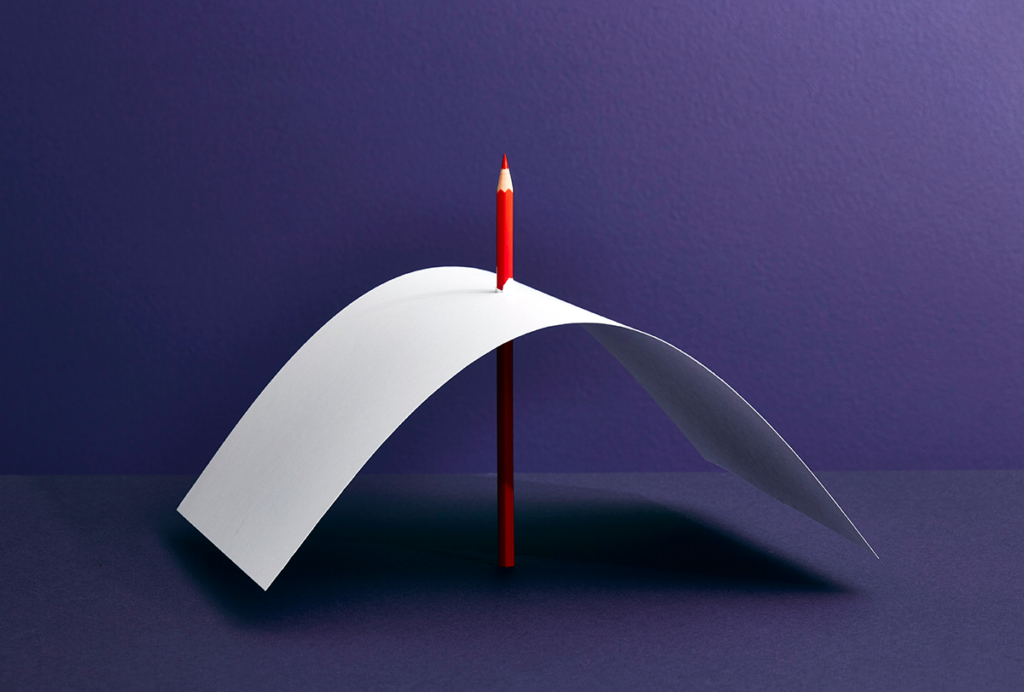

Lack of reviewers threatens robustness of neuroscience literature

Simple math suggests that small groups of scientists can significantly bias peer review.
