Neuroscientists have spent decades characterizing the types of information represented in the visual system. In some of the earliest studies, scientists recorded neural activity in anesthetized animals passively viewing stimuli—a setup that led to some of the most famous findings in visual neuroscience, including the discovery of orientation tuning by David Hubel and Torsten Wiesel.

But passive viewing, whether while awake or anesthetized, sidesteps one of the more intriguing questions for vision scientists: How does the rest of the brain use this visual information? Arguably, the main reason for painstakingly characterizing the information in the visual system is to understand how that information drives intelligent behavior. Connecting the dots between how visual neurons respond to incoming stimuli and how that information is “read out” by other brain regions has proven nontrivial. It is not clear that we have the necessary experimental and computational tools at present to fully characterize this process. To get a sense for what it might take, I asked 10 neuroscientists what experimental and conceptual methods they think we’re missing.

D

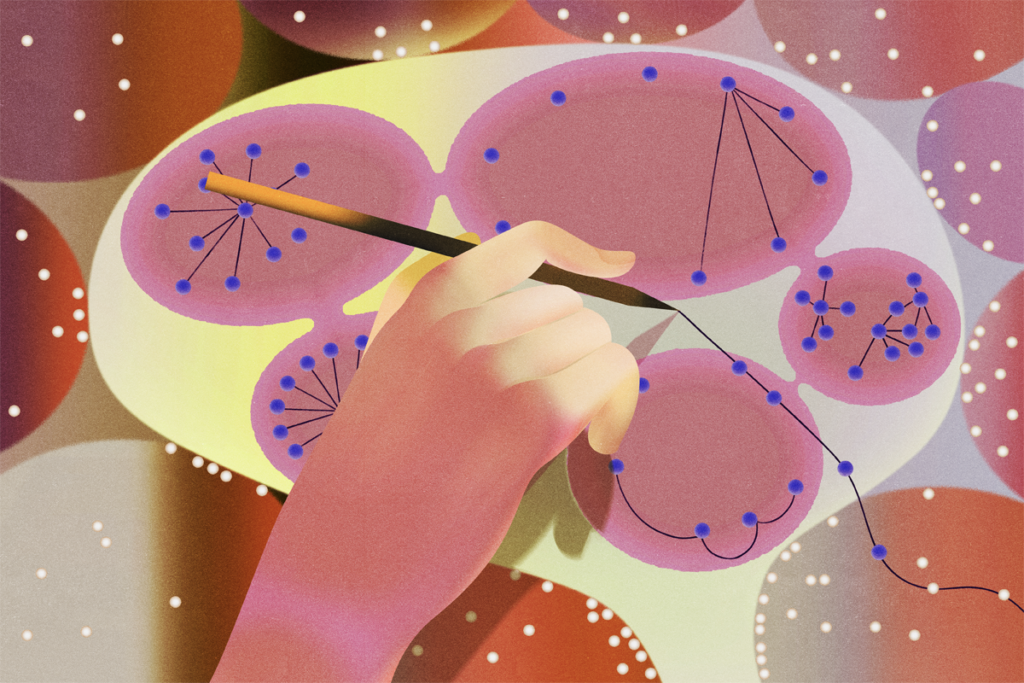

ecoding is a common approach for understanding the information present in the visual system and how it might be used. But decoding on its own—training classifiers to read out prespecified information about a visual stimulus from neural activity patterns—cannot tell us how the brain uses information to perform a task. This is because the decoders we use for data analysis do not necessarily match the downstream processes implemented by neural circuits. Indeed, there are pieces of information that can reliably be read out from the visual system but aren’t accessible to participants during tasks. Primary visual cortex contains information about the ocular origin of a stimulus, for example, but participants are not able to accurately report this information.This doesn’t mean decoding has no role to play. Attempting to identify decoders whose performance correlates with behavior broadly or on an image or trial-wise basis can sharpen our hypotheses about how visual information is read out. A recent study used such an approach to understand how mice perform an orientation detection task. This work compared a decoder that was trained to individually weight neurons to detect the grating with one that takes a simple mean of neural activity. They found that the latter was closer to matching mouse behavior; optogenetic perturbations validated this finding.
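The decoder comparison described above can be sketched in a few lines of code. The following is a minimal Python illustration on simulated data, not the cited study's analysis. The population model, the mean-difference weighting (a simple stand-in for a trained classifier such as logistic regression), and all function names (`simulate_trials`, `fit_weighted_decoder`, `fit_mean_decoder`, `fraction_match`) are hypothetical. The sketch fits both decoders on training trials, then scores each on held-out trials for accuracy and for trial-wise agreement with the animal's choices.

```python
import random

def simulate_trials(n_trials, n_neurons, signal, seed=0):
    """Hypothetical toy data, not real recordings: on each trial a grating is
    present (label 1) or absent (label 0). Each neuron adds a fixed,
    neuron-specific gain times the signal to unit-variance Gaussian noise."""
    rng = random.Random(seed)
    gains = [rng.uniform(-0.5, 1.5) for _ in range(n_neurons)]
    X, y = [], []
    for _ in range(n_trials):
        label = rng.randint(0, 1)
        X.append([g * signal * label + rng.gauss(0.0, 1.0) for g in gains])
        y.append(label)
    return X, y

def class_means(X, y, label):
    """Per-neuron mean response on trials with the given label."""
    rows = [x for x, lab in zip(X, y) if lab == label]
    return [sum(col) / len(rows) for col in zip(*rows)]

def fit_weighted_decoder(X, y):
    """Weights each neuron individually by its class-mean difference
    (a simple stand-in for a trained classifier)."""
    mu1, mu0 = class_means(X, y, 1), class_means(X, y, 0)
    w = [a - b for a, b in zip(mu1, mu0)]
    # Threshold halfway between the two class means projected onto w.
    thr = sum(wi * (a + b) / 2 for wi, a, b in zip(w, mu1, mu0))
    return lambda x: int(sum(wi * xi for wi, xi in zip(w, x)) > thr)

def fit_mean_decoder(X, y):
    """Pools all neurons equally; only the detection threshold is fit.
    Assumes the grating raises average activity, as it does in this toy model."""
    n = len(X[0])
    m1 = sum(class_means(X, y, 1)) / n
    m0 = sum(class_means(X, y, 0)) / n
    thr = (m1 + m0) / 2
    return lambda x: int(sum(x) / len(x) > thr)

def fraction_match(decoder, X, targets):
    """Trial-wise fraction of decoder outputs that equal `targets`."""
    return sum(decoder(x) == t for x, t in zip(X, targets)) / len(X)

X, y = simulate_trials(n_trials=200, n_neurons=50, signal=1.0, seed=1)
X_train, y_train, X_test, y_test = X[:100], y[:100], X[100:], y[100:]

# Placeholder "behavior" (hypothetical): in a real analysis these would be the
# animal's recorded reports. Here the true label is flipped on ~20% of trials.
rng = random.Random(2)
choices = [1 - lab if rng.random() < 0.2 else lab for lab in y_test]

results = {}
for name, fit in [("weighted", fit_weighted_decoder), ("mean", fit_mean_decoder)]:
    decoder = fit(X_train, y_train)
    results[name] = {
        "accuracy": fraction_match(decoder, X_test, y_test),
        "agreement_with_choices": fraction_match(decoder, X_test, choices),
    }
print(results)
```

Because the placeholder choices are only noisy copies of the true labels, the two agreement scores here say nothing about which readout a real animal uses. With recorded choices, the informative comparison is whether a decoder's trial-wise errors line up with the animal's errors, not merely which decoder is more accurate.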

Complicating matters, evidence suggests that readout mechanisms can depend on the task or context. For example, a 2008 study showed a surprising result. When monkeys were trained to perform a coarse depth discrimination task, inactivating area MT significantly worsened performance. After training on a fine discrimination task, however, MT inactivation did not degrade coarse discrimination performance. This training did not cause observable changes in MT tuning properties, so the researchers proposed that it must have triggered a change in the readout mechanism instead.
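The study's inference, that a change in readout rather than in tuning explains the altered inactivation effect, can be illustrated with a deliberately extreme toy model. In the Python sketch below, which is hypothetical and not a model of the actual experiment, the sensory representation is fixed: an "MT" pool and a second, unspecified pool carry the same noisy depth signal. Only the readout weights differ. The function names (`make_trial`, `readout`, `accuracy`) and the all-or-nothing weights are illustrative assumptions.

```python
import random

def make_trial(depth_sign, rng):
    """Hypothetical representation whose tuning never changes: an "MT" pool
    and an unspecified "other" pool both carry a noisy copy of the depth
    sign (+1 near, -1 far)."""
    mt = [depth_sign + rng.gauss(0.0, 1.0) for _ in range(20)]
    other = [depth_sign + rng.gauss(0.0, 1.0) for _ in range(20)]
    return mt, other

def readout(mt, other, w_mt, w_other):
    """Linear readout; in this toy, the weights are the only thing training changes."""
    drive = w_mt * sum(mt) + w_other * sum(other)
    return 1 if drive > 0 else -1

def accuracy(w_mt, w_other, inactivate_mt, n_trials=500, seed=0):
    """Fraction of trials on which the readout reports the correct depth sign."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(n_trials):
        sign = rng.choice([1, -1])
        mt, other = make_trial(sign, rng)
        if inactivate_mt:
            mt = [0.0] * len(mt)  # silence MT; the "other" pool is untouched
        correct += readout(mt, other, w_mt, w_other) == sign
    return correct / n_trials

# Readout that relies entirely on MT versus one re-weighted away from it.
before = {"intact": accuracy(1.0, 0.0, False), "mt_off": accuracy(1.0, 0.0, True)}
after = {"intact": accuracy(0.0, 1.0, False), "mt_off": accuracy(0.0, 1.0, True)}
print("MT-weighted readout:", before)
print("re-weighted readout:", after)
```

With the MT-weighted readout, silencing MT drops performance to roughly chance; with the re-weighted readout, it has no effect, even though no unit's responses changed. A real readout would presumably mix sources rather than switch completely, which would soften rather than eliminate the inactivation effect.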