Shafaq Zia is a science journalist and a graduate student in the Graduate Program in Science Writing at the Massachusetts Institute of Technology. Previously, she was a reporting intern at STAT, covering the COVID-19 pandemic and the latest research in health technology.

Shafaq Zia

From this contributor

Spotted around the web: COVID-19 during pregnancy, sleep problems, eugenics

Here is a roundup of news and research for the week of 6 June.

New resource tracks genetic variations in Han Chinese populations

An online database called NyuWa catalogs genetic variations among nearly 3,000 individuals and provides a comprehensive reference genome for the Han people.

Explore more from The Transmitter

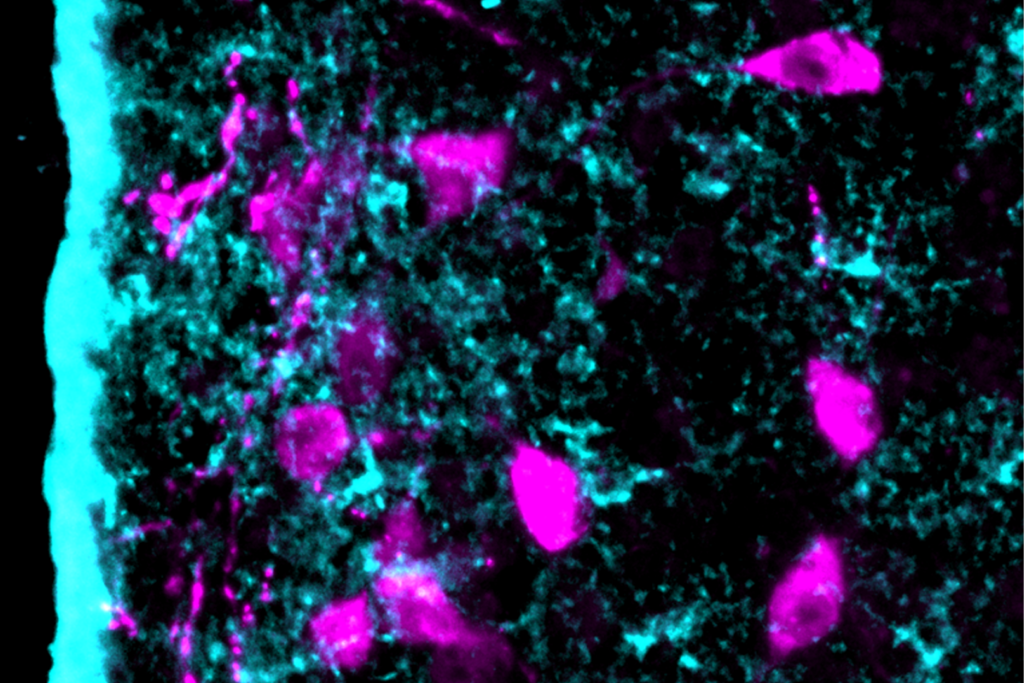

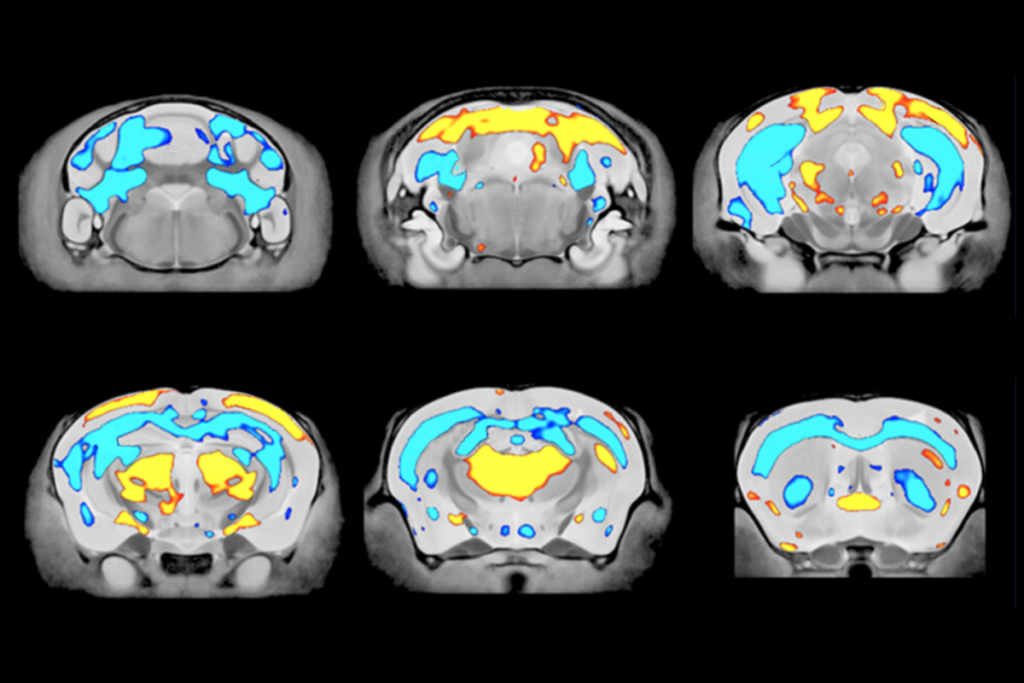

Astrocytes orchestrate oxytocin’s social effects in mice

The cells amplify oxytocin—and may be responsible for sex differences in social behavior, two preprints find.

Neuro’s ark: Spying on the secret sensory world of ticks

Carola Städele, a self-proclaimed “tick magnet,” studies the arachnids’ sensory neurobiology—in other words, how these tiny parasites zero in on their next meal.

Autism in old age, and more

Here is a roundup of autism-related news and research spotted around the web for the week of 2 March.
