Ashley Juavinett is associate teaching professor of neurobiology at the University of California, San Diego, where she also co-directs STARTneuro, a program funded by the National Institutes of Health’s Blueprint Enhancing Neuroscience Diversity through Undergraduate Research Education Experiences. Through her work and writing, she seeks to understand the best ways to train the next generation of neuroscientists. A significant part of this effort is building resources that make such training more accessible and effective.

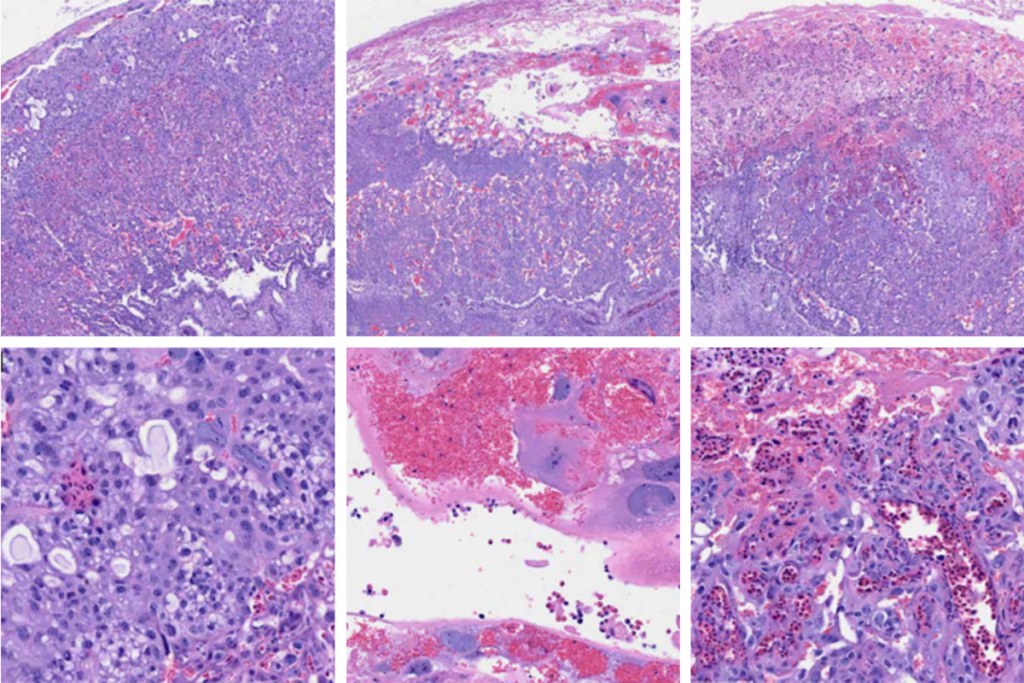

Juavinett completed her Ph.D. with Edward Callaway at the Salk Institute for Biological Studies in San Diego, California, investigating the cell types and circuits underlying visual perception in mice. She then conducted postdoctoral research with Anne Churchland at Cold Spring Harbor Laboratory in New York, advancing ethological approaches to understanding behavior as well as cutting-edge methods for recording from freely moving animals.

Juavinett is the author of “So You Want to Be a Neuroscientist?” an accessible guide to the field for aspiring researchers, and she has previously written for the Simons Collaboration on the Global Brain.