Tychele Turner is assistant professor of genetics at the Washington University School of Medicine in St. Louis, Missouri, where her lab focuses on the study of noncoding variation in autism, precision genomics in 9p deletion syndrome, optimization of genomic workflows and the application of long-read sequencing to human genetics.

Tychele Turner

Assistant professor of genetics

Washington University School of Medicine

From this contributor

How long-read sequencing will transform neuroscience

New technology that delivers much more than a simple DNA sequence could have a major impact on brain research, enabling researchers to study transcript diversity, imprinting and more.

Focus on function may help unravel autism’s complex genetics

To find the pathogenic mutations in complex disorders such as autism, researchers may need to conduct sophisticated analyses of the genetic functions that are disrupted, says geneticist Aravinda Chakravarti.

Explore more from The Transmitter

Let’s teach neuroscientists how to be thoughtful and fair reviewers

Blanco-Suárez revamped the traditional journal club by developing a course in which students peer review preprints alongside the published papers that evolved from them.

New autism committee positions itself as science-backed alternative to government group

The Independent Autism Coordinating Committee plans to meet at the same time as the U.S. federal Interagency Autism Coordinating Committee later this month—and offer its own research agenda.

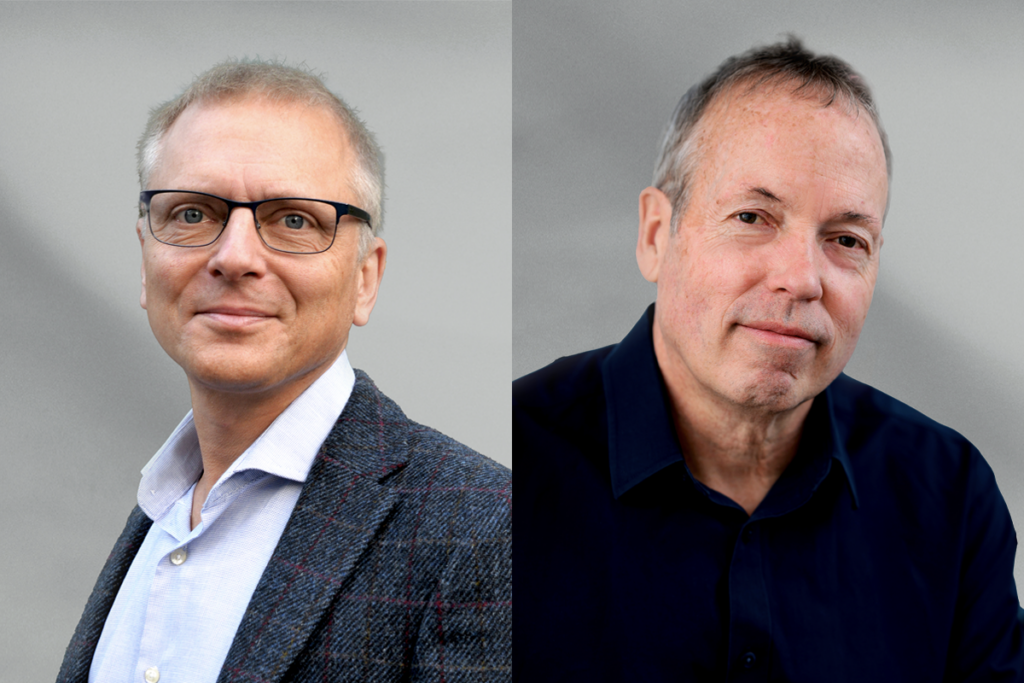

Two neurobiologists win 2026 Brain Prize for discovering mechanics of touch

Research by Patrik Ernfors and David Ginty has delineated the diverse cell types of the somatosensory system and revealed how they detect and discriminate among different types of tactile information.
