Like many fields in neuroscience, the human functional neuroimaging community has turned a critical eye on its own methods and findings in the past few years. That introspection has led to calls for larger sample sizes to establish reproducible and generalizable associations between brain measures and individual phenotypes, a key step for functional MRI (fMRI) to expand its utility in clinical or other real-world settings.

One particularly influential paper, from Scott Marek and his colleagues, made the case that sample sizes in most published brain-wide association studies—that is, studies that aim to link structural or functional brain measures to individual phenotypes, which represent only a subset of all fMRI work—are far too small to yield meaningful results. If we see a statistically significant relationship between a single brain variable (such as the strength of a particular functional connection) and a single phenotypic variable (such as intelligence quotient) in 25 participants, the chances that the relationship will replicate in another 25 participants are only about 5 percent, or whatever threshold was originally used for significance. For 1,000 participants, the chances are about 20 percent. In many cases, detecting associations robust enough to replicate requires thousands or even tens of thousands of participants.

Those results were sobering to those of us who do this kind of work with either basic-science or translational goals in mind. But such meta-scientific scrutiny is needed and welcome. Marek and his colleagues’ findings are true, and also perhaps unsurprising: When studying nuanced individual variability in a generally healthy adult human population, the combination of small effects and noisy data makes it difficult to conclusively establish relationships between brain measures and phenotypes (but see my recent editorial with Monica Rosenberg for steps we can take to build better models). Yet calls for a wholesale move toward gigantic samples for every such study carry a real risk: We will stifle innovation on a key feature, namely in-scanner paradigms, that could boost sensitivity to brain-phenotype relationships and thereby decrease the sample sizes necessary for robust, reproducible results in this arena.

In-scanner paradigms, or what we have participants do during those valuable minutes they spend in the MRI machine, have received comparatively less attention than sample size and statistical practices, yet they are a powerful knob we can tweak to get the biggest bang for our buck out of our data. Although we may think we need huge numbers to discover stable and meaningful within- and across-person differences, that may be only because we’re using suboptimal paradigms.

Because it is not practical for individual labs to scan thousands of participants, many of the best-powered brain-phenotype relationship studies have used data from modern consortia, which have gone to Herculean lengths to amass sample sizes large enough to get statistical traction. These studies include the Human Connectome Project, which scanned 1,200 healthy young adults; the Adolescent Brain Cognitive Development study, which aims to scan nearly 12,000 adolescents five times each over a 10-year period; and the UK Biobank, the largest of all, which has targeted 100,000 participants, with longitudinal follow-ups. Many different research groups will mine the data these consortia produce to address many different questions for years to come, so the scan paradigms they choose are especially consequential.

Unfortunately, these consortia studies often use paradigms that aren’t very sensitive to brain-behavior relationships. All three listed above devote the majority, or at least a plurality, of their functional imaging time to the so-called “resting state,” in which people lie quietly in a scanner with no explicit stimulation or task and simply let their minds wander; the rest of the time is divided among a mix of traditional cognitive tasks (for example, n-back tasks that tap into working memory, or emotional faces tasks that tap into affective processing). Rest has its perks: It is doable even for hard-to-scan populations, including children and certain patient groups; easy to standardize across sites; and straightforward to use in longitudinal designs. For these reasons, it has become the de facto choice for brain-phenotype association studies. Indeed, Marek and his colleagues used resting-state data in their main analyses, and for good reason: These were the data that were available in large enough numbers to make their point.

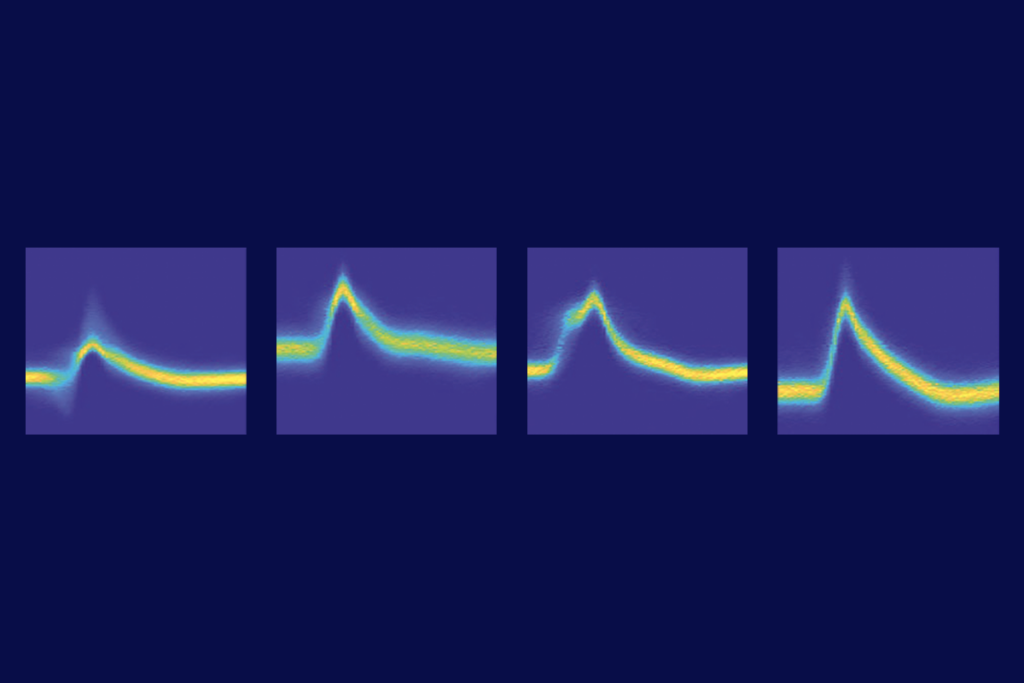

But in the decade or so since the start of the Human Connectome Project, a parallel literature has emerged showing that what matters is not just how much data we acquire but what kind of data—and unfortunately, resting state is often the least sensitive to individual differences. Although some of this work is admittedly smaller in scale, it is nonetheless convincing: Models built to predict the same phenotypes in the same participants perform markedly better when the functional imaging data are acquired with a task paradigm on board. This is true even when the paradigm is seemingly unrelated to the target behavior, such as using a simple motor (finger-tapping) task to predict fluid intelligence. In some cases, we can explain up to five times the variance in out-of-scanner phenotypes using task-based compared with resting-state data.

To understand why this might be the case, consider the analogy of a stress test: Rather than observe the brain in a completely unconstrained state, we deliberately place it under the conditions in which functional patterns relevant to phenotypes of interest—such as markers of present or future health status—are most likely to emerge. Although traditional tasks can already serve as more effective stress tests than rest, there is mounting evidence that non-traditional tasks, including so-called “naturalistic” tasks, such as having participants watch a movie or listen to a story in the scanner, might do even better.

So why are the current large-scale datasets so biased toward resting state and a handful of well-worn cognitive tasks? By their nature, these projects require large teams with multiple principal investigators. The need to achieve consensus, or science-by-committee, often means a default to tried-and-true practices. This is understandable: It’s risky to include a newer paradigm in a protocol destined to be run on hundreds or thousands of people. On the other hand, by overemphasizing canon or the desire for harmonization across datasets, we risk getting stuck in a rut.

If not via tradition, how might we go about choosing in-scanner paradigms to use in consortium studies, and what are some good candidates? To be clear, I am not advocating that we throw entirely untested paradigms into multi-million-dollar scientific efforts. But we need to leave room to innovate on paradigms without requiring thousands of participants per study. By enabling evidence to build up from smaller-scale studies, future large-scale consortia can take “safe bets” on paradigms that have proven both reliable and sensitive to relevant variability within and among individuals across many smaller samples. In fact, evidence is stronger if it comes from multiple labs, because it reassures us that the results are robust to small variations in how distinct labs collect, preprocess and analyze data.

As mentioned above, some evidence shows that audiovisual movies and spoken-word stories help separate signal from noise while preserving or even enhancing meaningful differences. Another promising paradigm is annotated rest, in which participants verbally report the contents of their thoughts when probed at certain intervals during or after the scan, to prompt introspection and enhance links between measured brain signals and ongoing thought patterns. Finally, interactive games and other assays from the burgeoning field of computational psychiatry use behavior on carefully designed tasks as a readout of cognitive and affective styles, which can help surface relationships between brain activity and phenotypes, especially those relevant for mental health.

Frankly, the “best” paradigm, or suite of paradigms, is still largely an open question—but this is exactly the point: If we dismiss findings using new paradigms based on sample size alone, we miss the opportunity to develop new approaches to data collection that could be game-changing for how we run future large-scale studies, and therefore how we understand the human brain.