An unusual sort of gang violence gripped the city of Lopburi, Thailand, during the peak of the COVID-19 pandemic: Rival groups of macaque monkeys, whose appetites were typically satisfied by the generosity of now-absent tourists, began to fight over the scarce food available.

During the melees, the monkeys seemed to know which side they were fighting for. But for Mark Laubach, professor of neuroscience at American University, who watched videos of the events online, it was difficult to tell. “It would take 10 years of primatology and improved cameras” to figure it out, one of his colleagues who studies non-human primates told him, he says. To Laubach, that assessment highlighted not only the complexity of monkey social dynamics, but also the limitations of the tools currently available to classify that behavior. “Those methods work for a single animal,” he says. “But when you get into multi-animal stuff, it becomes very challenging.”

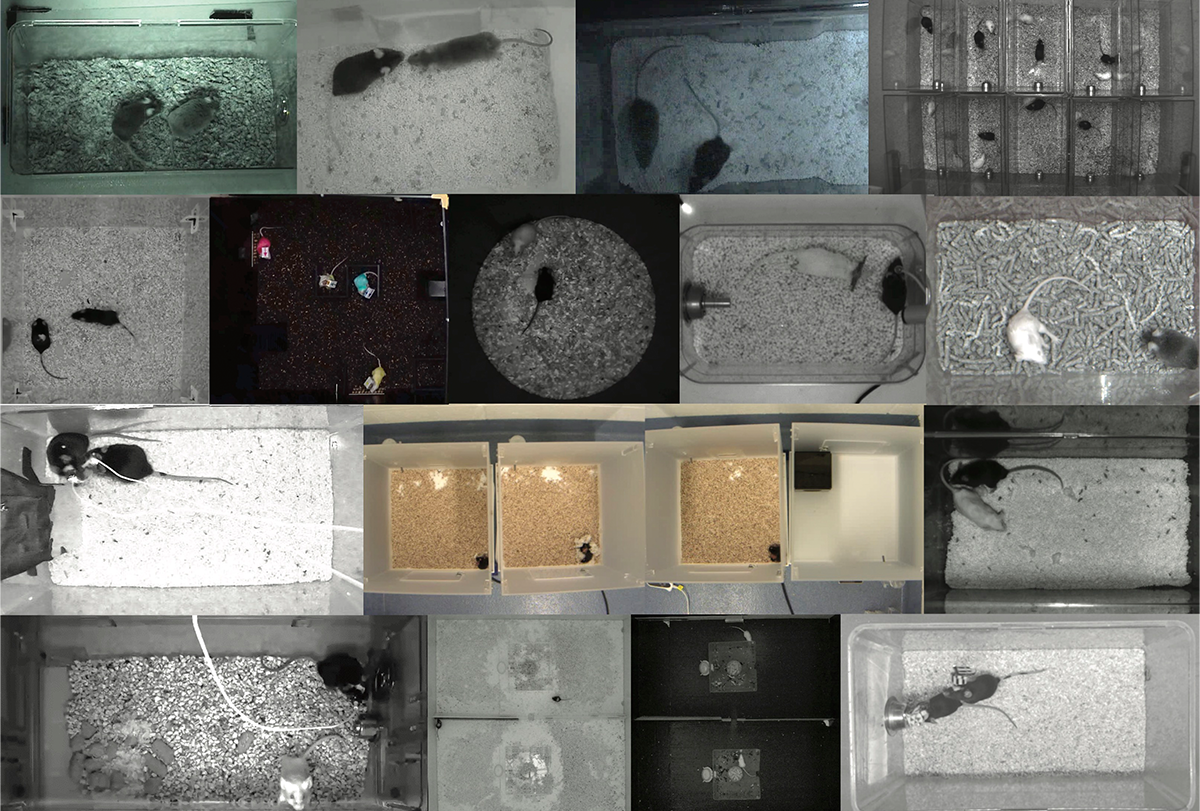

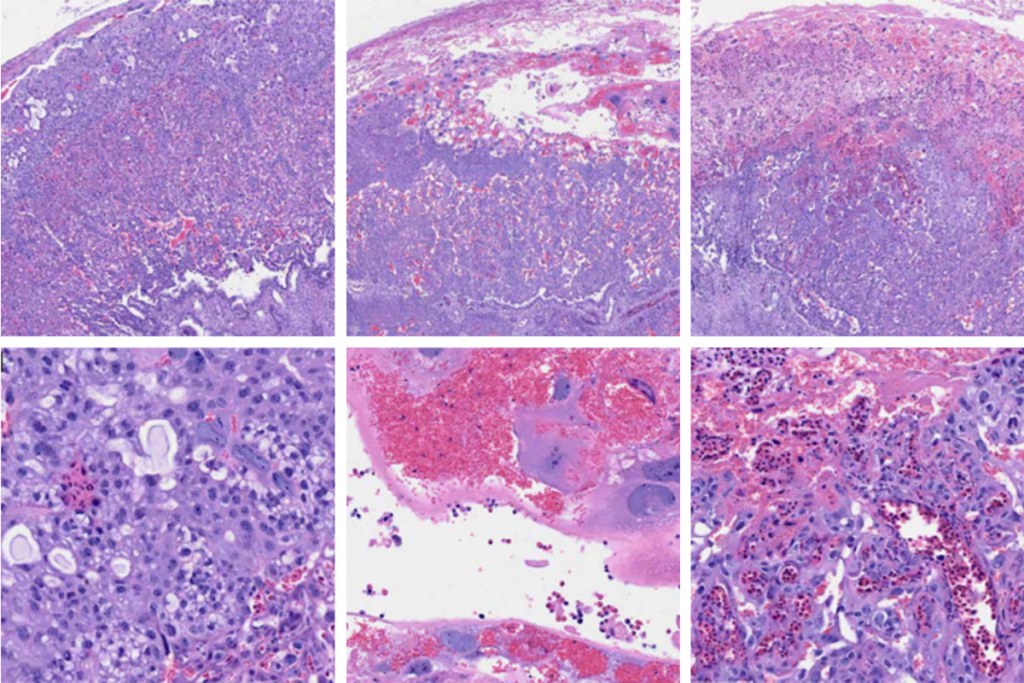

Social neuroscientists, who frequently video-record interacting animals for hours on end, are particularly interested in classifying this kind of multi-animal behavior. And although a proliferation of new tools, such as improved trackers and pose estimators, has helped researchers streamline their work, limitations remain, says Ann Kennedy, associate professor of neuroscience at the Scripps Research Institute.

One flaw in particular is that classifiers trained on data from one lab typically do not work on data from other labs, Kennedy says. In an attempt to solve that problem, she and her colleagues have organized a “Multi-Agent Behavior Challenge,” which launched today. The challenge is the third competition to come from Kennedy and her co-organizers.

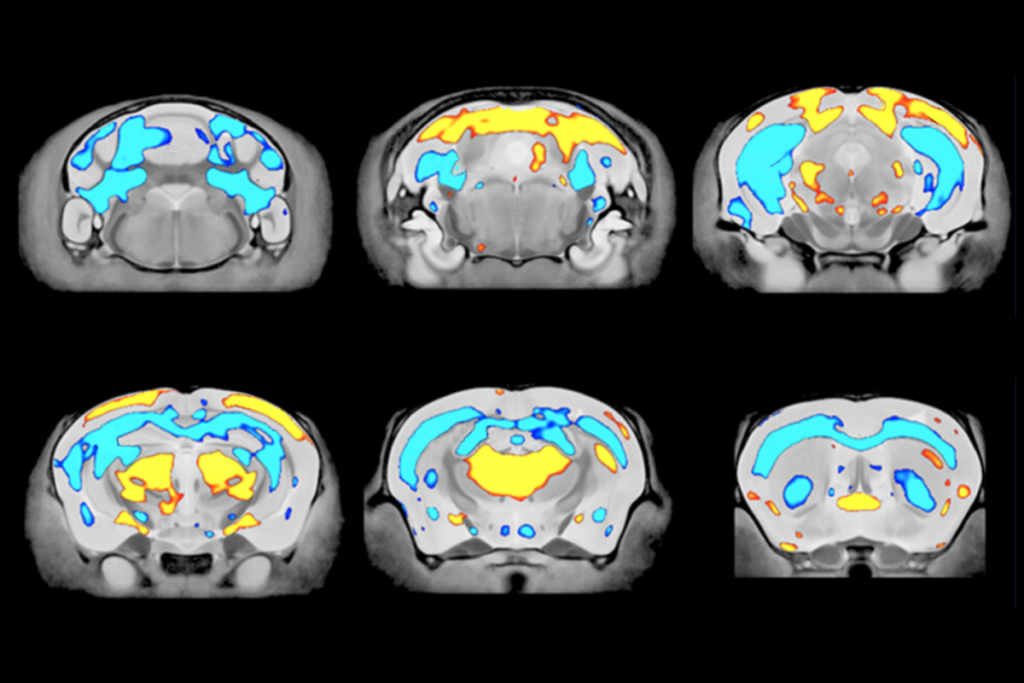

The plan is for competitors to receive pose-tracking estimates from videos of interacting mice, as well as handmade annotations of the recorded behaviors, from 15 different labs, Kennedy says. Competitors then train bespoke algorithms on those pose estimates and test how well they can predict 36 behaviors—which include sniffing, attacking, mounting, chasing and freezing—in a new set of estimates from which the annotations have been withheld.

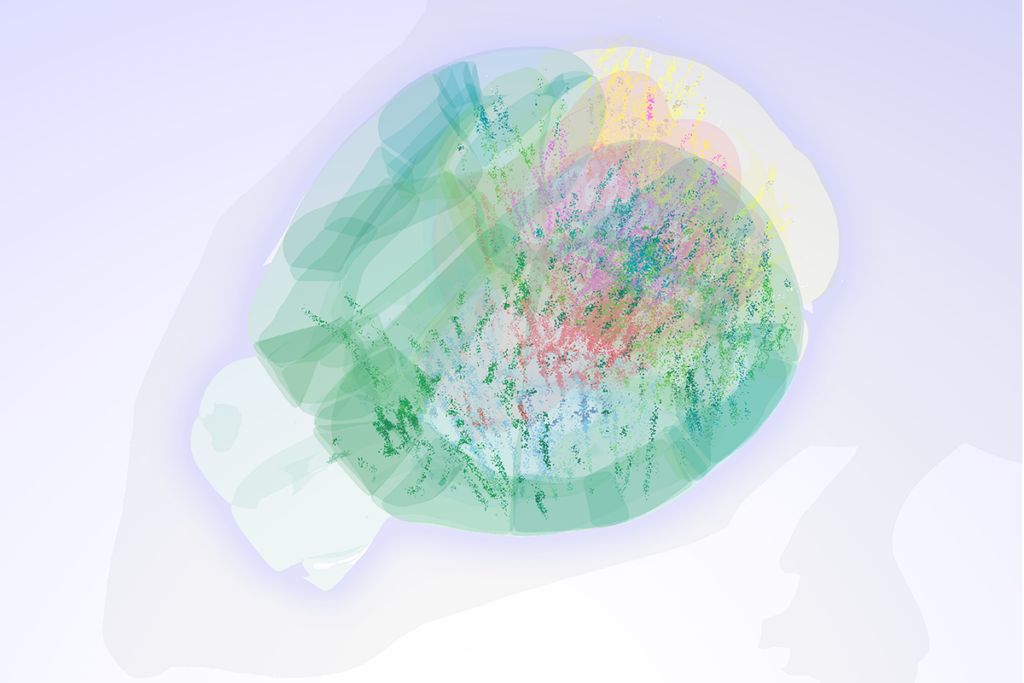

“We’re saying, in addition to learning representations that are good for arbitrary tasks, can you learn a common representation of behavior that’s invariant to all of these different lab-specific sources of noise?” Kennedy says.

The people who develop the model that can most accurately classify behaviors in the test dataset are slated to receive a cash prize of $20,000, with additional prizes for second through fifth place.

By creating the competition, the organizers can draw on the expertise of people outside the social neuroscience field, says Laubach, who is not involved in the competition. “I don’t know how you would advance the field without this approach,” he says. “It is necessary, because the whole thing is collaborative. No [single] person knows how to do this.”

Challenges like this one are common in the field of computer science, says competition co-organizer Jennifer Sun, assistant professor of computer science at Cornell University. “There are so many new models being developed,” she says. “If they’re all evaluated on different datasets, it becomes really, really hard to compare the models and understand, does this model work better because it was trained on a different dataset or because the architecture was better or [because] it was trained longer?”

For Sun and Kennedy’s first competition, which they launched in 2021, they drew inspiration from vision-science challenges, such as those that sought to improve algorithms for object recognition. The team asked participants to classify mouse behavior based on video tracking and pose estimation collected from a single lab. In 2022, their challenge involved creating representations of the behavior of multiple species, including mice, flies and ant beetles.

The results may help researchers decide which model to use for an experiment, Sun says. They can also establish benchmarks for where the field is and which issues still need to be addressed, she adds. “If a model works well, it lets us know what’s the best state-of-the-art method right now for tackling that task. And if things don’t work, then we know to invest more research resources from the computer science side into solving these problems.”

The challenges also help reveal trends in the field, she says. For example, all of the top-performing models in the 2022 competition used transformers, a type of neural-network architecture that also underlies large language models. “There’s definitely this effect that we should disentangle,” Sun says. “Does that mean transformers are the best, or does it mean that more people try transformers?”

This year, the team framed the competition around developing classifiers that work on data from multiple labs, within certain constraints. “All of the datasets are top-down videos of interacting mice. And we’re giving people key points,” Kennedy says. But winning teams will still need to build models that work on videos collected from different experiments, with different video frame rates, tracked body parts and mouse strains. “It’s representative of the variety of settings you see in social neuroscience labs studying mouse behavior. And so the hope is that people can learn representations not just within a lab, but across labs.”

Identifying a classifier that works across labs would be an improvement upon past competitions, says Nancy Padilla-Coreano, assistant professor of neuroscience at the University of Florida, who is not involved in the competition. “It’s a step towards it being useful for the field.”

Another benefit of this and past challenges is that they bring together researchers from different fields, says Shreya Saxena, assistant professor of biomedical engineering at Yale University, who was not involved in the competitions. Computer scientists may not have the neuroscience background to know how to identify problems in the field. “They may want to actually solve or help address some of these issues, but they may not know where to start,” she says.

But neuroscientists should keep in mind that just because a model beats out the others does not necessarily mean that it advances the field, Saxena adds.

People who want to participate can create an account on Kaggle, the site that is hosting the competition. They can compete as individuals or as part of a team, using either a new or existing model. Participants will be asked to submit predictions generated by their own models, but data, import code and submission code will be provided, Kennedy says.

The organizers plan to make the data and winning models freely available for researchers to use after the competition. For example, the dataset might help researchers better predict a mouse’s next behavior based on its past behavior, Kennedy says. And in the future, these improvements may help advance the field’s understanding of social behavior in other species as well, she adds. “Once the dataset is curated and out there, the hope is that this can be used for all sorts of follow-up projects.”