Breaking the jar: Why NeuroAI needs embodiment

Brain function is inexorably shaped by the body. Embracing this fact will benefit computational models of real brain function, as well as the design of artificial neural networks.

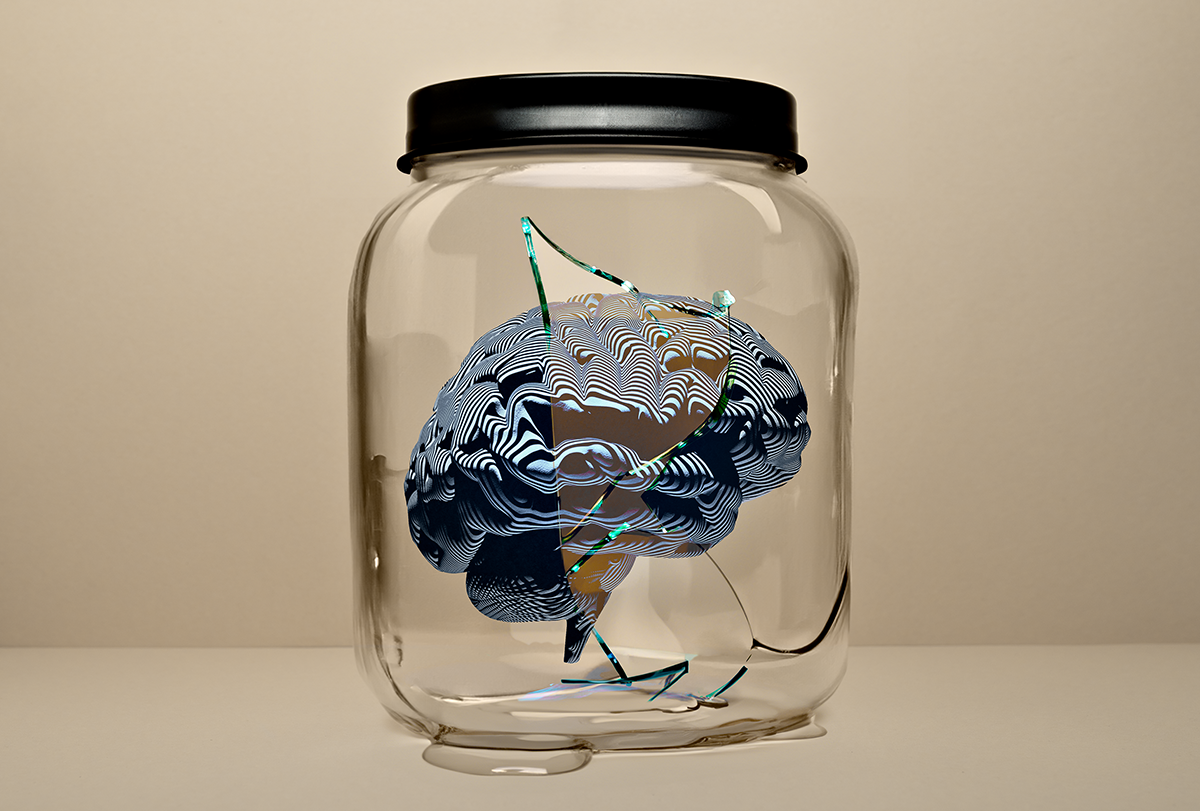

Among cartoon supervillains, Krang, the slimy nemesis of the Teenage Mutant Ninja Turtles, is a favorite of neuroscientists. After a vague accident, Krang is stripped of his body and reduced to a brain in a vat of cerebrospinal fluid. Lacking physical agency, Krang appeals to his fellow supervillain, the Shredder, to construct a new brain-machine interface and bipedal robotic skeleton. This freshly re-embodied android Krang teams up with the Shredder to take on the Ninja Turtles and conquer the Earth.

Like the visionary writers of the Ninja Turtles, neuroscientists often make the abstraction that a brain can exist in isolation from the body. They model the brain as a computational machine that exists in a realm of pure thought, receiving inputs and sending outputs as abstract information streams. Yet this “brain in a jar” worldview neglects a simple fact: Brains and nervous systems evolved jointly with the bodies they inhabit.

Indeed, coordinating body movement is the ultimate point of brain function. The brain is not connected to the external world, except through the body. It receives no sensory inputs except those transduced through the sensory organs, and it can enact no consequences except through muscles and other actuators. Thus, the biomechanical features and constraints of the body are built into the function of the brain. How animals move also determines how they gather new sensory information. Ultimately, Krang needed a robotic body to manifest his evil deeds. The cartoon spent little time elaborating on how a bipedal artificial body was tuned to be controlled by Krang’s reptilian brain, but some intense engineering (or millions of years of evolution) must have happened behind the scenes.

Embodiment, then, is the concept that the function of the brain is inexorably shaped by the body. Although it is not a new idea in neuroscience, the embodiment lens is often neglected when neuroscientists study specific brain subsystems, particularly higher-order “cognitive” functions. Fortunately, in the growing field of NeuroAI, embodiment is now garnering greater attention. In 2023, for example, Anthony Zador and his collaborators proposed an embodied Turing test, and the 2024 National Institutes of Health BRAIN NeuroAI Workshop featured embodiment as a central theme.

Here, we discuss three key features of embodied intelligence that are characteristic of animal brains: feedback, biomechanics and modularity. We argue that embracing these three features will benefit computational models of real brain function, as well as the design of artificial neural networks that move beyond large-scale word salad generation to efficiently accomplish real-world tasks.

First, feedback is a ubiquitous characteristic of biological neural networks. Feedforward models in which information flows in only one direction, such as from the retina to the lateral geniculate nucleus to the primary visual cortex, are popular because they are easy to interpret. But they are valid only in very narrow contexts and more typically are mere fantasies or wishful thinking; for example, a vast majority of lateral geniculate nucleus inputs are not from the retina, and these inputs include significant feedback from the primary visual cortex. Biological systems almost always rely on constant and multiscale feedback: Animals interact with fluctuating physical environments, neural circuits rely heavily on recurrent connections, interlinked organs adjust their function based on feedback from other organs, and cells modify themselves through gene regulatory feedback mechanisms. The view of the brain as a passive computational engine that tries to understand (i.e., to form “representations” of) the world denies the essential agency of animals to act and to have an impact on the external world.

Second, biomechanical features of each specific body are essential to understand the function of the neural system within it. Consider, for instance, this video of a trout; its body undulates in the current and then surges upstream toward a small rock. What appears to be a dexterous natural behavior is, in fact, executed by a recently dead fish. So this “behavior” requires no neural activity and arises entirely from the interaction between the vortices shedding from the rock and the biomechanics of the fish body. Some neuroscientists might find this distressing. But our more optimistic view is that harnessing the mechanical intelligence of the body can simplify the demands of nonlinear neural control.

Third, brains are highly modular. Even as we advocate for an integrative approach, there is undeniable value in deeply characterizing specific parts of the brain and musculoskeletal systems in isolation. But these modules eventually need to be stitched together. Another way of thinking about modularity is in terms of bottlenecks. In the retina, for instance, retinal ganglion cells are the only cells that send outputs to the rest of the image-forming visual system, and motor-control circuits all converge onto the final common output of motor neurons that synapse onto muscles. Such bottlenecks define modules, making it possible to develop computational models of each module that nevertheless interface with one another and from which emerges the holistic behavior of the virtual animal. Importantly, models of different subsystems can be made at different resolutions and learned from different data.

Embracing these three features can help neuroscientists frame research from an embodied perspective, but there is still a chasm between the aspiration to study an embodied brain and how to implement it in practice. After all, neural dynamics are complicated enough; do we now have to model nonlinear musculoskeletal dynamics as well? And how urgent is it to integrate a model of the bladder with a model of the striatum? Choices must be made. Fortunately, a convergence of comprehensive biological datasets and artificial-intelligence approaches makes these choices slightly easier. Scientists are developing (and sharing) biomechanically realistic full-body models of animals, including rats, mice and flies. Currently, most of the published models are still quite basic—essentially, skeletons of the body—but many collaborative groups, ourselves included, are working to add biologically realistic muscles and sensors.

What makes this emerging zoo of virtual animals distinct from previous generations of biomechanical models is their compatibility with models of the brain. We can think of the brain models as “controllers” that plan and execute behavior while responding to sensory stimuli from the environment. Currently, a prevailing software platform for simulating virtual animals is MuJoCo, a physics engine that simulates the biomechanics of skeletons, muscles and tendons. Because MuJoCo was developed in part to facilitate research in robotics, its core capabilities include handling advanced contact forces of dynamic body parts with an environment and learning controllers to coordinate desired body movements. A JAX-accelerated implementation called MuJoCo-MJX now makes training whole-body virtual animals tractable for researchers with access to GPU computing.

Even so, some future features would be welcome additions to this or another neuro-physical simulation platform. A flexible interface to model and fine-tune networks of neurons with recurrent architecture in a closed loop with the biomechanics would accelerate the development of fully integrated neuromechanical models. The ability to rapidly simulate non-rigid biomechanics, such as fluid-structure interactions, deformable skeletal elements and muscle actuators that slide past each other, would further expand the ability to ask questions at the interface of neural function, musculoskeletal dynamics and natural behavior.

The prospect of developing a fully integrated neuromechanical model is perhaps closest to fruition for the adult fruit fly, Drosophila. The recent completion of comprehensive synaptic wiring diagrams, known as connectomes, of the fly brain and ventral nerve cord means it is now tractable to hook up computational models of neural dynamics at the resolution of cells and synapses to whole-animal biomechanics. Such embodied models will open the door to in silico experiments that are currently not feasible in real animals, complementing existing genetics and behavioral tools. As an example, consider the sensorimotor control of robust walking. From more than a century of intense study, we know that nerve-cord circuits can generate rhythmic motor patterns autonomously, yet when the walking animal encounters a perturbation, such as uneven terrain, it must integrate feedback from sensory neurons with feedforward commands to adjust, recover and maintain walking. In silico experiments that ask how neural dynamics of recurrent circuits couple with biomechanical consequences in a virtual walking fly, complete with interactions with an unpredictable physical environment, will help untangle these dual functions.
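To make the interplay of feedforward commands and sensory feedback concrete, here is a deliberately minimal sketch in plain Python. It is not a fly model and uses no connectome data or physics engine. Everything in it is a hypothetical stand-in chosen for illustration: a single "joint" with first-order dynamics, a 1 Hz rhythmic target standing in for an autonomous motor pattern, a constant push standing in for uneven terrain, and the names `simulate_joint`, `feedback_gain` and `tau`. The feedforward command is computed by inverting the toy joint dynamics, and the feedback term is a simple proportional correction on the sensed tracking error.

```python
import math


def simulate_joint(feedback_gain, n_steps=5000, dt=0.001, tau=0.1):
    """Simulate one toy joint and return its peak tracking error.

    Assumed (hypothetical) dynamics: d(angle)/dt = (-angle + command + push) / tau,
    integrated with a forward-Euler step.
    """
    omega = 2.0 * math.pi  # 1 Hz rhythm, standing in for an autonomous motor pattern
    angle = 0.0
    peak_error = 0.0
    for i in range(n_steps):
        t = i * dt
        target = math.sin(omega * t)
        error = target - angle
        peak_error = max(peak_error, abs(error))

        # Feedforward: invert the toy dynamics so that, with no perturbation,
        # the joint follows the target without needing any sensory signal.
        command = target + tau * omega * math.cos(omega * t)

        # Feedback: proportional correction based on the sensed error.
        command += feedback_gain * error

        # Perturbation: a constant push between t = 2 s and t = 3 s,
        # standing in for a stretch of uneven terrain.
        push = 0.5 if 2.0 <= t < 3.0 else 0.0

        angle += dt * (-angle + command + push) / tau
    return peak_error


open_loop_error = simulate_joint(feedback_gain=0.0)    # feedforward only
closed_loop_error = simulate_joint(feedback_gain=4.0)  # feedforward plus feedback
print("peak error, feedforward only:", open_loop_error)
print("peak error, with feedback:", closed_loop_error)
```

In this toy setting the feedforward rhythm alone tracks well until the push arrives, and adding feedback shrinks the resulting error. A neuromechanical model of the kind described above would replace each stand-in with recurrent circuit dynamics and a simulated body, where separating the two contributions is far less obvious.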

An embodiment lens could also help shape a rapidly emerging area of NeuroAI to develop foundation models of animal brains and behaviors. The idea behind foundation models is to gather a large corpus of neural and video recordings of animals in a variety of contexts and then train neural-network models to predict neural activity and behavior in response to any arbitrary input. If such efforts are to provide any insight into biology, we argue that they must carefully consider each animal’s body, not just tracked key points on the body and other aggregate measures of behavior. An artificial neural network might learn to control swimming, but how it accomplishes this could be completely different from how the fish’s brain does it, if even a dead fish can surge upstream in a current. After all, the brain does not directly control positions of knees, elbows and shoulders; it controls muscles, which generate forces to move bodies and manifest behavior.

The convergence of software tools, open datasets and a culture of collaborative science makes it an auspicious time to pursue embodiment in NeuroAI. We stand to gain a deeper understanding of how the nervous system controls behavior and a more ethological perspective on AI—one in which the brain sits not in a jar but inside the body that it evolved to sense and control. As Master Splinter, the martial arts instructor and adoptive father of the Ninja Turtles, once wisely stated, “A creative mind must be balanced by a disciplined body.”
