People, including researchers, make mistakes. Some errors are simple: forgetting a colon in a computer program, or copying and pasting the wrong value from a paper during a literature review.

But when missteps evade detection and make it into a published paper, there can be “staggering” consequences, such as spurring a subfield into dead-end research questions, writes Malte Elson, professor of the psychology of digitalization at the University of Bern, in an editorial published last month in Nature.

To rectify this situation, Elson co-founded an error-hunting project with a fitting name: Estimating the Reliability & Robustness of Research, or ERROR. Modeled after the “bug bounty” programs that tech companies run to find software glitches, ERROR pays reviewers to comb through highly cited published papers for mistakes. The papers’ authors must agree to participate, and they also receive a small fee.

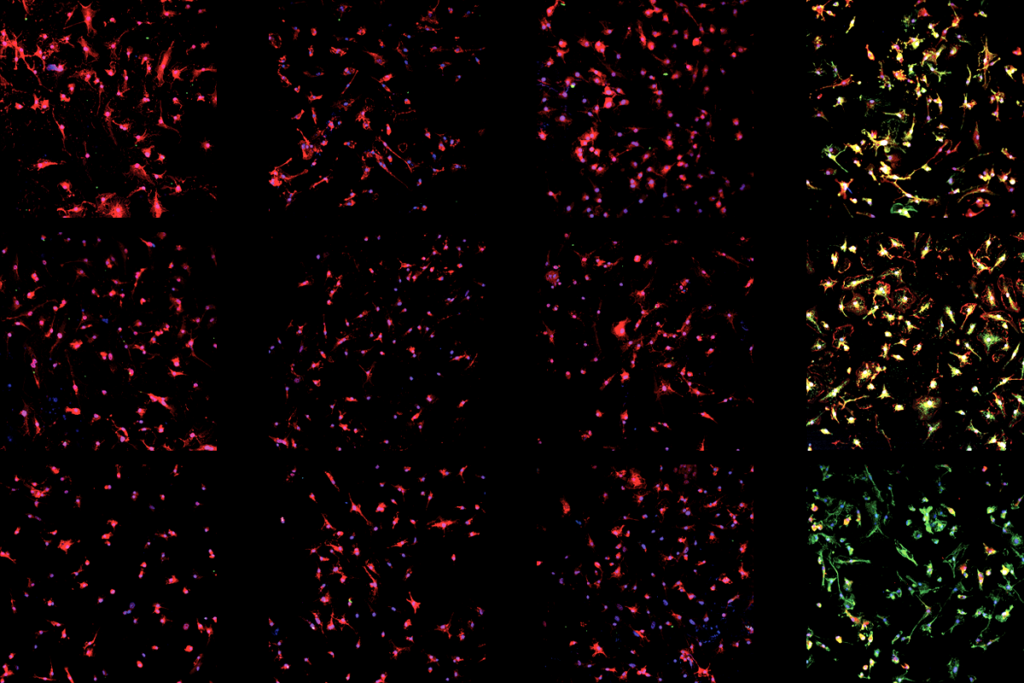

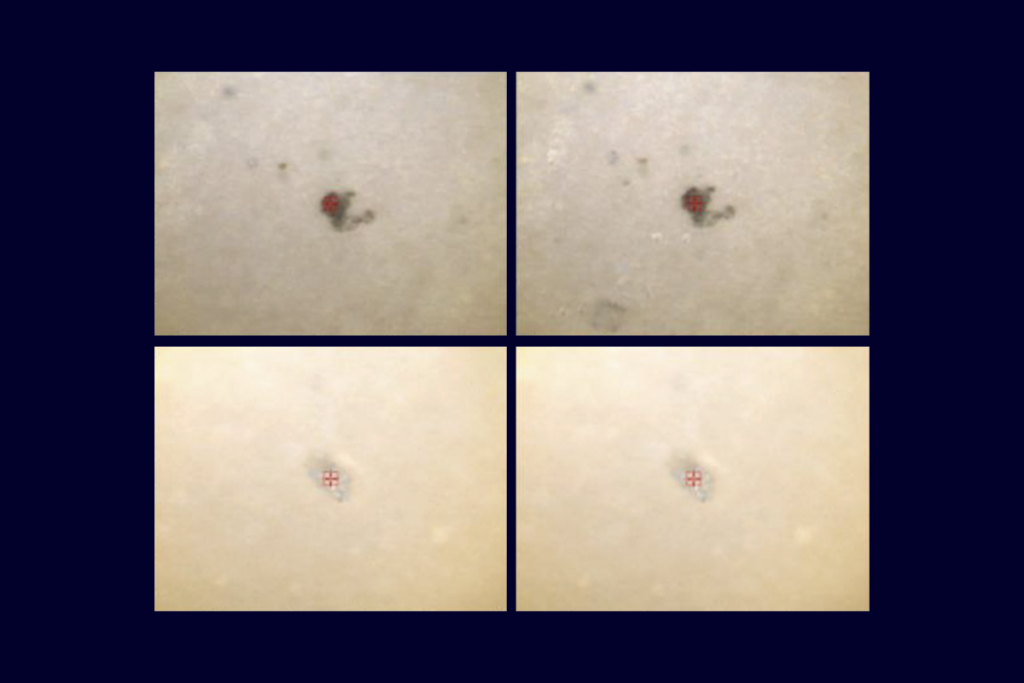

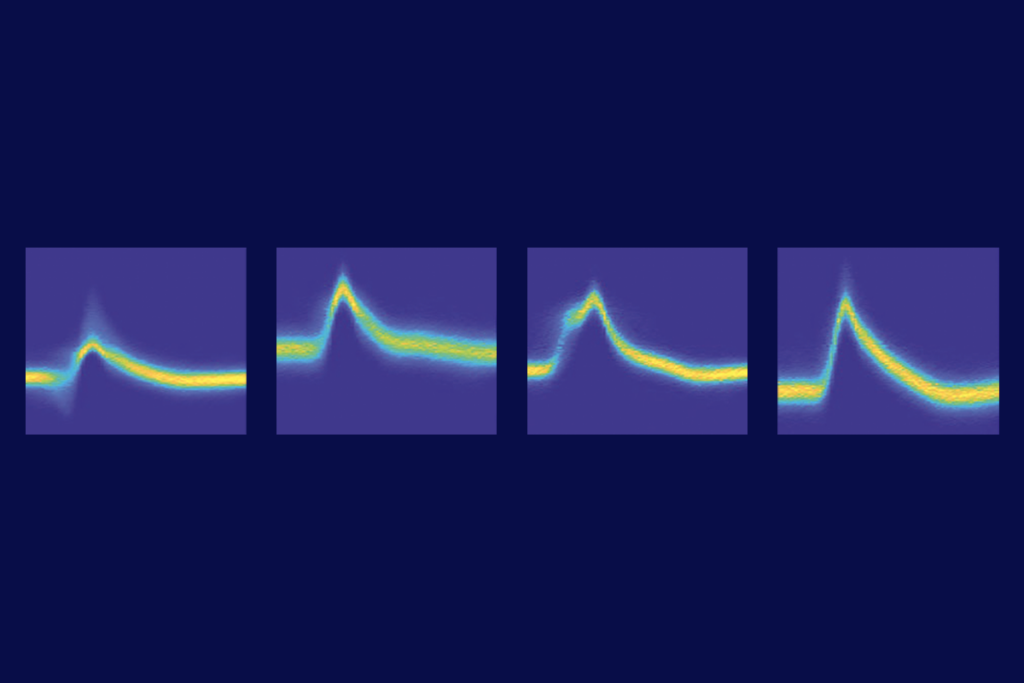

So far, one review is complete, and 12 others are underway. The completed review, posted 17 May on the ERROR project website, examined a 2018 paper that compared how different setups of an inhibitory control task evoke varying levels of electrical activity in the motor cortex.

In this “go/no-go” task, participants must respond to one stimulus (go trials) but not to another (no-go). For the no-go trials to actually measure inhibition, though, they should be less frequent than go trials and spaced closely together, the study shows. Yet about 40 percent of inhibitory control studies use inappropriate measurement parameters, according to a literature review included in the study.

Russell Poldrack, professor of psychology at Stanford University and a contributing editor for The Transmitter, conducted the error hunt on this work, focusing on the literature review portion. He reported minor errors, including a coding mistake in one figure, some overlooked papers in the literature search and some incorrectly copied values from the reviewed papers. None affected the paper’s results.

Poldrack checked only 10 percent of the reviewed papers. But when the study’s sole author, Jan Wessel, associate professor of psychological and brain sciences and neurology at the University of Iowa, learned about those mistakes, he had his trainees recheck every paper included in the review. They found additional mistakes and even made new ones. And there is a 52 percent chance that he and one of his trainees both made the same error and never caught it, according to a calculation by Wessel. He says he contacted the journal editor, who decided the errors did not warrant a correction.

The Transmitter spoke with Poldrack and Wessel about their experience participating in the ERROR program and how they think the field should handle slipups in general.

This interview has been edited for length and clarity.

The Transmitter: How did you get involved in this project?

Jan Wessel: I went to college with Malte Elson—I think I once bought a couch from him. I’ve followed his work and admired what he’s done in the metascience space. When they launched this initiative, he wrote me a nice email asking me if I wanted to participate, and I was pretty excited about it. And then he and I discussed which paper would be most appropriate. They were interested in papers that have a decent number of citations, of which I don’t have hundreds, so that narrowed it down pretty quickly.

But to me, the only consideration was that I wanted it to be a single-author paper, because that made it much easier to say, “This is all me. If there’s a massive error to be found, we know who the culprit is.” And there’s also no issue of talking to one of my former grad students and being like, “Hey, can we submit this to somebody like Russ to find errors while you don’t have a tenure-track job yet?”

Russell Poldrack: Then Malte reached out to me, presumably because I’ve worked in that same space of response inhibition. It sounded fun.

TT: How did this experience compare with the traditional peer-review process?

RP: It was totally different. I wasn’t reading the paper to pick apart problems with the design and the interpretation; it was really, “Can I look very closely at what they did and see whether it was correct?” So for me, it was actually a lot more fun than the usual peer-review process.

JW: In classic peer review, you’re not really required—even though maybe you should be—to share your analysis code; there’s no expectation of people really digging in and looking for factual errors that you made. I described it to my wife as, “Well, peer review is like you walk down the street and somebody is supposed to comment on your outfit. And this is like you’re waking up in the morning, and you’re naked, and somebody is commenting on what you look like.”

TT: You both made mistakes when hunting for or correcting errors in the paper. What are your thoughts about that?

RP: It’s humans doing science, and humans make errors in whatever they do. Even in the most life-or-death situations. We’ve had two space shuttles explode in the air because somebody made a mistake at some point. And there’s basically no such thing as software code that’s bug-free: As hard as you try, you’re not going to get rid of bugs.

In the wake of the reproducibility crisis, there’s been this narrative that if you made an error in your paper, you’re a terrible scientist or you’re committing fraud. I think we need to pull back from that and realize that humans make errors, and our goal should be to figure out how to make fewer of them.

In my lab, if somebody makes an error, I have them present it and share what happened and how we might have prevented it. It’s a blame-free environment. I think we as a field need to move closer to a blame-free environment that tries to understand what kinds of errors people make and how we can minimize them, knowing that we can never completely get rid of them.

JW: You want to think of science as the one place where mistakes don’t happen, but that’s not realistic. It supports the idea that you can never ever take a single study as accepted knowledge. We need to replicate; we need to extend; we need to build theoretical complexes that feature many, many papers making a coherent point. So if you’re the first study in a space, you want to ensure you don’t make a major error. And then, still, you want to wait for replications to come in. At some point, you hope that even though there are probably minor errors in a lot of the papers, the truth will average out of the noise.

TT: So, what can researchers do to catch mistakes as they happen, or at least before they enter the literature?

JW: We’ve put some procedures in place in my lab to address some of these issues. For example, any value that somebody takes from a piece of paper and puts into the computer, two people look at it. It doesn’t make it impossible for mistakes to occur, but it makes it much less likely. The other big thing is code review. I talked about the ERROR program extensively in our last lab meeting, and I think it was really eye-opening for a lot of people and showed the utility in doing code review.

RP: I agree with all that, and I’ll add three things. One, so much of what we do depends on code now, and we should think about writing more tests as we develop our code to catch a lot of these issues. Two, our department now has a reproducibility consultant. When you finish a study, you can give them your data, your code and the draft of your paper, and they will try to reproduce what you’ve done. We should think about providing that kind of resource at the department level.

And the last thing: In our field, people are incentivized to publish papers in prestigious journals. Those journals generally require you to have a clean story supported by positive results. So people are incentivized to find results that are really clean and nice, and not to dig in and find all the flaws. We need to incentivize people to think that finding a significant result is just a first step. And then you need to figure out all the ways in which you could have done it wrong before you send the paper in, rather than saying, “Oh, the p-value is less than 0.05—next!”

TT: This project focuses on finding errors in published papers, but do you think there should be an error hunt during the traditional pre-publication peer review?

JW: It would be doable. The publishers make an intense amount of profit off our work, and they could use some of that money to pay for error reviewers during peer review. Of course, they would never do that, because why would they? It narrows their profit margins. I think it’s perfectly feasible; it’s also never going to happen.

RP: It would be great if it happened, but I don’t think it’s feasible. You either have to get regular old peer reviewers off the street to do it, or you have to hire people to do it. Given how hard it is to even find reviewers these days, finding reviewers to also dig into methods is really challenging.

On a smaller scale, I do think it’s doable, but given how many papers are getting submitted to every journal right now … if we can get everybody to agree to do like a tenth as much science as they’re doing now, or at least submit a tenth as many papers, then I think we could do it.

JW: Yeah, I don’t think it’s a universal thing that you could implement. And there was a financial incentive for us—I don’t know exactly how much money you got, Russ—but if you can imagine a postdoc who was making $58,000 a year, if you give them $1,000 to look at a Nature Neuroscience paper and try to find a bug, I think that is doable.

RP: I think you’re right. If there were strong enough financial incentives, that could well work.