Reproducibility is a team sport: Lessons from a large-scale collaboration

Building reproducible systems across labs is possible, even in large-scale neuroscience projects. You just need rigor, collaboration and the willingness to look your own practices dead in the eye.

Almost everyone says they care about reproducibility. And sure, lots of folks have heard of and perhaps worried about the replication crisis that has been rattling some areas of science. But when it comes to doing something about it, the crowd gets a lot thinner. Few people roll up their sleeves to tackle the issue head-on, and even fewer pause to wonder if their own methods might be part of the problem.

Why is that? Well, digging into reproducibility feels like a chore, certainly more so than chasing novel results. Moreover, although individual labs might be great at pointing out inconsistencies in the literature, they’re not so great at figuring out what improves reproducibility. That’s because of a structural limitation: To really test what makes results reproducible across labs, you need multiple labs. One lab in isolation can’t do it.

I didn’t set out to study reproducibility. My colleagues and I stumbled into it as part of our work on the International Brain Laboratory (IBL), a large-scale collaboration across 21 labs. The goal was to generate a brain-wide neural activity map in mice as they made decisions, to help us understand how animals blend sensory inputs and internal beliefs to guide actions. As it turned out, the scale of our project created a golden opportunity to study reproducibility. Recording from the entire mouse brain was simply too much for any one lab, so we had to pool data from many labs. That meant we needed to ensure that one lab’s data could reliably stand in for another’s. Reproducibility became not just a “nice to have” but an essential, affording the rare chance to ask: How reproducible are results across labs, and what makes these results more or less reliable?

After years of concerted effort, we were relieved to discover that reproducibility is indeed possible—with careful rigor. The lessons we learned can help others in the field adopt more reproducible practices, which in turn will help speed the pace of discovery, reduce costs and, hopefully, improve faith in science. In periods of political uncertainty with unstable funding priorities, reproducibility in science becomes even more important. The ability to independently verify scientific findings is not merely a technical concern but a safeguard against politicization, misinformation and the erosion of credibility. In this sense, initiatives to embed reproducibility as a core activity at the U.S. National Institutes of Health provide a stabilizing force in an otherwise volatile research environment. Moreover, initiatives promoting reproducibility ensure that even amid shifting political currents, the scientific record remains reliable.

O

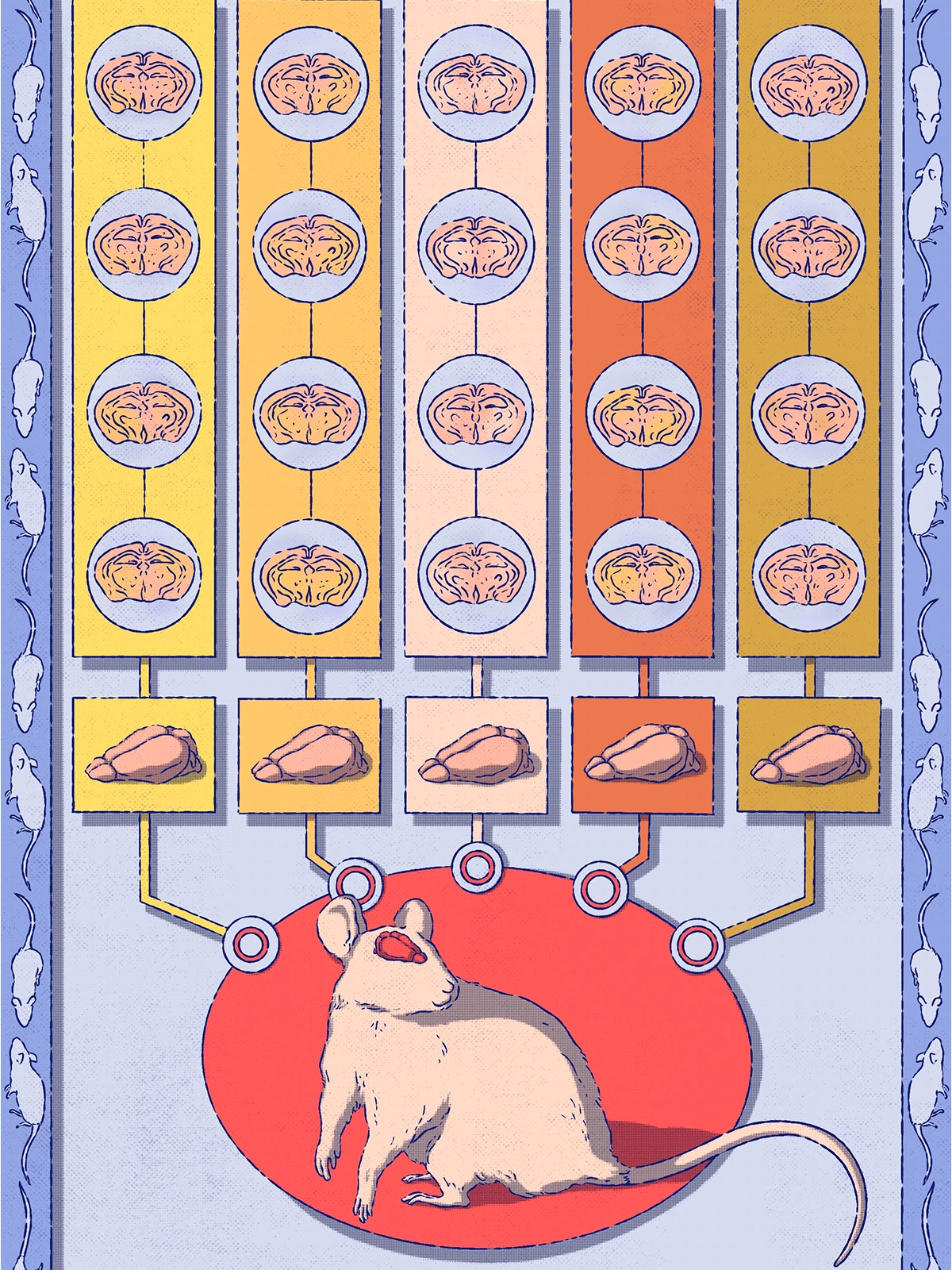

ur first reproducibility challenge at the IBL was with behavioral data. We standardized the instruments and training protocols and then tested whether mice trained in different labs behaved the same way. Following some aha moments—mice bred in-house differed from mice purchased directly from the vendor, for example—we demonstrated that the behavior was indeed reproducible across labs. This was a big deal, because previous work had argued that mouse behavior was too variable to replicate from lab to lab. Fortunately, reproducibility in behavior is possible—but only if you’re rigorous. Standardized tools and strict quality control made all the difference.Assessing the reproducibility of behavior was challenging, but neural data provided a much harder nut to crack. Previous efforts to evaluate the reproducibility of electrophysiological signals left open questions. Some efforts, such as those looking at the nature of preplay in hippocampal signals or the existence of spike-timing-dependent plasticity in nematodes, focused on measurements in only a few labs that had not set out to standardize their approaches. Other efforts, such as those led by the Allen Institute, made clear that standardization within a single facility can enhance reproducibility but left unclear whether this was achievable in individual, geographically separated laboratories. To assess reproducibility in our brain-wide recordings, we made sure every mouse had at least one electrode inserted in exactly the same brain location, specified by coordinates indicating the front-back and center-out location. In total, our team made 121 insertions in this location in the brain. We used linear probes that spanned secondary visual areas, the hippocampus and some higher-order thalamic nuclei. After aligning the brain images from each mouse with automated tools, we were in a position to ask: How similar are the results?

Here is what surprised us: The trajectory of the electrode itself was a major source of variability. Despite using identical instruments and standardized coordinates, some electrodes missed the mark—completely. Why? Two main reasons: First, mouse brains vary in size. What works for one brain might be off target for another. Second, experimenters made on-the-fly tweaks. Avoiding blood vessels is smart (no one wants a hemorrhage), but those small adjustments sometimes meant the electrode missed its target region entirely. Luckily, once we applied strict quality control, we were able to preserve a solid subset of trajectories for analysis.
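For readers who think in code, here is a minimal sketch of what a pre-registered trajectory check could look like. It is an illustration only, not the IBL pipeline: the `Insertion` record, the coordinate values and the 500-micron threshold are all hypothetical, chosen just to show a rule that is fixed before anyone looks at the neural data.

```python
import math
from dataclasses import dataclass


@dataclass
class Insertion:
    """One probe insertion (hypothetical record, not an IBL data structure)."""
    lab: str
    planned_xy_um: tuple[float, float]  # planned (center-out, front-back) position
    actual_xy_um: tuple[float, float]   # position recovered from brain images


def displacement_um(ins: Insertion) -> float:
    """Distance between the planned and the recovered insertion point."""
    return math.dist(ins.planned_xy_um, ins.actual_xy_um)


def passes_qc(ins: Insertion, max_displacement_um: float = 500.0) -> bool:
    """Keep an insertion only if it landed close enough to the target.

    The 500-micron default is an arbitrary illustrative threshold.
    """
    return displacement_um(ins) <= max_displacement_um


# Made-up example values, not real coordinates from the project.
insertions = [
    Insertion("lab_a", (-2000.0, -2000.0), (-1900.0, -1950.0)),
    Insertion("lab_b", (-2000.0, -2000.0), (-2600.0, -2800.0)),
    Insertion("lab_c", (-2000.0, -2000.0), (-2000.0, -2300.0)),
]

kept = [ins for ins in insertions if passes_qc(ins)]
for ins in insertions:
    status = "keep" if passes_qc(ins) else "drop"
    print(f"{ins.lab}: {displacement_um(ins):.0f} um off target -> {status}")
```

The point of writing the rule as a function with an explicit threshold is that it can be agreed on early and applied identically to every lab's data, including your own.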

When we focused on those verified brain areas, neural activity was surprisingly consistent. We even trained machine-learning decoders to “guess” which lab a given dataset came from, and they failed—a win for reproducibility. That said, individual neurons were a bit all over the place. Their responses varied enough that we needed lots of neurons to get reliable results. The takeaway: Population-level analyses were much more stable and reproducible than metrics focused on single neurons. For example, decoder performance held steady across labs, but the “proportion of neurons modulated by x” was often less consistent.
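The logic of the lab-identity test can be sketched in a few lines. This is a toy version under stated assumptions, not the decoders we used: it swaps in a simple nearest-centroid classifier with leave-one-out cross-validation, runs on synthetic data in which every "lab" is drawn from the same distribution, and compares decoding accuracy against a label-shuffled null. All function names and parameters here are hypothetical.

```python
import random
from statistics import fmean


def nearest_centroid_predict(train_X, train_y, x):
    """Predict the label whose mean feature vector is closest to x."""
    centroids = {}
    for label in set(train_y):
        rows = [r for r, l in zip(train_X, train_y) if l == label]
        centroids[label] = [fmean(col) for col in zip(*rows)]

    def sqdist(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))

    # sorted() makes tie-breaking deterministic
    return min(sorted(centroids), key=lambda lab: sqdist(centroids[lab], x))


def loo_accuracy(X, y):
    """Leave-one-out accuracy of the nearest-centroid lab decoder."""
    hits = 0
    for i in range(len(X)):
        train_X = X[:i] + X[i + 1:]
        train_y = y[:i] + y[i + 1:]
        hits += nearest_centroid_predict(train_X, train_y, X[i]) == y[i]
    return hits / len(X)


def permutation_p_value(X, y, n_perm=200, seed=0):
    """Fraction of label shuffles that decode at least as well as the real labels."""
    rng = random.Random(seed)
    observed = loo_accuracy(X, y)
    shuffled = list(y)
    at_least_as_good = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if loo_accuracy(X, shuffled) >= observed:
            at_least_as_good += 1
    return observed, (at_least_as_good + 1) / (n_perm + 1)


# Synthetic sessions: 3 labs x 10 sessions x 4 summary features,
# all drawn from the same distribution (no true lab effect).
rng = random.Random(1)
labs = ["lab_a", "lab_b", "lab_c"]
X = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(30)]
y = [lab for lab in labs for _ in range(10)]

accuracy, p_value = permutation_p_value(X, y)
print(f"lab decoding accuracy: {accuracy:.2f} (chance is about {1 / len(labs):.2f})")
print(f"permutation p-value: {p_value:.3f}")
```

In this framing, a decoder that performs no better than the shuffled labels is the good outcome: it means the features carry no detectable lab signature. A small p-value would instead flag a lab-specific difference worth tracking down.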

What did we learn that matters for the rest of the field? Three things. First, set quality-control rules early and enforce them like your results depend on it. Because they do. Yes, it hurts to toss out data you worked hard to acquire, but poor-quality data will only lead you astray. The Allen Institute has been leading by example here (and they have been honest about how painful it can be). We should all follow suit. Second, lean into population-level analyses. Don’t let the idiosyncrasies of individual neurons lead you down a rabbit hole. Bigger populations provide a sturdier signal and more reproducible results. Finally, share your data and code openly. This one might sting a little. I would like to believe I write pristine, flawless code out of pure internal scientific virtue. But the truth is, knowing the community will see my work makes me try harder. Public scrutiny improves quality, and responding to feedback from other users has absolutely made my code better. Code review helps a lot here, too. In the IBL, we took the time to scrutinize one another’s code, on the lookout for errors. This effort benefited the code considerably and helped the code writer and the code reviewer learn better practices along the way.

The bottom line is simple: Reproducibility isn’t glamorous, but it’s essential. And as our experience shows, it’s not just a theoretical ideal. You absolutely can build reproducible systems across labs, even in large-scale collaborations. You just need rigor, collaboration and the willingness to look your own practices in the eye.

Disclosure: Anne Churchland is a member of the IBL, a project funded in part by the Simons Foundation, which also funds The Transmitter. She receives an honorarium for her membership on the executive committee for the Simons Collaboration on the Global Brain. Churchland used ChatGPT to make suggestions for modifying a few sentences to be more widely accessible.
