Computational neuroscience

Recent articles

From genes to dynamics: Examining brain cell types in action may reveal the logic of brain function

Defining brain cell types is no longer a matter of classification alone, but of embedding their genetic identities within the dynamical organization of population activity.

Cracking the neural code for emotional states

Rather than act as a simple switchboard for innate behaviors, the hypothalamus encodes an animal's internal state, which influences behavior.

The 1,000 neuron challenge

A competition to design small, efficient neural models might provide new insight into real brains—and perhaps unite disparate modeling efforts.

The best of ‘this paper changed my life’ in 2025

From a study that upended astrocyte research to one that reignited the field of spiking neural networks, experts weighed in on the papers that significantly shaped how they think about and approach neuroscience.

Tatiana Engel explains how to connect high-dimensional neural circuitry with low-dimensional cognitive functions

Neuroscientists have long sought to understand the relationship between structure and function in the vast connectivity and activity patterns in the brain. Engel discusses her modeling approach to discovering the hidden patterns that connect the two.

Explore more from The Transmitter

Let’s teach neuroscientists how to be thoughtful and fair reviewers

Blanco-Suárez revamped the traditional journal club by developing a course in which students peer review preprints alongside the published papers that evolved from them.

New autism committee positions itself as science-backed alternative to government group

The Independent Autism Coordinating Committee plans to meet at the same time as the U.S. federal Interagency Autism Coordinating Committee later this month—and offer its own research agenda.

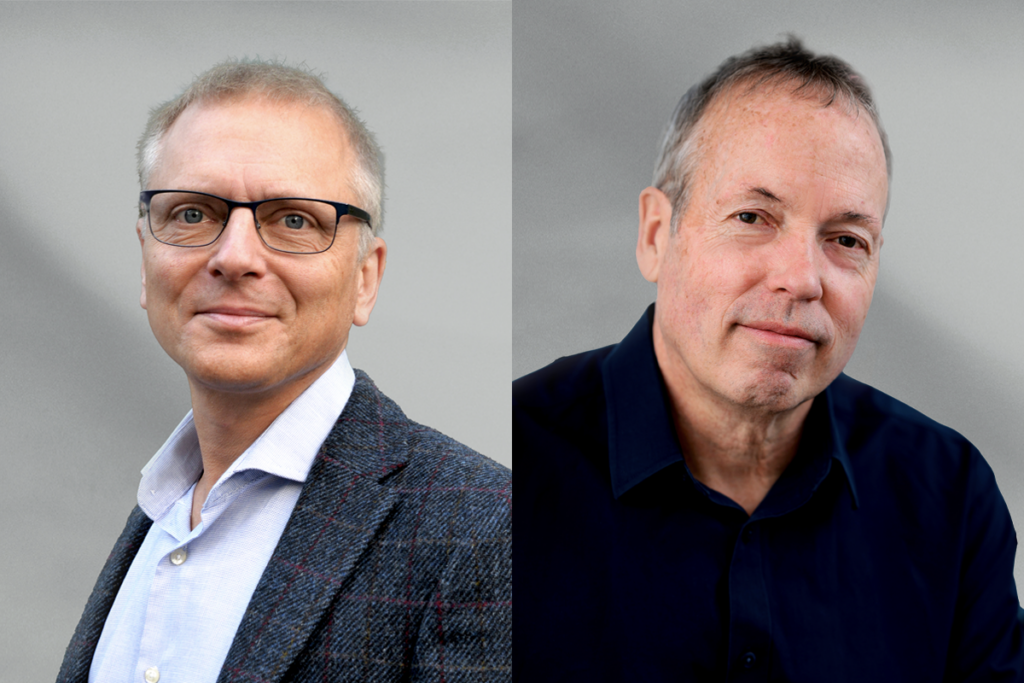

Two neurobiologists win 2026 Brain Prize for discovering mechanics of touch

Research by Patrik Ernfors and David Ginty has delineated the diverse cell types of the somatosensory system and revealed how they detect and discriminate among different types of tactile information.
