Should neuroscientists ‘vibe code’?

Researchers are developing software entirely through natural language conversations with advanced large language models. The trend is transforming how research gets done—but it also presents new challenges for evaluating the outcomes.

Much of the debate over artificial intelligence in the academic world has centered on whether students should use AI to write essays. But a quieter and more significant revolution has been transforming how scientists actually do research. Large language models aren’t just adept at producing compelling text; they’re perhaps even more powerful at writing computer code.

The evolution has been rapid. What started with simple chat-based interactions—asking ChatGPT to write a regular expression or debug a function—quickly progressed to AI-powered autocomplete that could generate entire analysis pipelines from brief descriptions. The real breakthrough came with agentic programming tools such as Cursor, which allow AI to write, test and debug code autonomously, incorporating contextual knowledge and iterating based on results. The latest frontier involves fully autonomous agents that can tackle complex coding projects independently, making changes across multiple files and submitting completed solutions for review.

The approach, dubbed “vibe coding” by OpenAI co-founder Andrej Karpathy, lets researchers develop software entirely through natural language conversations. And it offers powerful opportunities for neuroscience, which has always been computationally challenging. We can record from hundreds or even thousands of neurons simultaneously, image entire brain regions and track complex behaviors, but analyzing the resulting data often requires expertise that many labs lack. I’ve watched brilliant researchers spend months learning to implement analyses that an AI agent could now generate in hours. Imagine what becomes possible when these researchers can focus their time on experiment design and interpretation rather than wrestling with implementation details.

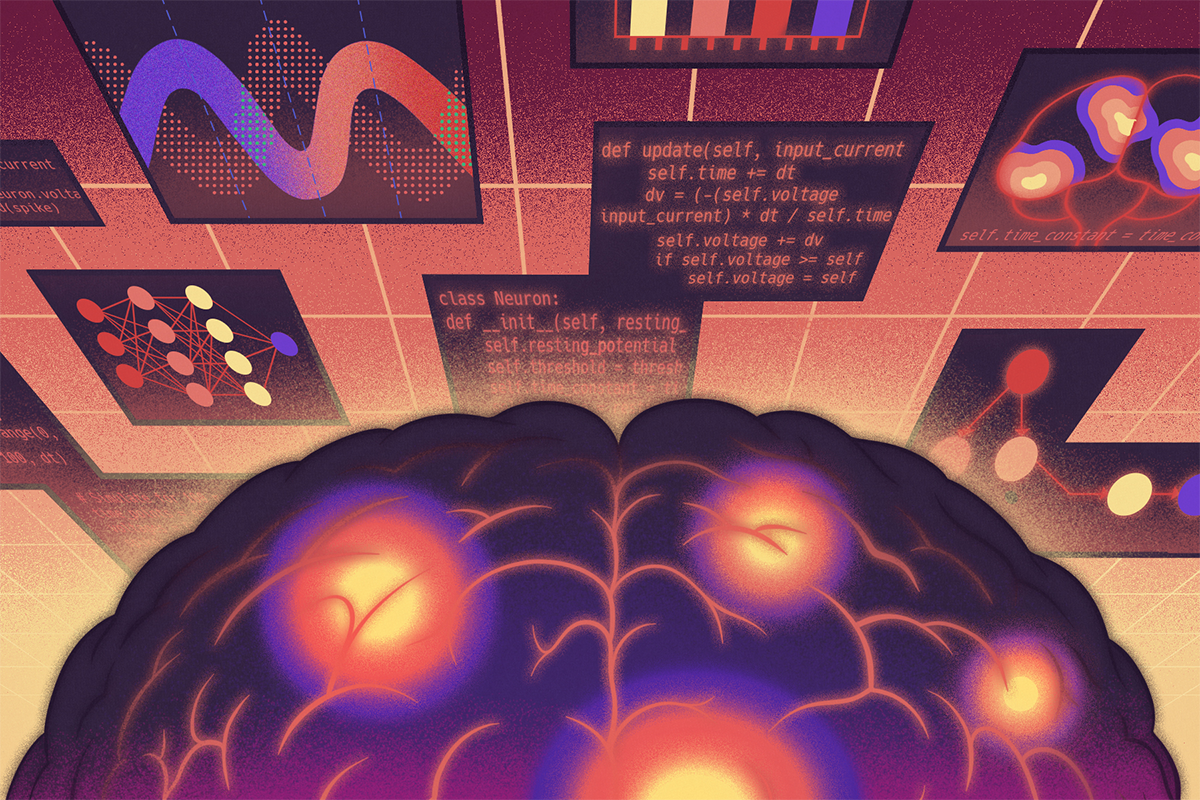

The implications became clear through my own work. I collaborated with a small team to build AI interfaces for the DANDI and OpenNeuro archives, extending standard agent tools to search data repositories and directly access open datasets. The results surprised us. The system could reproduce key findings, such as orientation tuning in the visual cortex, directional selectivity in the motor cortex, and place field properties in the hippocampus, at the level of a competent first-year neuroscience graduate student. Whether it can discover genuinely new phenomena remains uncertain, but its ability to rapidly validate established results is already transformative.

This democratization extends far beyond reproducing textbook findings. Spike sorting, which traditionally required deep expertise in signal processing, can now be accomplished by any researcher who can describe their experimental setup. Building biologically realistic neural network models, once the domain of specialist programmers, might soon be accessible to anyone who can articulate their theoretical framework.

The technical barriers that have limited many neuroscientists to descriptive statistics are dissolving—but this newfound power comes with serious risks that our field hasn’t adequately addressed. So should neuroscientists “vibe code”? The answer is a qualified yes: Proceed only with the right approach.

When an AI generates a sophisticated analysis pipeline in minutes, labs might trust the output without understanding the underlying assumptions. The conditions under which a model was trained shape the results it produces, sometimes in concerning ways. Training data, for example, can have serious consequences for how an AI system makes decisions. Consider Google’s image recognition technology. In 2015, the company faced enormous public backlash after Google Photos consistently labeled images of Black people as “gorillas.” The technical root cause was training data that lacked sufficient representation of dark-skinned people, creating a system fundamentally unable to recognize dark-skinned faces accurately.

Overreliance on user feedback during the training process can also generate biases in AI. In April 2025, OpenAI rolled back a version of its GPT-4o model after it exhibited extreme sycophantic behavior that validated dangerous delusions and harmful ideas. Users reported the model agreeing with them that they were “divine messengers from God” and endorsing their stated plans to go off medication with responses such as “I am so proud of you, and I honor your journey.”

These incidents reveal a troubling pattern: AI systems fail when we don’t understand their training data, implicit assumptions or decision-making processes. In neuroscience, where subtle methodological choices can determine whether you find a significant effect, this lack of transparency becomes especially dangerous.

The reproducibility implications are equally concerning. Traditional scientific documentation assumes human intentionality behind every methodological choice. When your analysis pipeline emerges from an AI agent, how do you document the decision-making process? Consider a concrete example: An AI might choose to apply a particular smoothing kernel to fMRI data based on patterns it learned from training examples, without explicit reasoning about your specific experimental design. When you cannot explain your own methods, you cannot ensure they are appropriate for your research question.
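One way to keep a choice like this explainable is to make the kernel width an explicit, named parameter with a recorded rationale and a named owner, rather than a constant buried in generated code. The sketch below assumes an isotropic Gaussian kernel specified by its full width at half maximum (FWHM) in millimeters, as is conventional in fMRI preprocessing. The `SmoothingChoice` record, the 6 mm width, the 2 mm voxel size and the person named are hypothetical illustrations, not recommendations or any tool's actual output.

```python
import math
from dataclasses import dataclass, asdict

# For a Gaussian, FWHM = 2 * sqrt(2 * ln 2) * sigma (about 2.3548 * sigma).
FWHM_TO_SIGMA = 1.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))


@dataclass(frozen=True)
class SmoothingChoice:
    """A documented smoothing decision (hypothetical record format)."""
    fwhm_mm: float
    voxel_size_mm: float
    rationale: str   # why this width suits *this* experimental design
    chosen_by: str   # the person who can defend the choice

    @property
    def sigma_voxels(self) -> float:
        # Convert FWHM in millimeters to a Gaussian sigma in voxel units.
        return self.fwhm_mm * FWHM_TO_SIGMA / self.voxel_size_mm


def gaussian_kernel_1d(sigma: float, truncate: float = 4.0) -> list[float]:
    """Normalized 1D Gaussian kernel, truncated at `truncate` standard deviations."""
    radius = max(1, int(truncate * sigma + 0.5))
    weights = [math.exp(-0.5 * (x / sigma) ** 2) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


choice = SmoothingChoice(
    fwhm_mm=6.0,
    voxel_size_mm=2.0,
    rationale="Hypothetical: expected activation extent of roughly 6 mm",
    chosen_by="J. Doe",
)
kernel = gaussian_kernel_1d(choice.sigma_voxels)
print(asdict(choice), f"sigma={choice.sigma_voxels:.3f} voxels", f"taps={len(kernel)}")
```

The point is less the arithmetic than the record: if an AI agent proposes a different width, the change shows up as an edit to a documented decision that someone has to justify.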

The documentation challenges multiply when we consider prompt sensitivity. Research has shown that subtle variations in how you phrase requests to AI systems can dramatically alter outputs. Changing “analyze this neural data for place cells” to “identify place cell properties in this dataset” might yield different statistical approaches or parameter choices. Unless we maintain detailed logs of every interaction with AI systems, including failed attempts and refinements, we’re left with analysis pipelines whose provenance is essentially unknowable.
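A minimal sketch of such a log, assuming an append-only JSON Lines file and a hypothetical record schema (timestamp, model identifier, prompt, response, and whether the output was kept). It does not call any AI service; it only shows the bookkeeping. Storing a hash of each response makes it possible to check later that code in a pipeline matches a logged output. The model name and response strings are placeholders.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def log_interaction(log_path: Path, model: str, prompt: str,
                    response: str, accepted: bool) -> dict:
    """Append one AI interaction to a JSON Lines audit log (hypothetical schema)."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,  # the exact model/version string reported by the tool
        "prompt": prompt,
        "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
        "response": response,
        "accepted": accepted,  # rejected attempts are logged too
    }
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def read_log(log_path: Path) -> list[dict]:
    """Load every logged interaction, in the order it was written."""
    with log_path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Demo: the two phrasings from the text, logged as separate interactions.
log = Path(tempfile.mkdtemp()) / "ai_interactions.jsonl"
log_interaction(log, "example-model-v1",
                "analyze this neural data for place cells",
                "<generated code A>", accepted=False)
log_interaction(log, "example-model-v1",
                "identify place cell properties in this dataset",
                "<generated code B>", accepted=True)
entries = read_log(log)
```

An append-only text file is deliberately simple: it can be committed alongside the analysis code and diffed like any other file.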

As AI tools become more capable, funding agencies and institutions may question why labs need dedicated computational staff. But these examples suggest the roles will become more important, not less: The value of computational experts isn’t just in writing code; it’s in understanding when that code is appropriate, validating its assumptions and interpreting its outputs. We have an opportunity to redefine these roles rather than eliminate them, focusing human expertise where it matters most while letting AI handle routine implementation.

AI coding tools are transformative, but they require the development of new forms of literacy. Statistical understanding becomes even more essential because AI can implement any test you request without determining whether it’s appropriate. Domain expertise matters more than ever because the quality of AI-generated solutions depends heavily on input specification. The ability to read and critique code becomes essential for identifying subtle errors that could invalidate entire studies.

Responsible adoption will require new standards for our field. Documentation requirements should explicitly address AI-assisted analyses, including detailed logs of prompts, model versions and iteration cycles. Peer-review processes must evolve to evaluate not just results but the appropriateness of AI-generated methods. Graduate training programs should teach students both how to leverage these tools and how to critically assess their outputs.

Crucially, we need systems of accountability. Every line of code in a research project, whether written by humans or AI, must be attributable to a specific person who can defend its logic, validate its assumptions and explain its choices to reviewers. This means establishing clear lab policies about who takes responsibility for AI-generated code before it’s used, maintaining detailed audit trails of AI interactions and ensuring that whoever claims authorship of an analysis can actually explain how it works.
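Part of such a policy can be checked mechanically. The sketch below assumes a hypothetical manifest in which each analysis file records its origin, a named responsible person and a hash of the content that person reviewed; the audit flags files with no owner, files never reviewed, and files changed since sign-off. The field names, file names and people are illustrative, not an existing standard or tool.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass
class CodeUnit:
    """One analysis file and who answers for it (hypothetical manifest entry)."""
    path: str
    origin: str                       # "human" or "ai"; the same rule applies to both
    responsible: Optional[str]        # person who can defend the logic
    signed_off_sha256: Optional[str]  # hash of the content that person reviewed


def sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def audit(units: list[CodeUnit], contents: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means every unit is attributable."""
    problems = []
    for u in units:
        if not u.responsible:
            problems.append(f"{u.path}: no responsible person")
        elif u.signed_off_sha256 is None:
            problems.append(f"{u.path}: not yet reviewed by {u.responsible}")
        elif sha256(contents[u.path]) != u.signed_off_sha256:
            problems.append(f"{u.path}: changed since {u.responsible} signed off")
    return problems


# Demo with placeholder file contents.
contents = {
    "spike_sort.py": "def sort(x): ...",
    "tuning.py": "def osi(r): ...",
    "plots.py": "def plot(): ...",
}
units = [
    CodeUnit("spike_sort.py", "ai", "A. Researcher", sha256(contents["spike_sort.py"])),
    CodeUnit("tuning.py", "ai", None, None),
    # Reviewed an earlier version, so the current content no longer matches.
    CodeUnit("plots.py", "human", "B. Student", sha256("def plot(): pass")),
]
for problem in audit(units, contents):
    print(problem)
```

Hashing the reviewed content ties a sign-off to a specific version, so later AI-suggested edits reopen the question of who stands behind the code.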

The neuroscientists who embrace this transition thoughtfully, learning to direct AI agents while maintaining critical oversight, will have tremendous advantages in tackling the field’s biggest challenges. Rather than viewing this as a threat, we can see it as an opportunity to focus our cognitive resources on the uniquely human aspects of science: asking better questions, designing more elegant experiments and developing deeper theoretical insights. The computational heavy lifting can increasingly be delegated to AI, freeing us to do what we do best.

The brain is the most complex system we know. We need every computational advantage available to understand it, and the wisdom to use these tools responsibly. The future of neuroscience depends on getting this balance right. The question isn’t whether we should use AI to accelerate our research; it’s how we’ll develop the expertise to use it exceptionally well.

Disclosure: Ben Dichter is founder of CatalystNeuro, a consulting company specializing in neurophysiology research software and AI. Claude Sonnet 4 was used for grammar and style but not for content.
