Neural coding

Recent articles

From genes to dynamics: Examining brain cell types in action may reveal the logic of brain function

Defining brain cell types is no longer a matter of classification alone, but of embedding their genetic identities within the dynamical organization of population activity.

The challenge of defining a neural population

Our current approach is largely arbitrary. We need new methods for grouping cells, ideally by their dynamics.

John Beggs unpacks the critical brain hypothesis

Beggs outlines why and how brains operate at criticality, a sweet spot between order and chaos.

Why the 21st-century neuroscientist needs to be neuroethically engaged

Technological advances in decoding brain activity and in growing human brain cells raise new ethical issues. Here is a framework to help researchers navigate them.

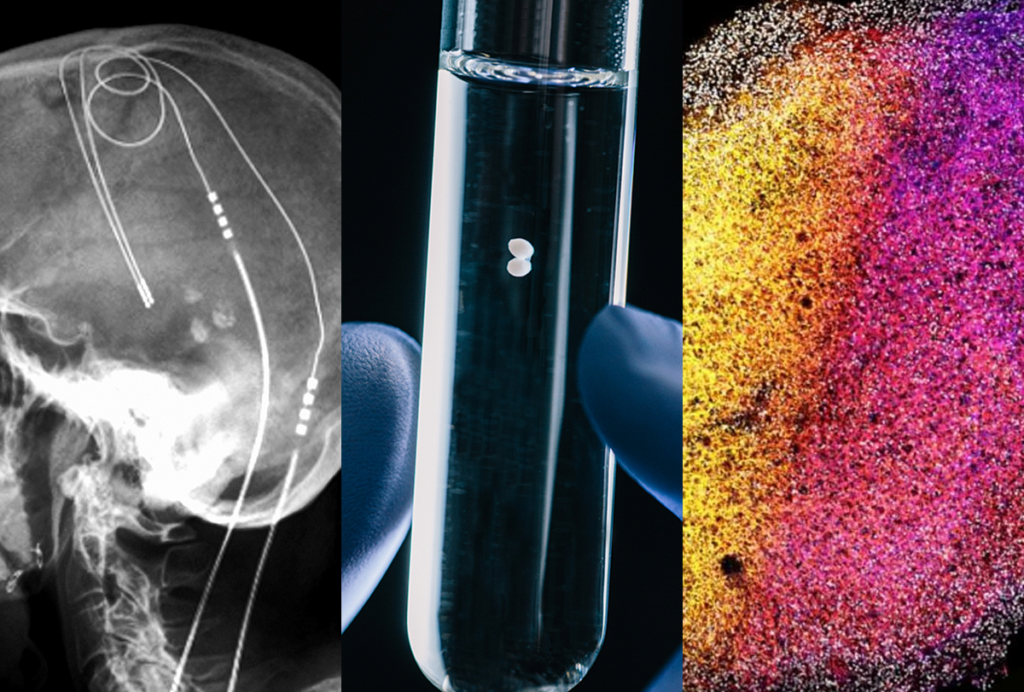

Tracking single neurons in the human brain reveals new insight into language and other human-specific functions

Better technologies to stably monitor cell populations over long periods of time make it possible to study neural coding and dynamics in the human brain.

It’s time to examine neural coding from the message’s point of view

In studying the brain, we almost always take the neuron’s perspective. But we can gain new insights by reorienting our frame of reference to that of the messages flowing over brain networks.

Dmitri Chklovskii outlines how single neurons may act as their own optimal feedback controllers

From logic gates to grandmother cells, neuroscientists have employed many metaphors to explain single neuron function. Chklovskii makes the case that neurons are actually trying to control how their outputs affect the rest of the brain.

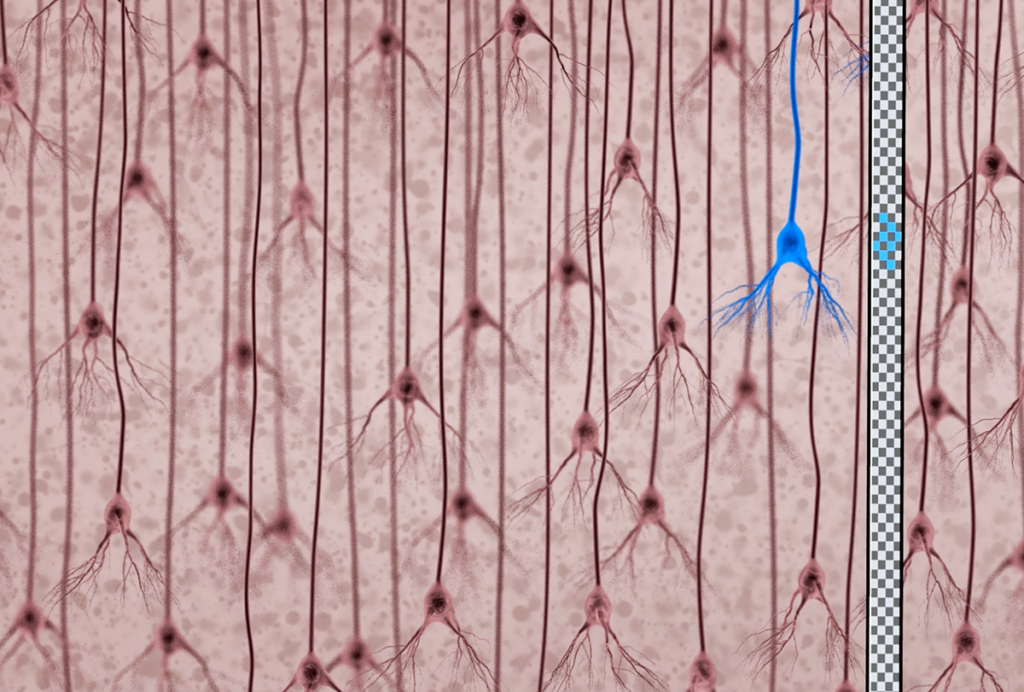

Most neurons in mouse cortex defy functional categories

The majority of cells in the cerebral cortex are unspecialized, according to an unpublished analysis—and scientists need to take care in naming neurons, the researchers warn.

Eli Sennesh talks about bridging predictive coding and NeuroAI

Predictive coding is an enticing theory of brain function. Building on decades of models and experimental work, Eli Sennesh proposes a biologically plausible way our brain might implement it.

The Transmitter’s favorite essays and columns of 2024

From sex differences in Alzheimer’s disease to enduring citation bias, experts weighed in on important scientific and practical issues in neuroscience.

Explore more from The Transmitter

Frameshift: Raphe Bernier followed his heart out of academia, then made his way back again

After a clinical research career, an interlude at Apple and four months in early retirement, Raphe Bernier found joy in teaching.

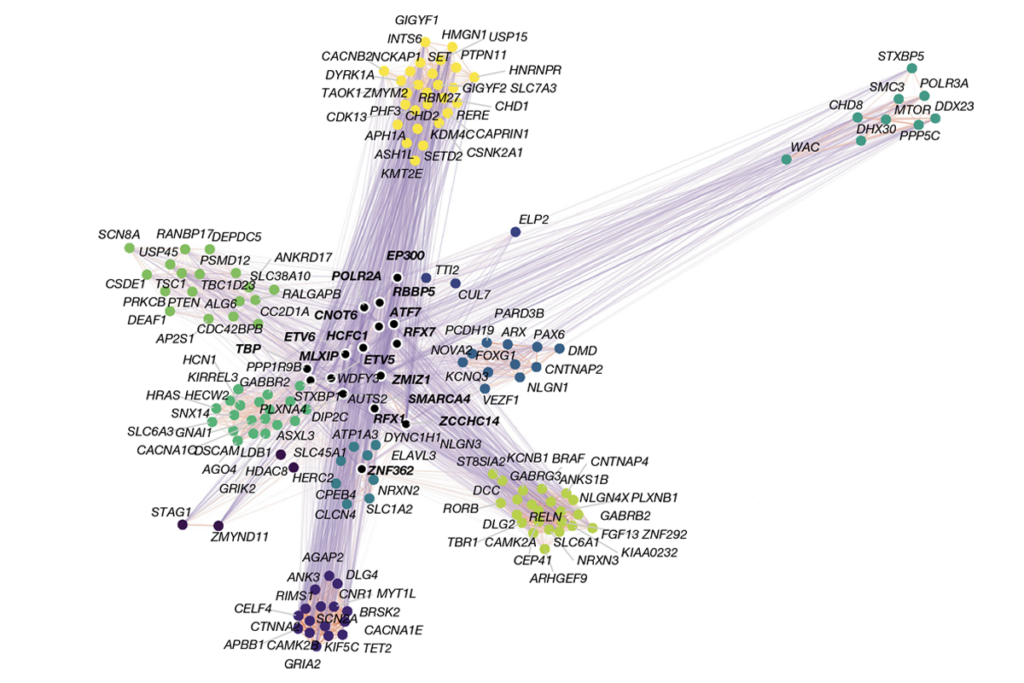

Organoid study reveals shared brain pathways across autism-linked variants

The genetic variants initially affect brain development in unique ways, but over time they converge on common molecular pathways.

Single gene sways caregiving circuits, behavior in male mice

Brain levels of the agouti gene determine whether African striped mice are doting fathers—or infanticidal ones.
