Neuroscience’s open-data revolution is just getting started

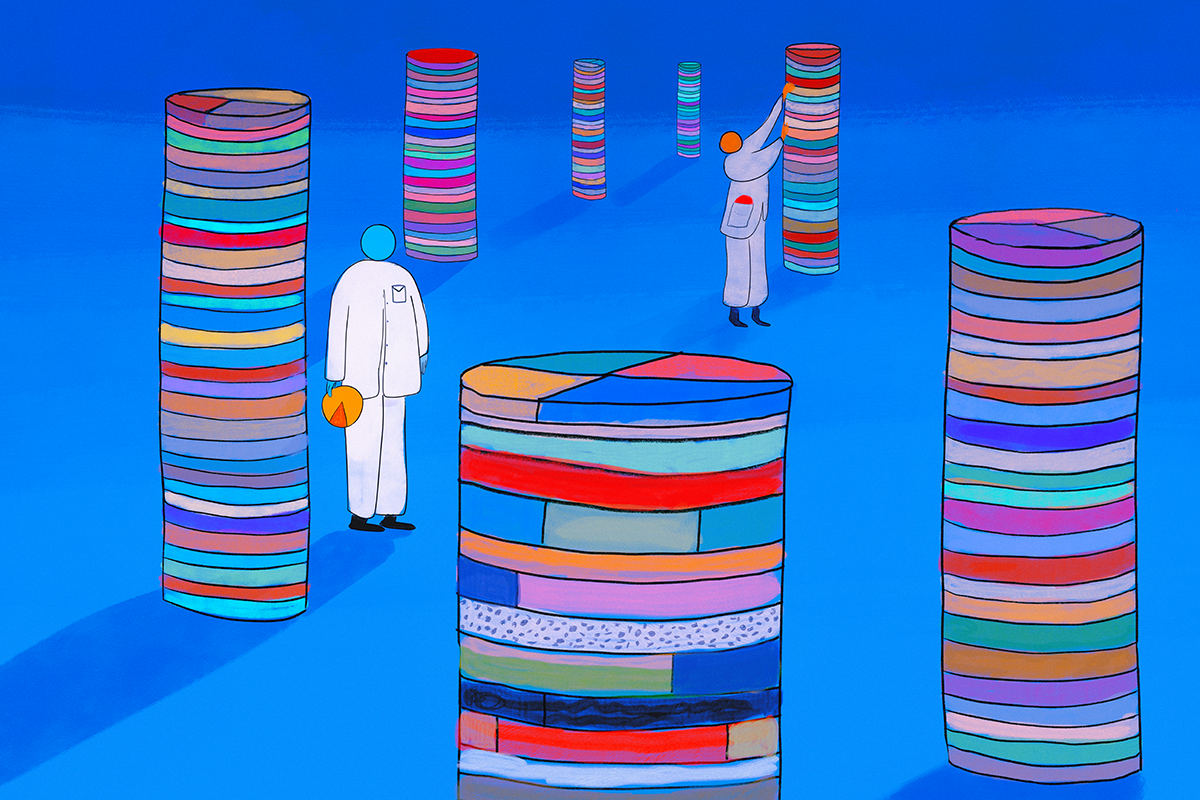

Data reuse represents an opportunity to accelerate the pace of science, reduce costs and increase the value of our collective research investments. New tools that make open data easier to use—and new pressures, including funding cuts—may increase uptake.

When COVID-19 shut down labs worldwide, Jordan Farrell, then a postdoctoral researcher at Stanford University, faced a dilemma. His work on the distinct roles of dentate spikes and sharp wave ripples in the hippocampus demanded extensive neural recordings from multiple brain regions simultaneously—a daunting experimental setup in the best of times and nearly impossible during a pandemic. But rather than abandoning his project, Farrell turned to open datasets from the Allen Institute and the lab of Kenneth Harris and Matteo Carandini at University College London, which contained simultaneous recordings from multiple Neuropixels probes across brain regions.

By cleverly combining these open resources with targeted experiments once restrictions eased, Farrell uncovered something remarkable: Whereas sharp wave ripples mediate an introspective planning state, dentate spikes activate an observational state. Farrell, now assistant professor of neurology at Harvard University, continues to use open data as a key resource in his lab.

Stories such as Farrell’s clearly illustrate the benefits of data reuse: Analyzing existing data instead of collecting it yourself accelerates your research, lowers the cost of science and decreases the number of animal lives required. It also builds trust in your findings, because it is much harder to doctor someone else’s data undetected than it is to doctor your own.

Multiple papers published in the past few years demonstrate that it’s possible to test novel hypotheses with open data—and publish the results in prestigious journals. In 2023, Richard Burman and his colleagues at the University of Oxford published a paper in Neuron on the effects of anesthesia on brain activity, leveraging data from the Allen Institute. In a 2023 Nature paper, Steffen Schneider and his colleagues at École Polytechnique Fédérale de Lausanne used open data to demonstrate the generalizability of CEBRA, a tool for dimensionality reduction of neural and behavioral data. At the Janelia Research Campus, Marius Pachitariu’s Nature Methods paper on the Kilosort4 spike sorting algorithm and Carsen Stringer’s Nature Neuroscience paper on Rastermap, both published last year, relied heavily on open datasets to showcase their tools. In a 2023 eLife paper, Saskia de Vries reported more than 100 papers or preprints using data from Allen Brain Observatory visual coding experiments. And the list doesn’t end there.

A bounty of data remains ripe for the picking. Over the past five years, the number of datasets shared to open archives, along with the infrastructure needed to support them, has blossomed, driven largely by support from the BRAIN Initiative and private foundations. The DANDI archive, for example, now houses more than 350 open neurophysiology datasets, comprising more than 350 terabytes of data. Researchers studying the role of the hippocampus in navigation have led the way, with several labs contributing 60 hippocampal neurophysiology datasets to DANDI. The 11 public OpenScope datasets provide unparalleled opportunities for studying predictive coding in the visual domain. The multi-lab Brain Wide Map from the International Brain Laboratory provides state-of-the-art Neuropixels electrophysiology recordings across an array of brain areas for a visual decision-making task. MICrONS data offer an unprecedented link between high-density neurophysiology recordings and electron microscopy.

Data-sharing is only half the story—to be successful, open data need to be used by other researchers to drive new discoveries. But to date, the field hasn’t placed equal emphasis on the reuse side of the data-sharing equation. Comparatively few researchers are taking advantage of these and other datasets, often because of a lack of awareness, along with uncertainty over how to use them. Scientists may not know what data are available or may struggle with the technical aspects of accessing and analyzing them. Moreover, the culture of neuroscience has traditionally valued collecting new data over reusing existing datasets, creating a perception that high-impact work always requires novel data collection.

I believe the opposite is true: Labs that don’t embrace open data will increasingly find themselves at a competitive disadvantage, missing opportunities to accelerate their research and validate their findings.

Things are beginning to change, with the advent of new tools that make datasets easier to work with. Datasets on dandiarchive.org are easy to search and can be downloaded in seconds, without a login or any coding. (Programmatic interfaces are also available for those who prefer them.) All of the neurophysiology data on DANDI follow the Neurodata Without Borders standard, which packages data with the metadata necessary for reanalysis. The data formats are optimized for local, cluster and cloud computing. The Neurosift application lets anyone browse these datasets in the browser, code-free, without downloading or installing anything. Pynapple makes it easy to load and analyze these datasets.

To further support data reuse, four years ago I started NeuroDataReHack, a free, week-long summer school supported by the Janelia Research Campus. During that week, participants learn to incorporate open data into their work and bolster findings in existing projects. They leave as believers in open science, ready to teach their communities how to use open data.

Analogous efforts are ongoing in other domains of neuroscience. Sharing of neuroimaging data dates back more than 20 years, but reuse of data has grown significantly in the past decade, largely because of high-value datasets such as the Human Connectome Project, wide adoption of the Brain Imaging Data Structure (BIDS) standard, and the OpenNeuro archive, which hosts more than 1,000 standardized, openly accessible neuroimaging datasets. Integrated tools, such as BrainLife, and training courses, such as NeuroHackademy and the African Brain Data Science Academy, lower the barrier to integrating these datasets into current projects.

To be clear, a greater emphasis on using open data does not mean we should stop collecting new data. Rather, it means justifying data collection in the context of data that already exist. New experiments will remain essential as new technologies emerge and new scientific questions arise. The proportion of questions answerable by existing open data will grow, but science will always present more questions that require new data.

If you’re planning a study, search DANDI or OpenNeuro first to see whether relevant data already exist. If you’re teaching, incorporate open datasets into your curriculum. If you’re reviewing papers or grants, ask authors whether they have considered how existing data might complement their work. When reviewing a paper, consider asking the authors whether they can reproduce their findings on existing datasets. When reviewing a grant proposal, expect researchers to justify new data collection in light of the data that already exist.

The infrastructure is built. The data are waiting. Now it’s time to use them.

Disclosures: Benjamin Dichter is founder of CatalystNeuro, a software consulting company that specializes in open data in neurophysiology, and through this company has a financial stake in the success of Neurodata Without Borders and DANDI. Dichter used Claude 3.7 Sonnet for grammar and style, not for content.
