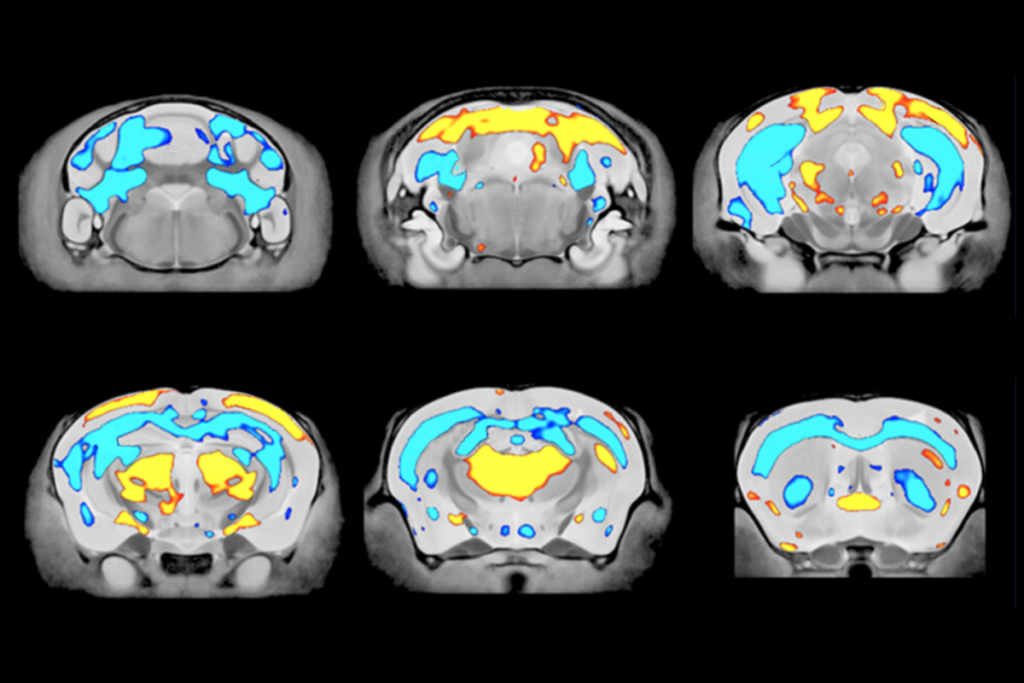

For the past 15 years, Peter Kind, professor of neuroscience at the University of Edinburgh, has tracked a worrying statistical error: pseudoreplication, or analyzing samples from the same animal as if they were independent specimens. This practice can make effects look statistically significant when they are not. For example, in an experiment with two groups, three mice per group and six cells from each mouse, pseudoreplication can give a p-value of 0.05 when a correct analysis of the same data gives 0.33.
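To make the mechanism concrete, here is a minimal simulation sketch of the same design (two groups, three mice per group, six cells per mouse) in which there is no true group difference. It is not the study's analysis and does not reproduce the 0.05 and 0.33 figures above. The variance settings (`mouse_sd`, `cell_sd`), the number of simulated experiments and the choice of a pooled-variance t-test are all assumptions made for illustration. The sketch compares how often a test on all 36 cells and a test on the six per-mouse means cross the 5 percent threshold by chance.

```python
import random
import statistics

# Two-sided 5% critical values of Student's t distribution.
T_CRIT_CELLS = 2.032  # df = 34: 36 cells minus 2 group means
T_CRIT_MICE = 2.776   # df = 4: 6 mice minus 2 group means


def t_stat(a, b):
    """Pooled-variance two-sample t statistic."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * statistics.variance(a)
              + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    return (statistics.mean(a) - statistics.mean(b)) / (pooled * (1 / na + 1 / nb)) ** 0.5


def simulate_group(rng, n_mice=3, n_cells=6, mouse_sd=1.0, cell_sd=1.0):
    """Return one list of cell values per mouse.

    Cells from the same mouse share that mouse's random offset,
    so they are correlated rather than independent.
    """
    group = []
    for _ in range(n_mice):
        mouse_offset = rng.gauss(0, mouse_sd)
        group.append([mouse_offset + rng.gauss(0, cell_sd) for _ in range(n_cells)])
    return group


def false_positive_rates(n_sims=2000, seed=0):
    """Fraction of null experiments called significant by each analysis."""
    rng = random.Random(seed)
    cell_hits = mouse_hits = 0
    for _ in range(n_sims):
        g1, g2 = simulate_group(rng), simulate_group(rng)
        # Pseudoreplicated analysis: every cell counts as a replicate.
        cells1 = [c for mouse in g1 for c in mouse]
        cells2 = [c for mouse in g2 for c in mouse]
        if abs(t_stat(cells1, cells2)) > T_CRIT_CELLS:
            cell_hits += 1
        # Animal-level analysis: one mean per mouse.
        means1 = [statistics.mean(mouse) for mouse in g1]
        means2 = [statistics.mean(mouse) for mouse in g2]
        if abs(t_stat(means1, means2)) > T_CRIT_MICE:
            mouse_hits += 1
    return cell_hits / n_sims, mouse_hits / n_sims


if __name__ == "__main__":
    per_cell, per_mouse = false_positive_rates()
    print(f"false-positive rate, cells as replicates: {per_cell:.3f}")
    print(f"false-positive rate, mouse means:         {per_mouse:.3f}")
```

Under these assumptions, the per-cell analysis should flag far more than 5 percent of null experiments as significant, while the per-mouse analysis should stay close to the nominal rate. Averaging per mouse is only the simplest correction; it is not the mixed-model approach the researchers recommend later in the interview.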

Nevertheless, the practice is widespread in neuroscience, according to a new study co-led by Kind and published last month in Molecular Autism. The team combed through 345 mouse studies of fragile X syndrome: 150 published from 2001 to 2012, and 195 published from 2013 to 2024. The second period began after Nature issued new statistical reporting guidelines that demanded greater transparency and documentation, with most other journals soon following suit. The team also scrutinized 300 mouse studies of neurological disorders more generally, choosing only those published in top neuroscience journals and again dividing them into two groups based on publication date.

More than half of the papers had at least one figure that involved pseudoreplication. After Nature’s stricter guidelines were published, the prevalence of suspected pseudoreplication increased by about 14 percent for fragile X studies and 17 percent for neurological disorder studies generally.

The Transmitter spoke with Kind and his co-author Constantinos Eleftheriou, a postdoctoral researcher at the University of Edinburgh, about why pseudoreplication is so common and what researchers and journals can do about it.

This interview has been lightly edited for length and clarity.

The Transmitter: Why is pseudoreplication on the rise?

Peter Kind: I don’t think it has actually gotten worse. I think that because reporting has gotten better and the journals are demanding more clarity on the statistics used, it made pseudoreplication a lot easier to detect.

I suspect most people aren’t aware of the statistical issue of pseudoreplication, so they don’t necessarily know they’re doing anything wrong. They think each cell can be treated as an independent replicate, when really, two cells in the same brain or two spines on the same dendrite are not affected independently by anything that the animal is going through. And by misrepresenting the independent replicate, their study gains more artificial power, if you will, and that leads to more significant results, which is what’s favored in science.

TT: What needs to change to stop this practice?

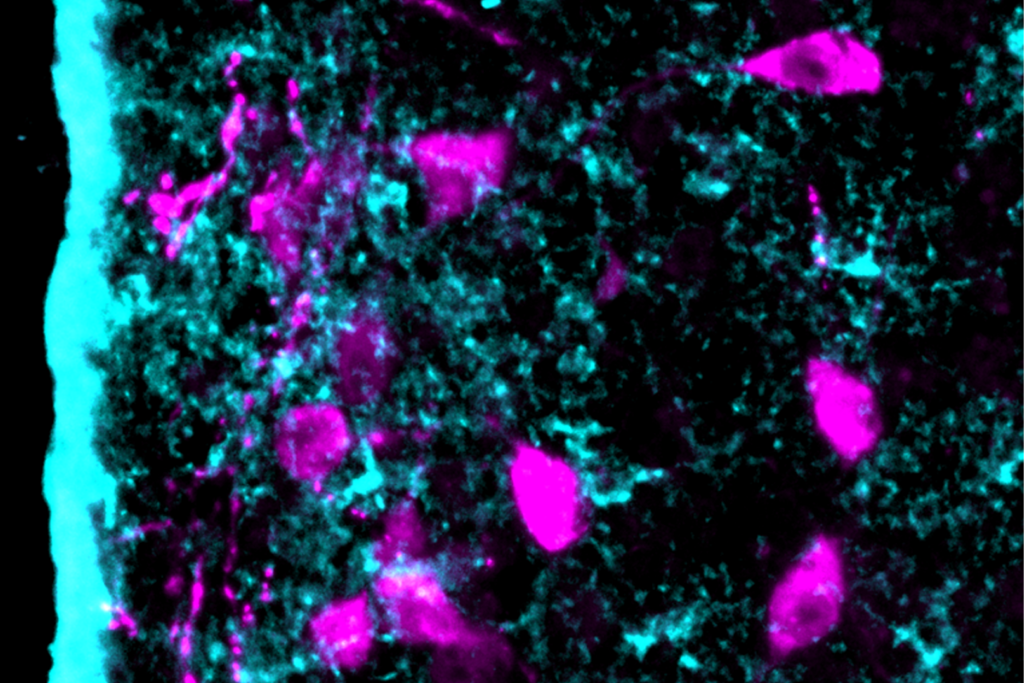

PK: We need to start treating our statistics with the same respect that we do our experimental design. We spend months learning to use a two-photon microscope, for example. We have to put as much time and effort into learning more complex statistics so we can apply the correct statistical approach.

Constantinos Eleftheriou: It also needs to feature more in science education. A lot of the feedback we’ve gotten on the paper has been, “Well, we just didn’t know; no one’s ever told us what pseudoreplication is.”

TT: What role can journals and reviewers play in ending this practice?

PK: It should be the journals, not necessarily the reviewers, taking responsibility. When I review and I bring up pseudoreplication, the common response from study authors is that this is standard in the field. How do you change that culture?

The reporting standards have been a huge help. Before, we couldn’t even tell if a study was pseudoreplicating. But it would be really nice to see journals go a step further and take responsibility for saying that what’s published in their journals is of high statistical rigor—which means not publishing, where appropriate.

TT: Using more mice is costly, but is that the only way to avoid pseudoreplication?

PK: It’s a common falsity that people just need to use more animals. That’s not ideal either, because it leads to more false negatives. In our lab, we have not increased our use of animals at all.

To do statistics with samples from the same animals requires alternative models like linear mixed models (LMMs) or general linear mixed models. LMMs take into account the variability within an animal and the variability between animals and then give you a genotype result. These models take longer to learn, and each dataset is different and has to be modeled independently.

Using LMMs has, if anything, slightly reduced our number of animals, because you get all the power of the within- and between-subject variability.
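As a rough sketch of the kind of model described in this answer, the simplest LMM for cells nested within animals is a random-intercept model. The notation below is illustrative and is not taken from the study: y_ij is the measurement from cell j in animal i, g_i is an indicator for that animal's genotype, u_i is an animal-specific offset, m is the number of animals per group and k is the number of cells per animal.

```latex
y_{ij} = \beta_0 + \beta_1 g_i + u_i + \varepsilon_{ij},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2),
\qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma_\varepsilon^2)

% Variance of a group mean built from m animals with k cells each
\operatorname{Var}(\bar{y}) = \frac{\sigma_u^2}{m} + \frac{\sigma_\varepsilon^2}{m k}
```

Here the genotype effect is the coefficient on g_i, the between-animal variability is captured by the variance of u_i, and the within-animal variability by the variance of the residual term. The second expression shows why extra cells cannot replace animals: adding cells shrinks only the second term, while the first depends solely on the number of animals.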

TT: Considering how prevalent this issue is, what kinds of results should we be taking a second look at?

PK: If you’re reading a paper with three mice in each category but hundreds of replicates per animal, that’s a paper that you need to think hard about. Hopefully that group has put their data online somewhere, and it can be reanalyzed with the right statistics. That’s happening more and more with open science.

CE: On the flip side, just because a paper has pseudoreplication doesn’t necessarily invalidate the results.

PK: It’s just something to identify, like any other technical aspect of the paper.

TT: Can pseudoreplication explain a lack of reproducibility for certain results?

CE: Pseudoreplication increases the chance of seeing an effect that isn’t there. And science is self-correcting: results that are due to pseudoreplication don’t get carried forward.

PK: That’s the origin of this entire project. When we were working on fragile X 15 years ago, we could not reproduce the long, thin spine finding that every lab seemed to be reporting. We identified pseudoreplication in a lot of fragile X papers with spines.

CE: Again, it all boils down to that pressure from journals to publish statistically significant results, which means results that yield a p-value of less than 0.05.

PK: But publishing descriptive statistics with effect sizes and variance is often more informative than what the p-value is.

TT: Is pseudoreplication likely to continue in the future?

CE: Techniques such as high-density electrodes and wider-field two-photon imaging are actually driving us toward recording from more cells. Many of these studies span multiple days, which makes the statistics even more complicated. So I think our techniques are advancing at a faster pace than our statistical education, perhaps.

PK: But I think people are getting more savvy to the problem. If we repeat this study in another 5 to 10 years, my guess is it will drop. I hope it drops.